Recon is a team of invariant testing engineers and security researchers that provide invariant testing as a service while also developing tools and educational content to make it easier for anyone to test invariants on their smart contracts.

Our goal is to make Invariant Testing a baseline for all projects building on the EVM.

We're amongst the most prolific invariant testing writers, with our template being used over 300 times by multiple professionals.

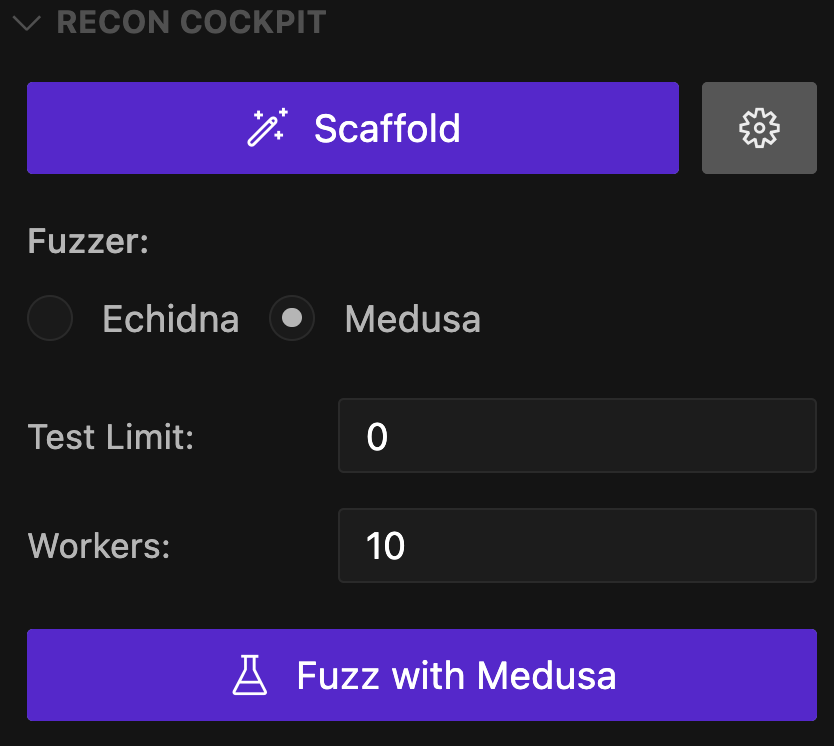

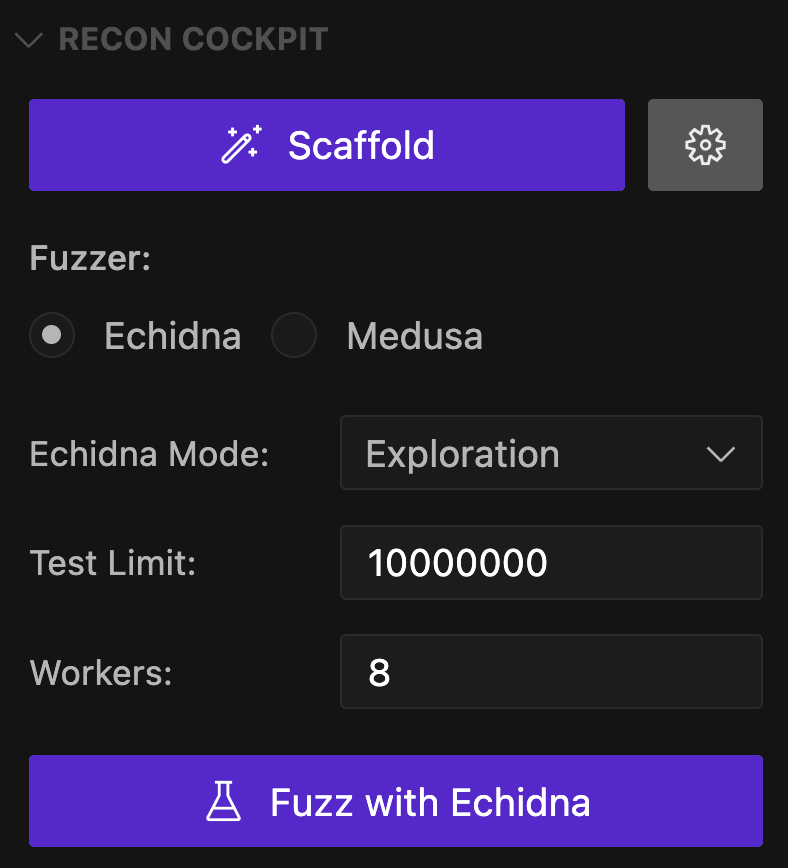

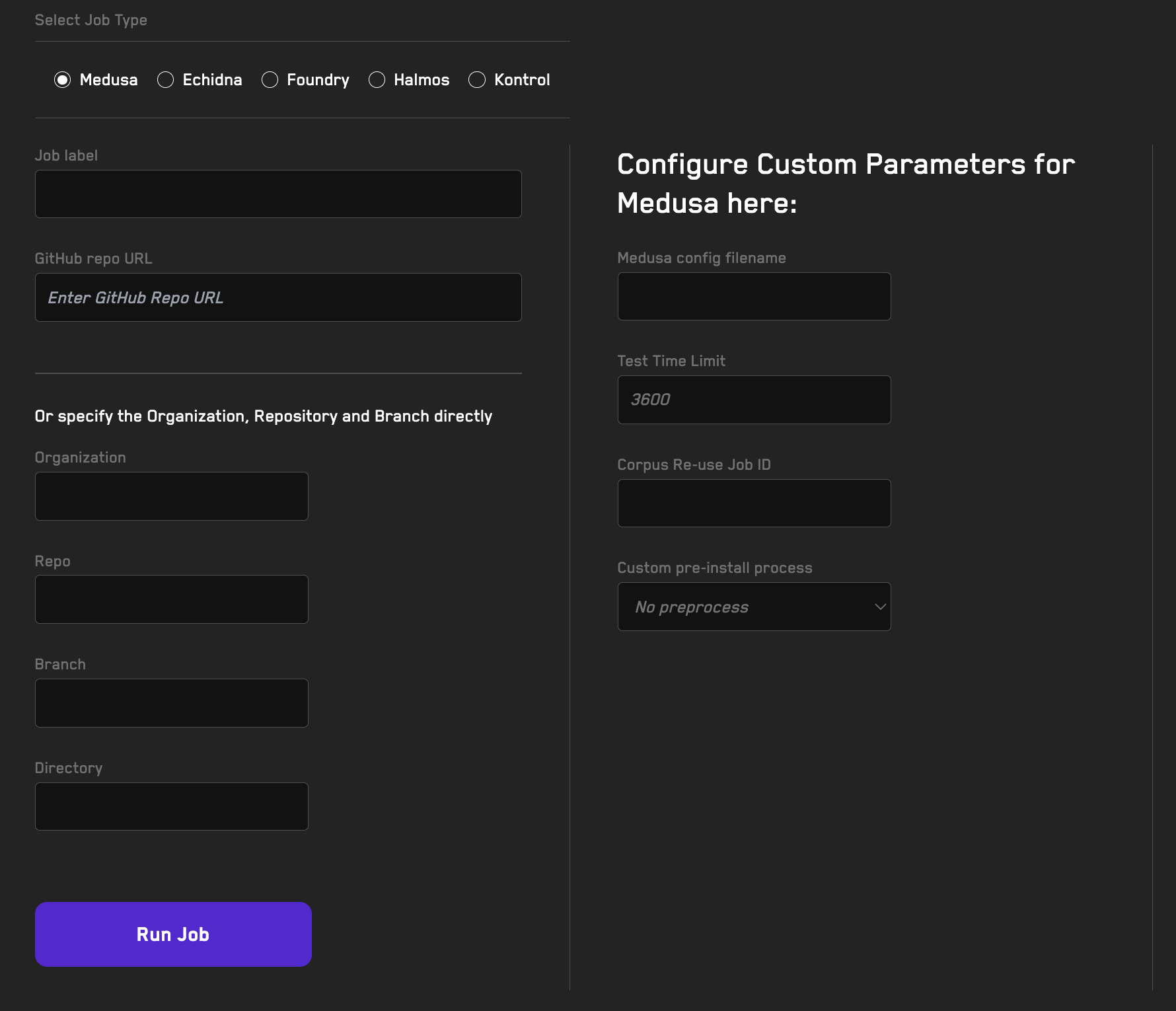

Recon Pro is a tool for running invariant tests in the cloud.

- Video Tutorial: Intro to Recon Pro V2 (1min)

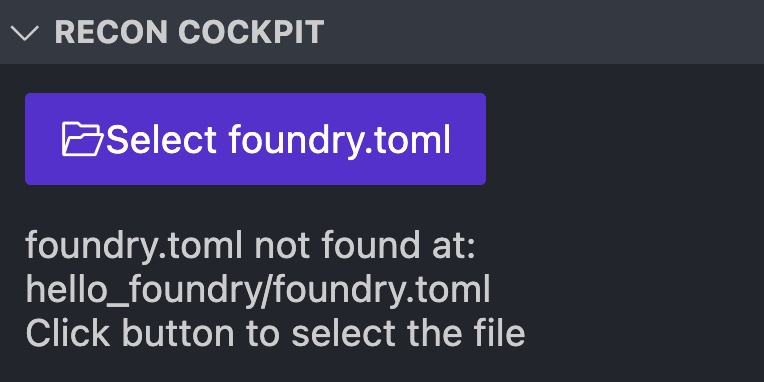

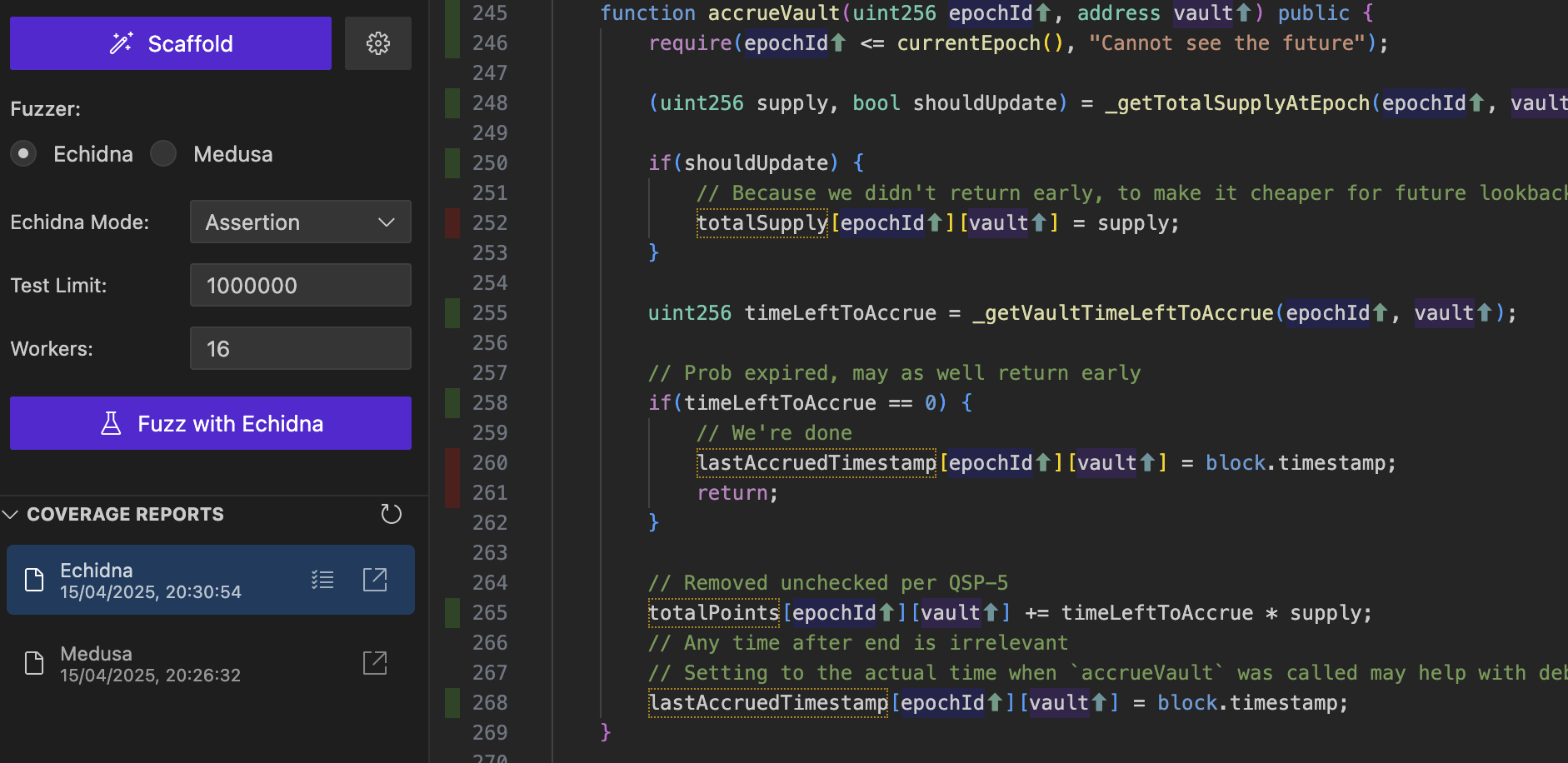

The Recon Extension is an open source VS Code Extension to scaffold a Invariant Testing Boilerplate using the Chimera Framework.

Navigating the Book

Writing Invariant Tests

If you're new to invariant testing with fuzzing, use the Learn Invariant Testing section to learn the background info you'll need to get started.

You can then follow along with the Example Project to see how to set up a project using the Chimera Framework and Create Chimera App template.

Once you've gotten the hang of writing invariants and are using them in real projects, check out the Advanced Fuzzing Tips section for best practices we've developed after using our Chimera framework for over a year on dozens of engagements.

Building Handlers

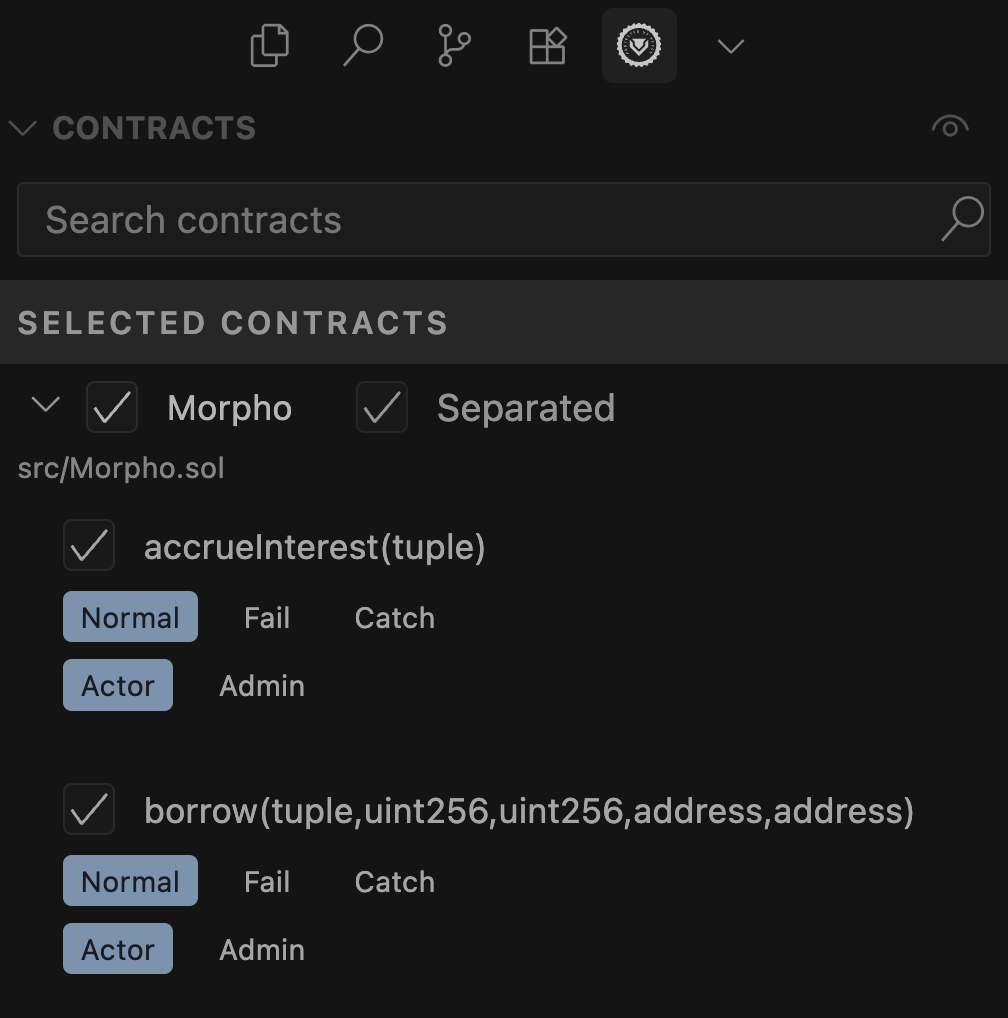

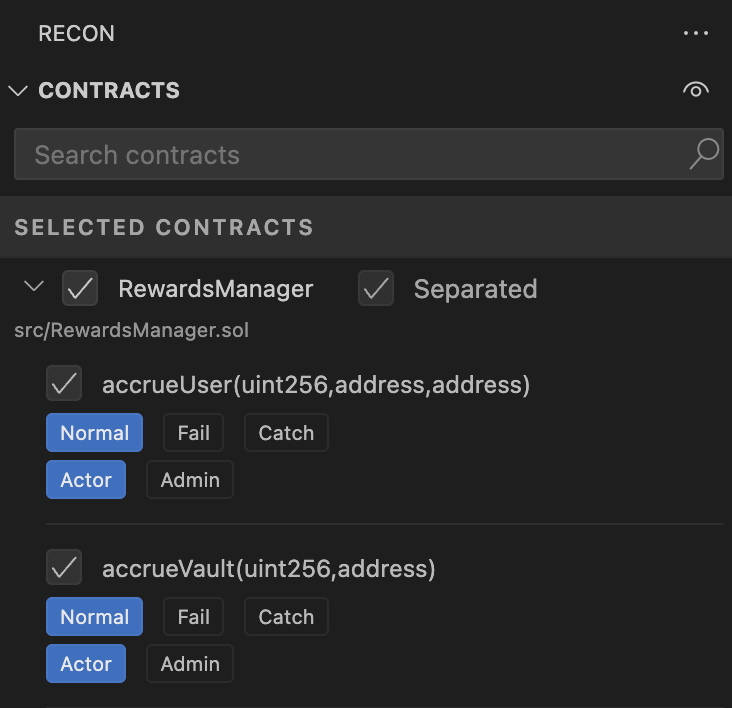

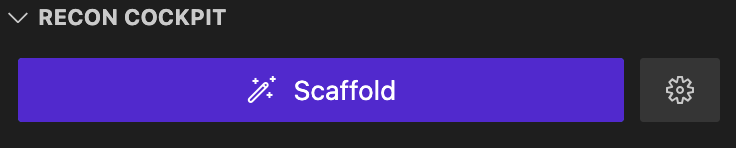

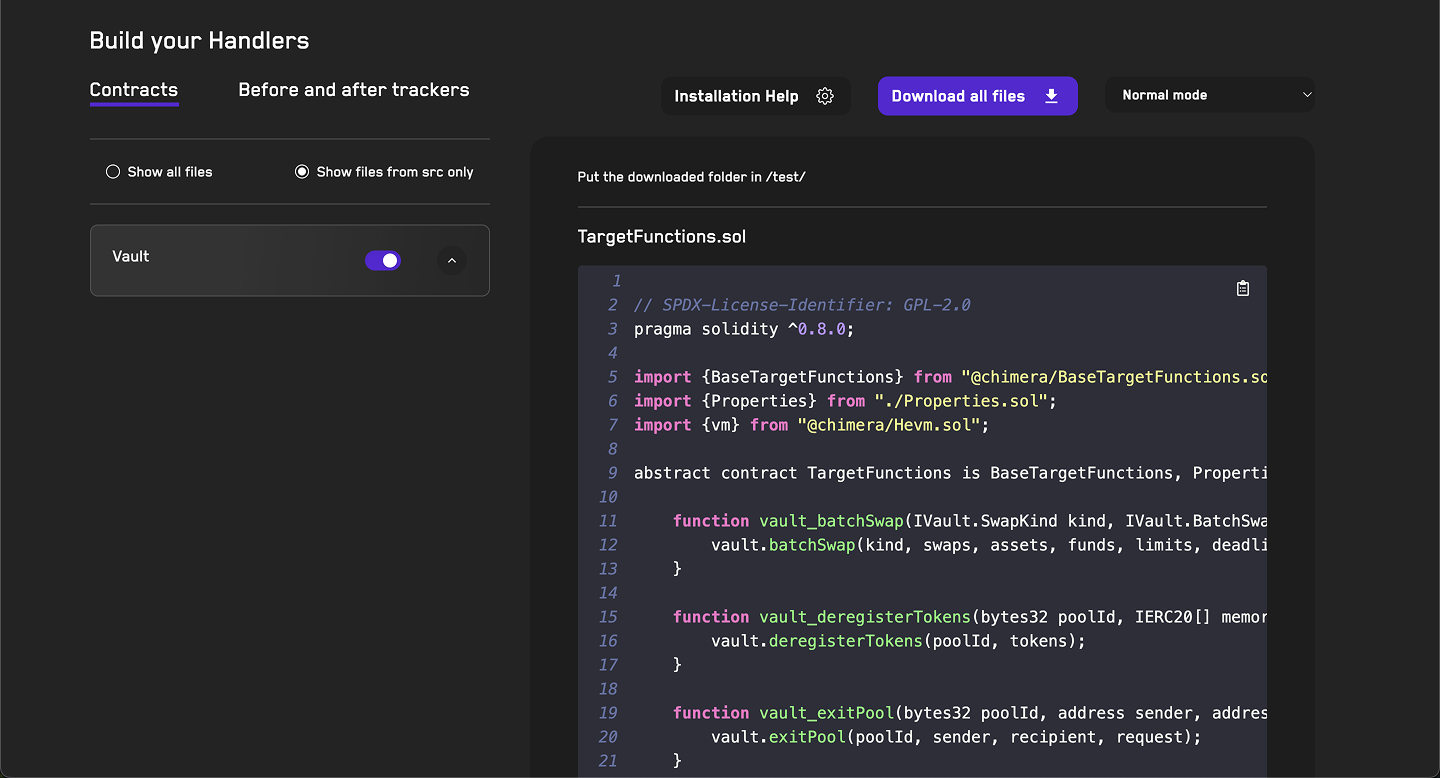

Learn how to use the Recon UI to add a test harness to your Foundry project.

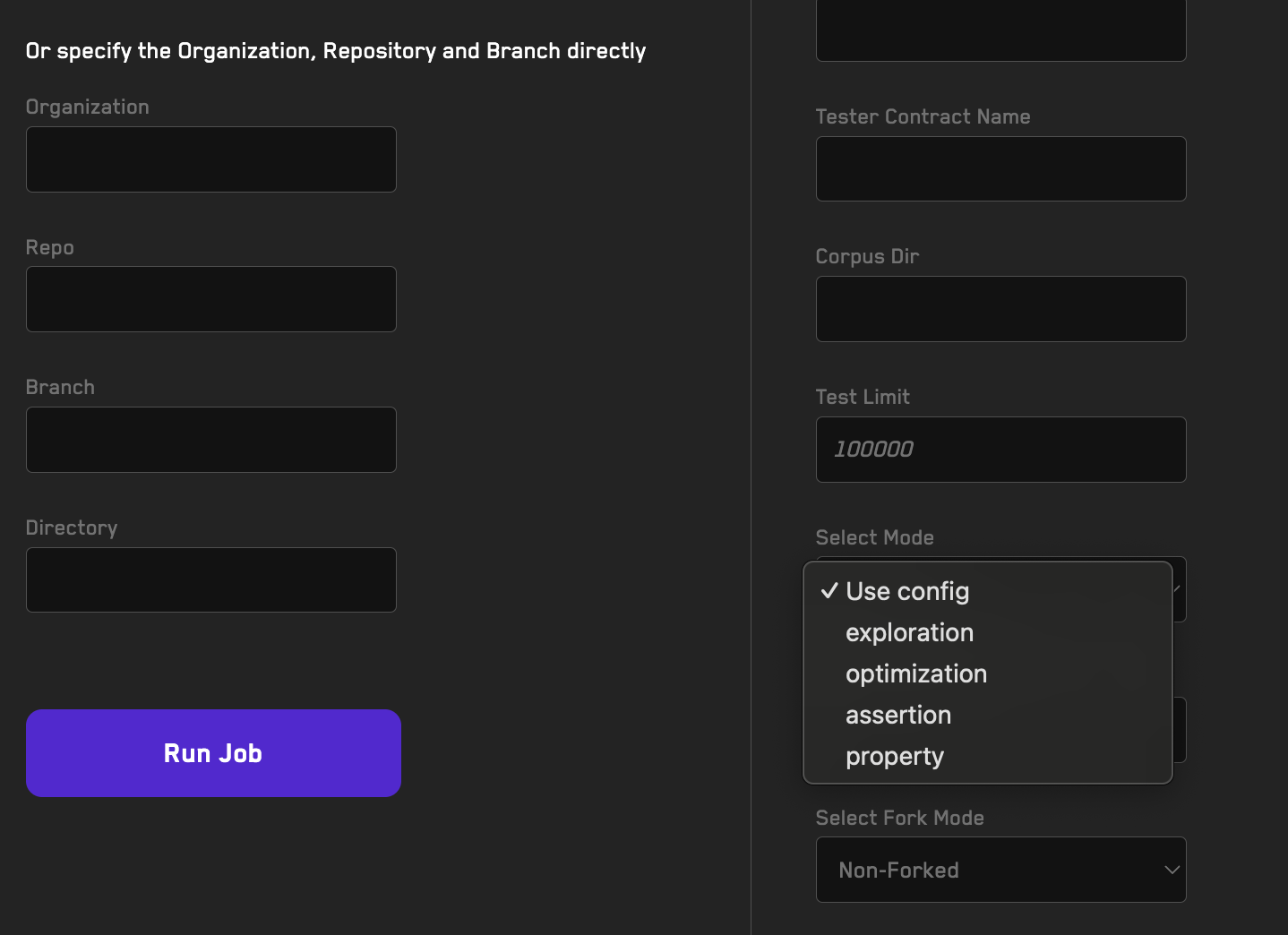

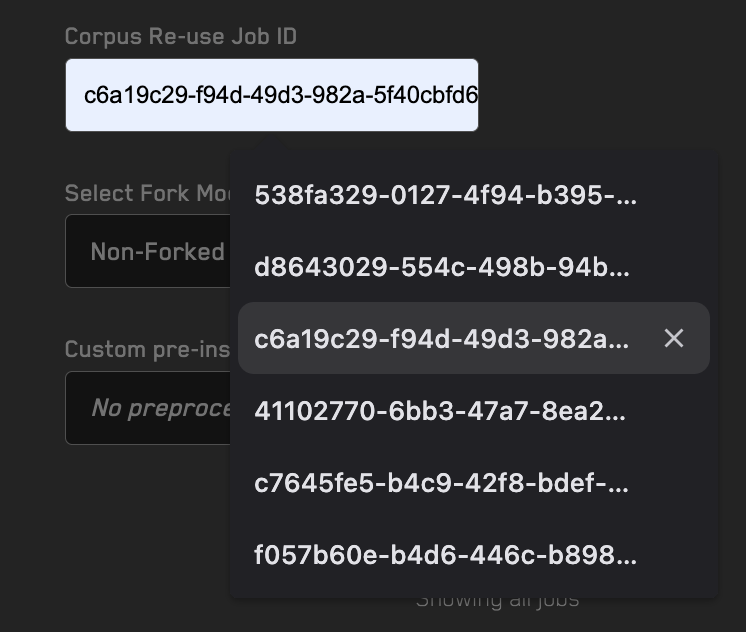

Running Jobs

Learn how to offload a fuzzing job using the Recon cloud runner.

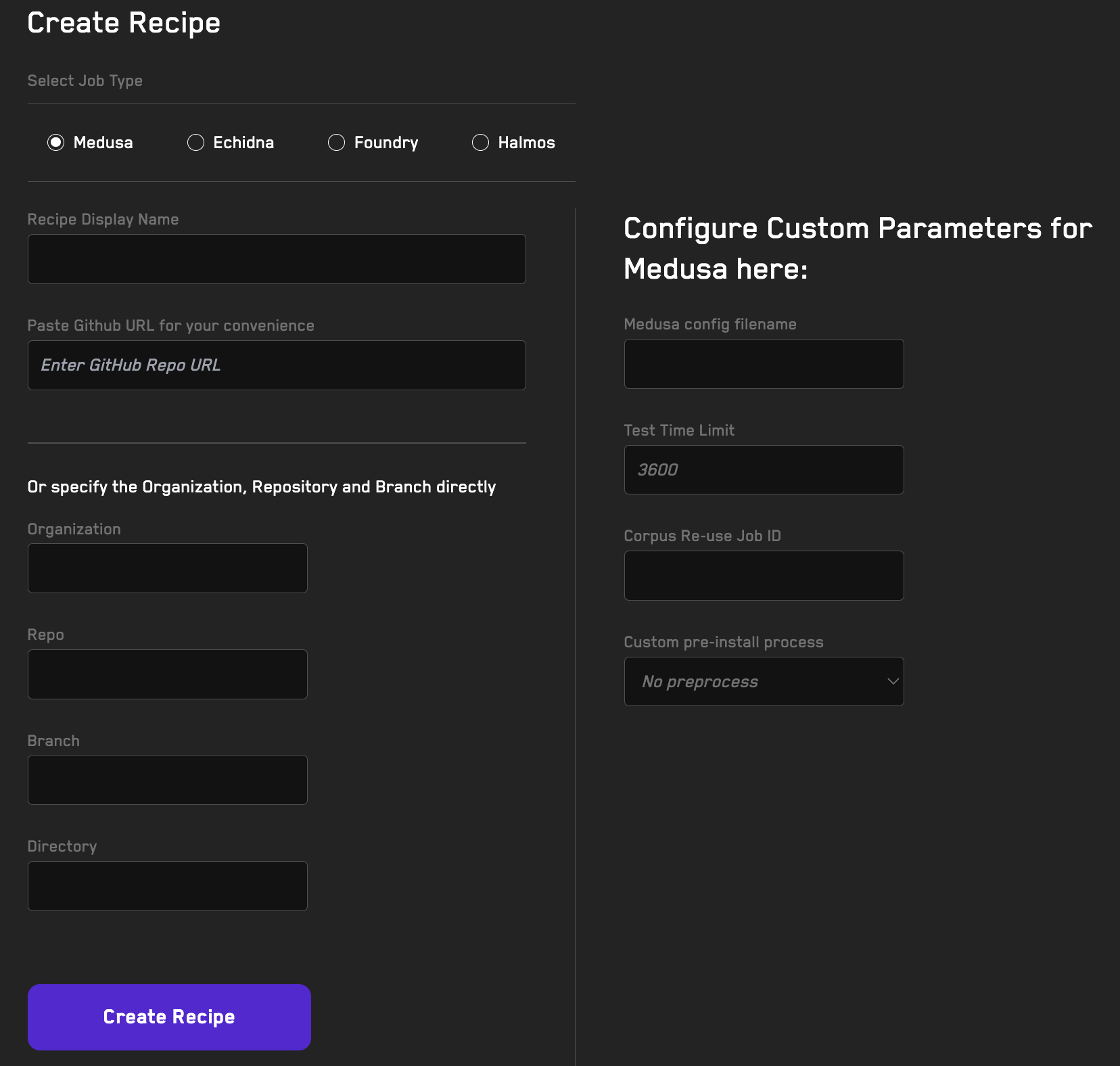

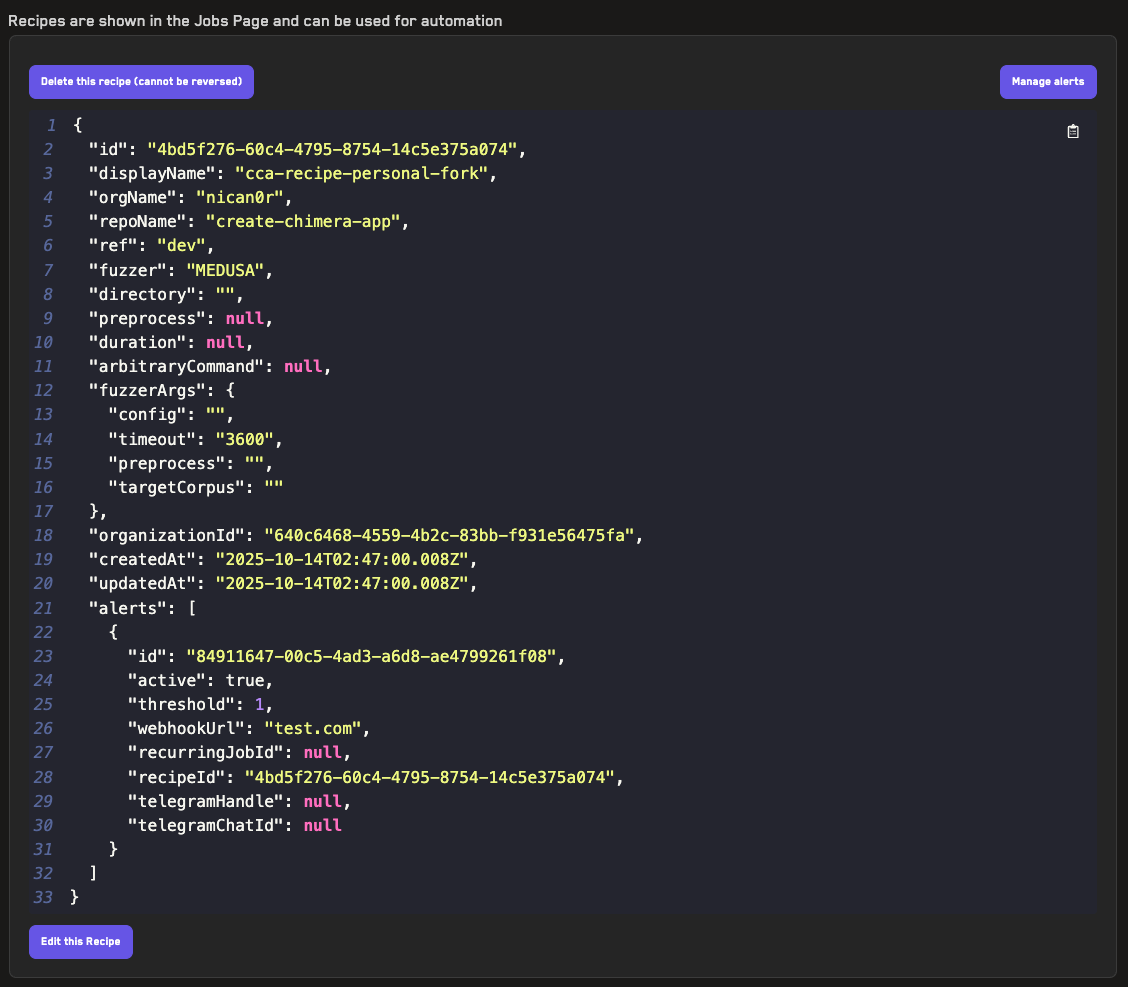

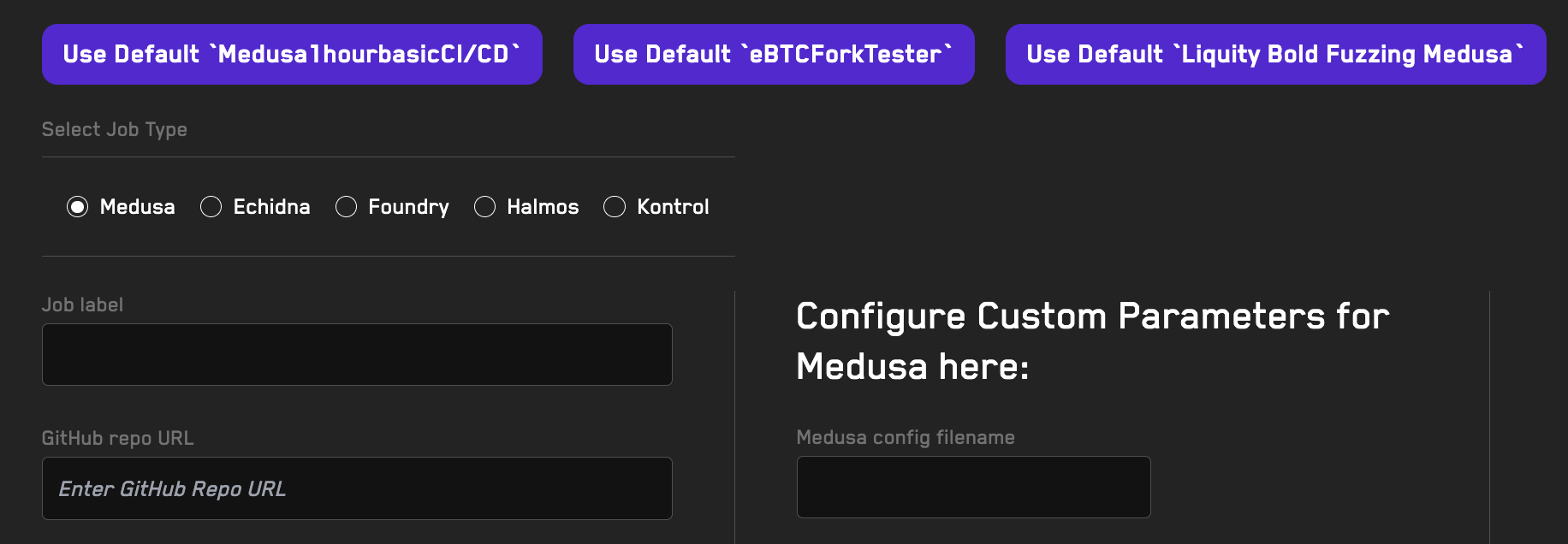

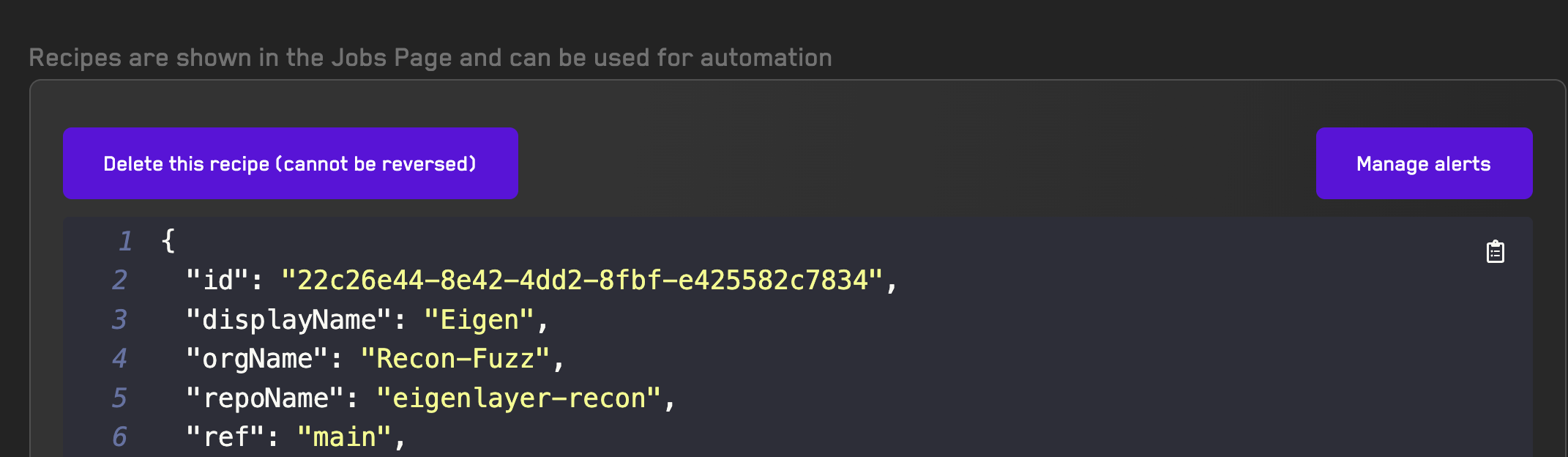

Using Recipes

Learn how to reuse fuzzer configurations when running jobs on the Recon cloud runner using recipes.

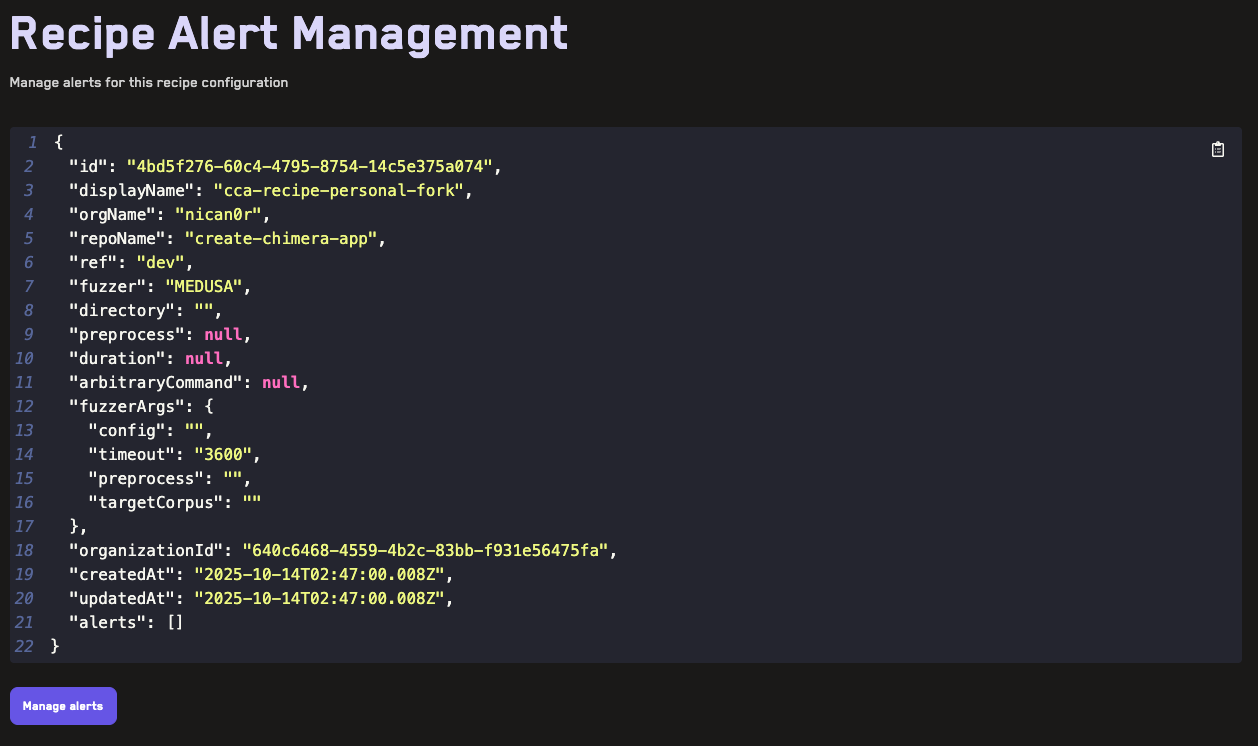

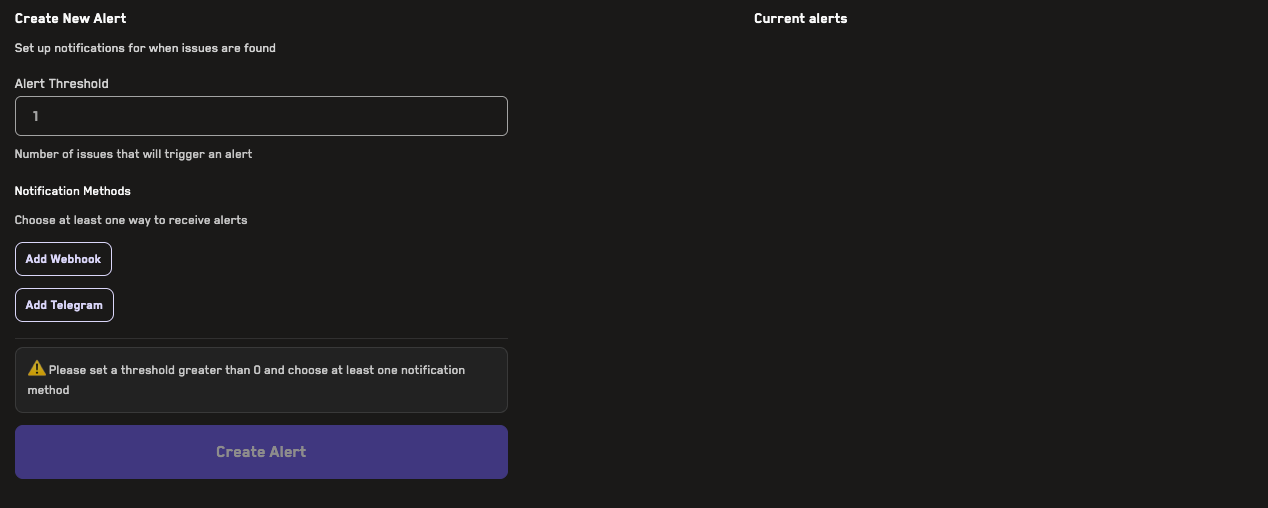

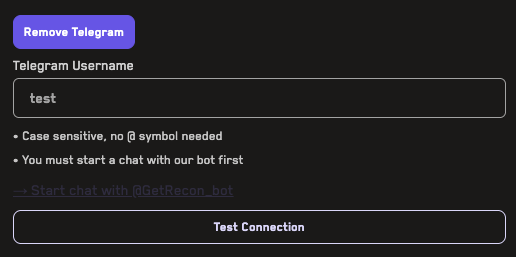

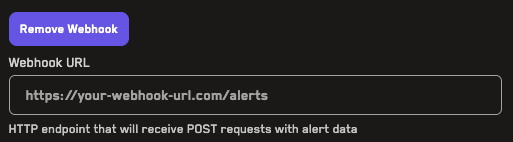

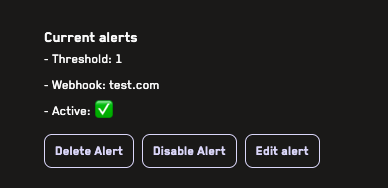

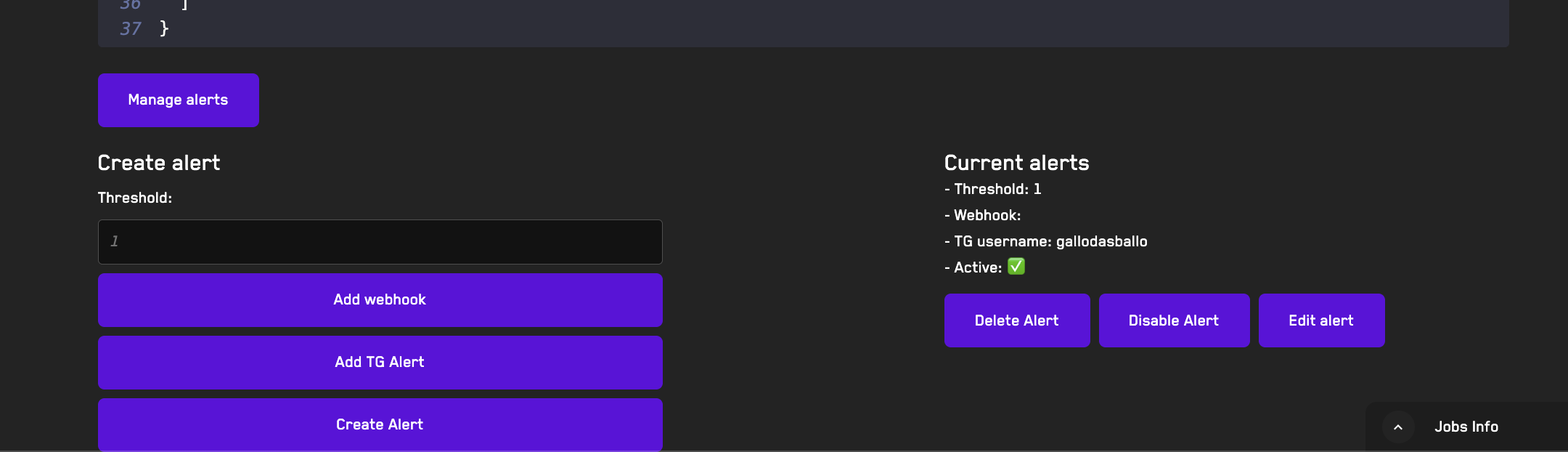

Adding Alerts

Learn how to add alerts for jobs run on the Recon cloud runner that can notify you of broken properties via Telegram or webhook.

Dynamic Replacement

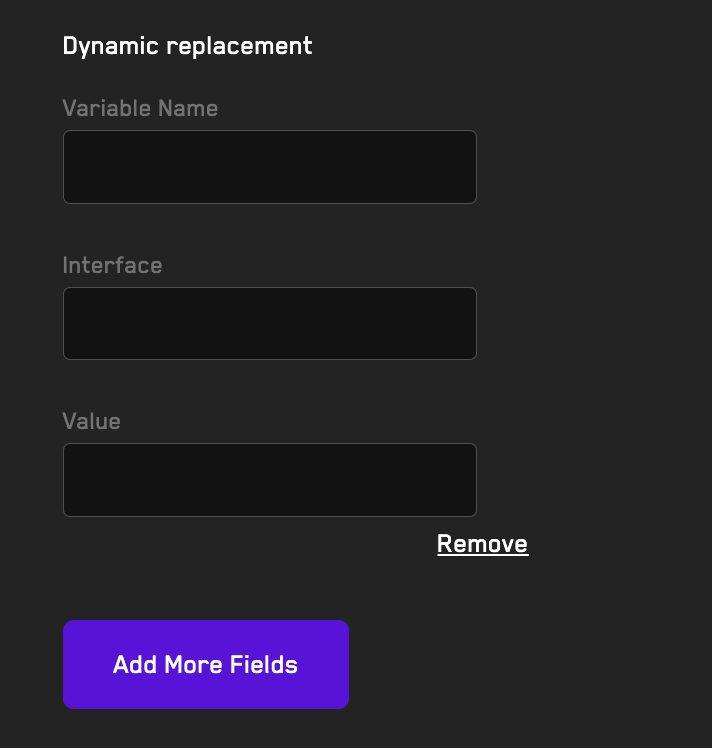

You can test your existing invariant suite with different setup configurations without having to modify the existing setup using dynamic replacement.

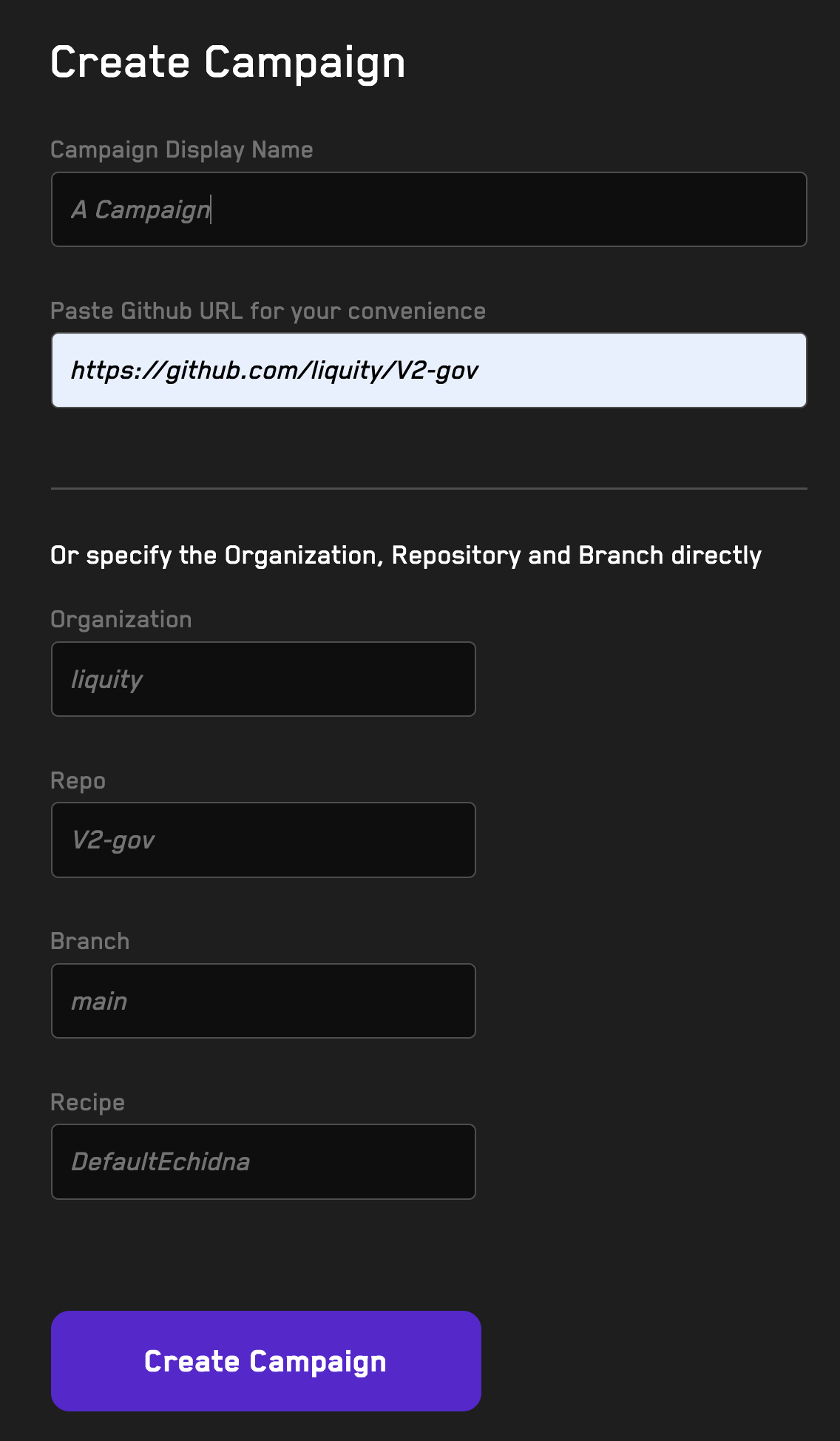

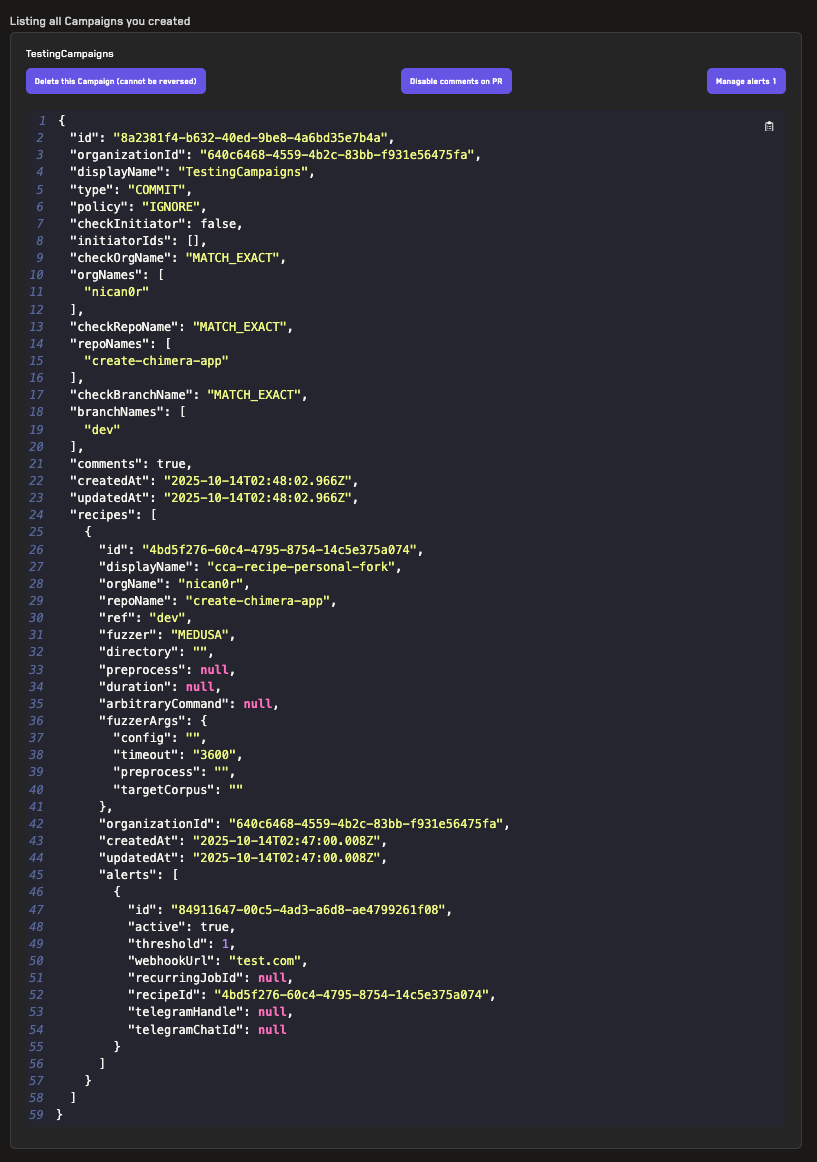

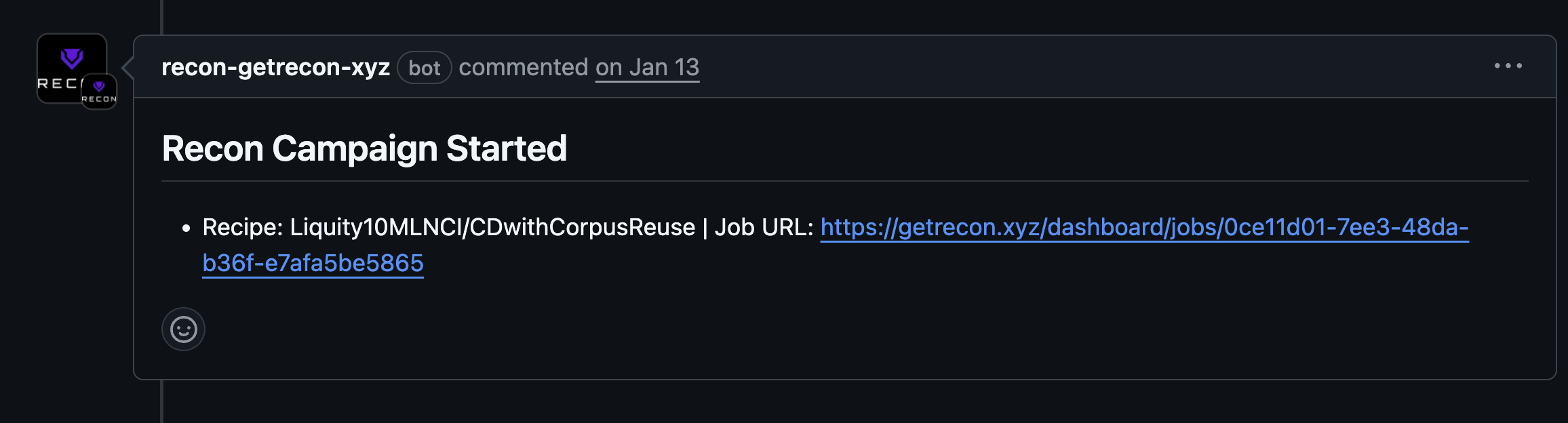

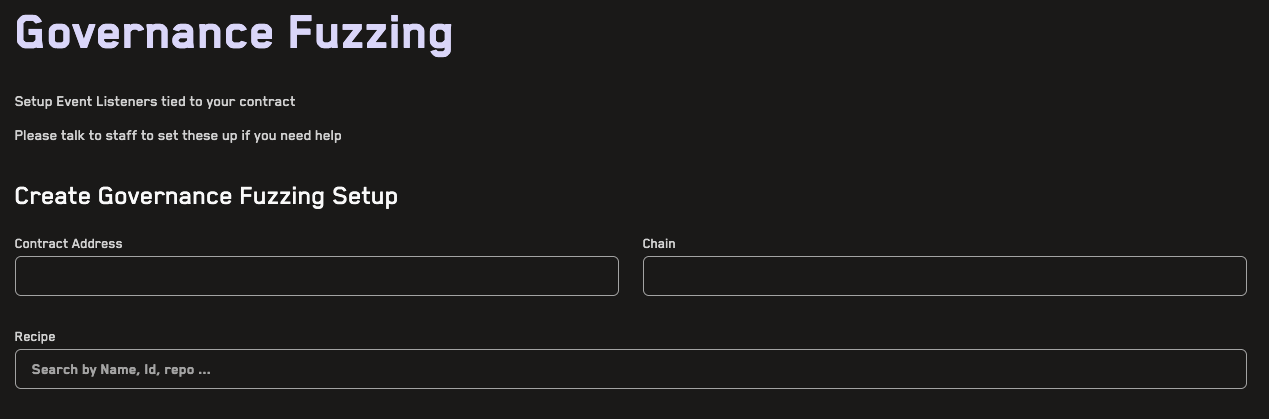

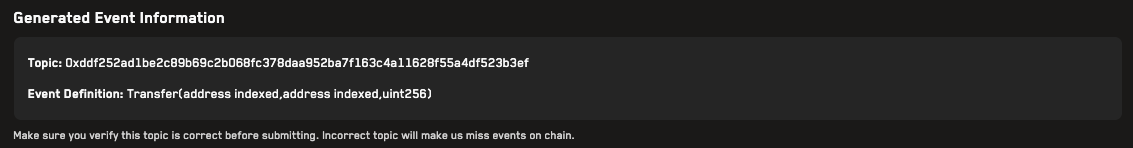

Governance Fuzzing

Simulate on-chain changes that modify the system state (function calls) or system configuration (governance function calls) in a forked testing environment so you can preview changes and their side effects before they happen on-chain using governance fuzzing.

Useful Tips

Learn how to make the most of Recon's features so that you can fuzz more effectively.

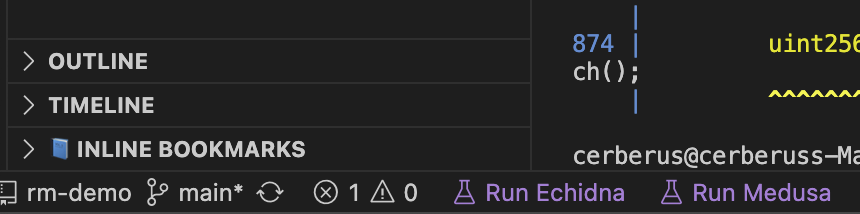

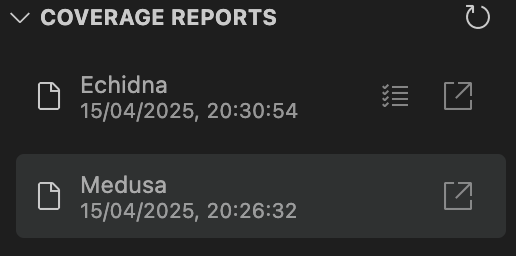

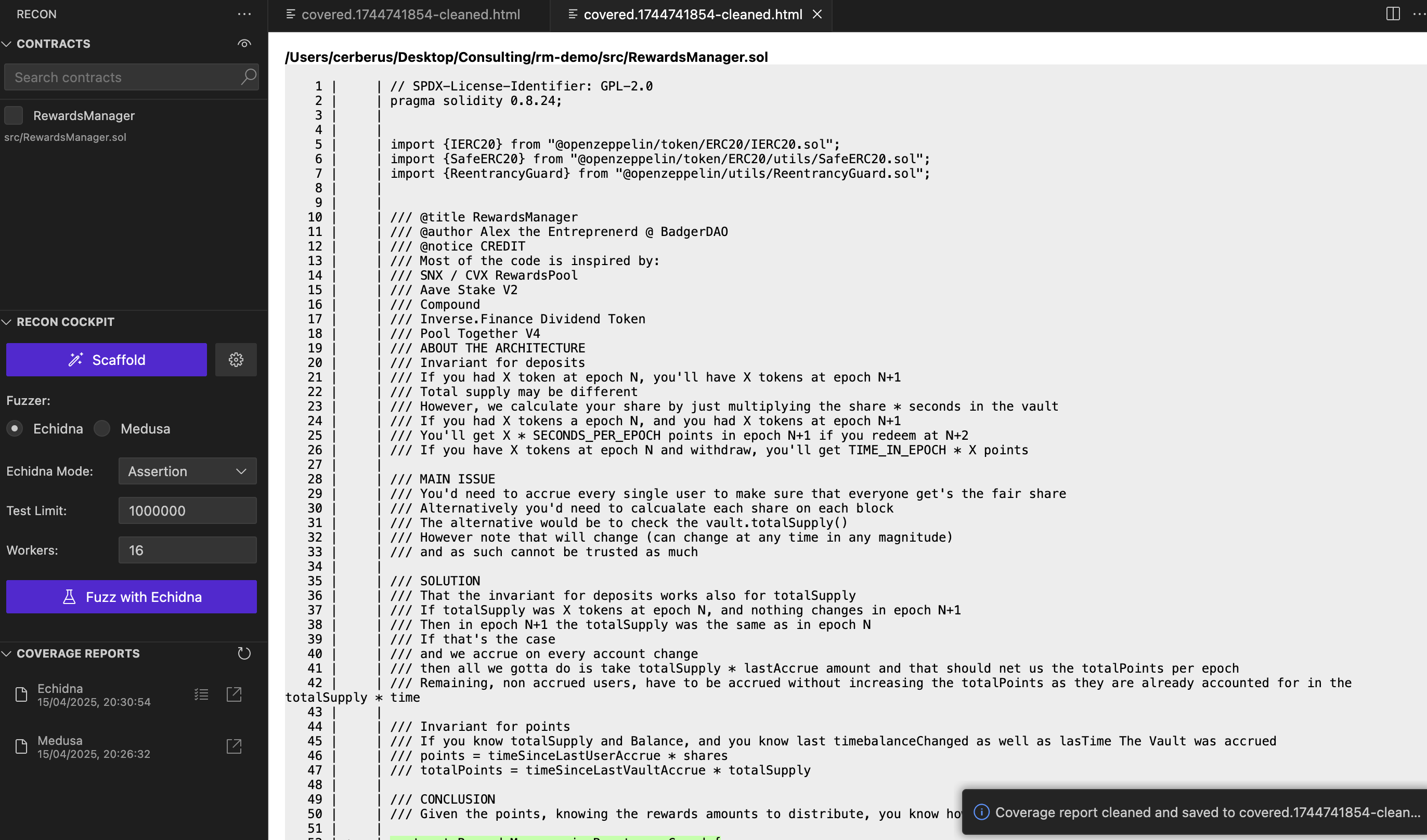

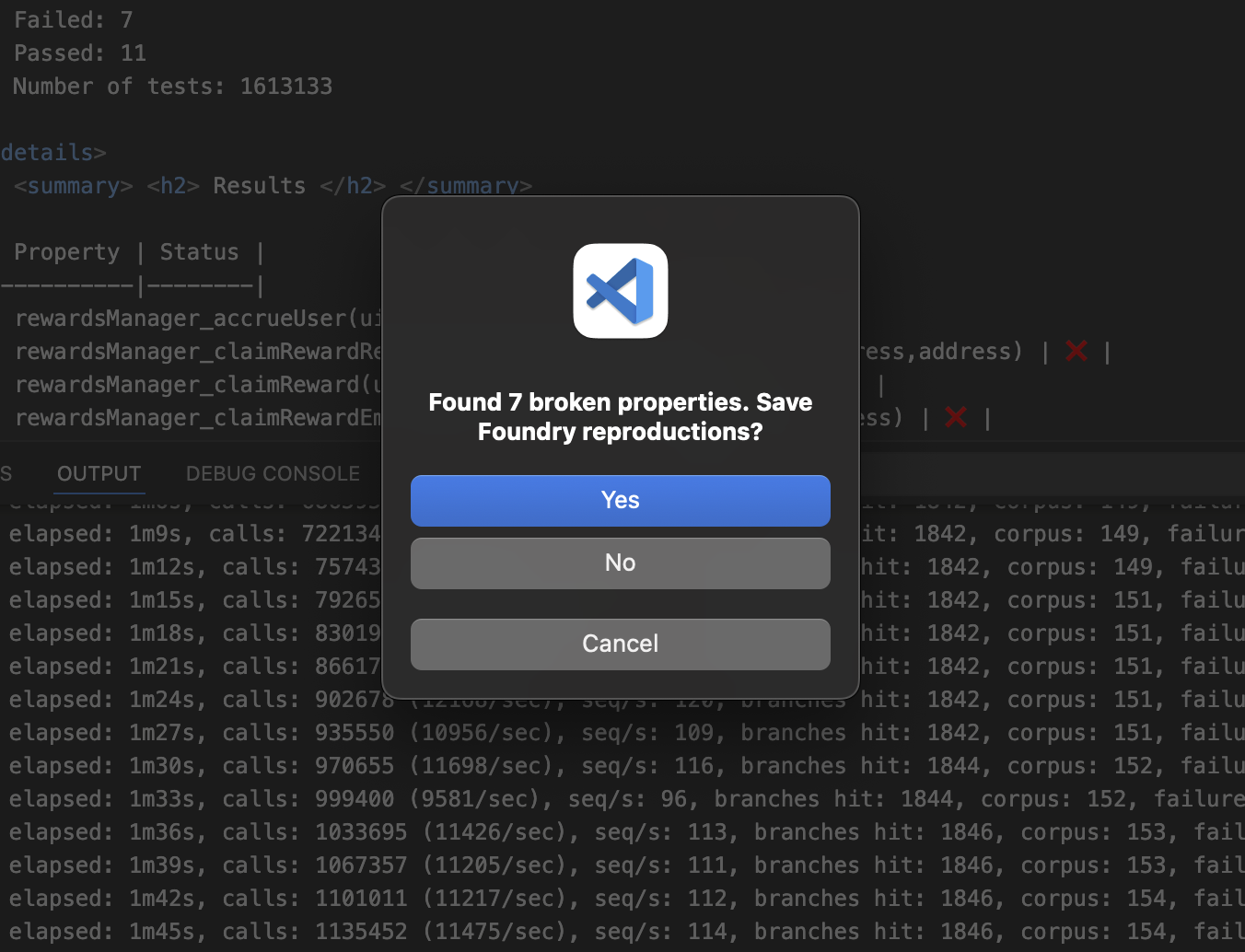

Recon Extension

The Visual Studio Code extension that combines our most useful fuzzing tools into one so you can get to fuzzing faster. Learn how to use it here.

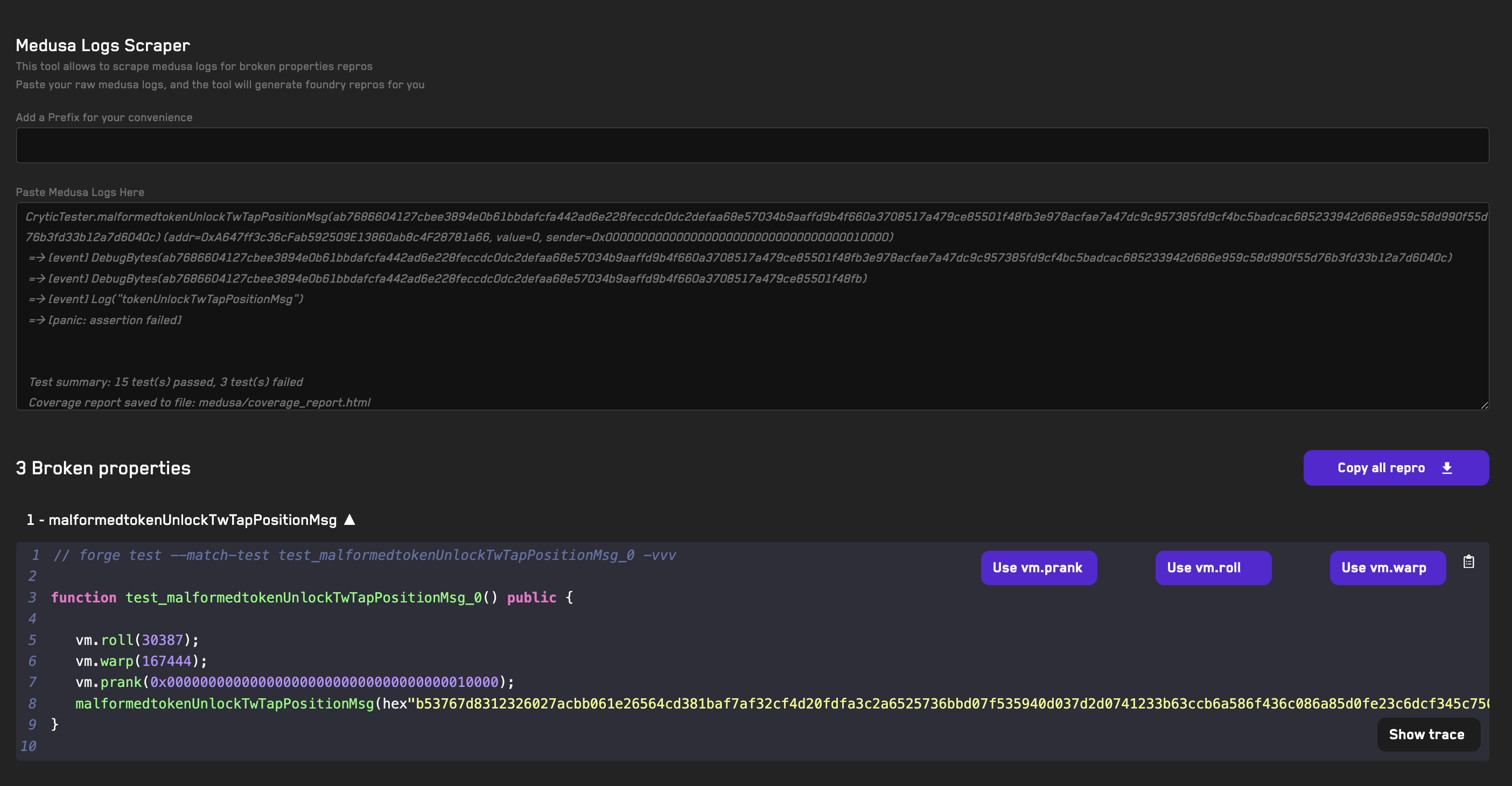

Recon Tools

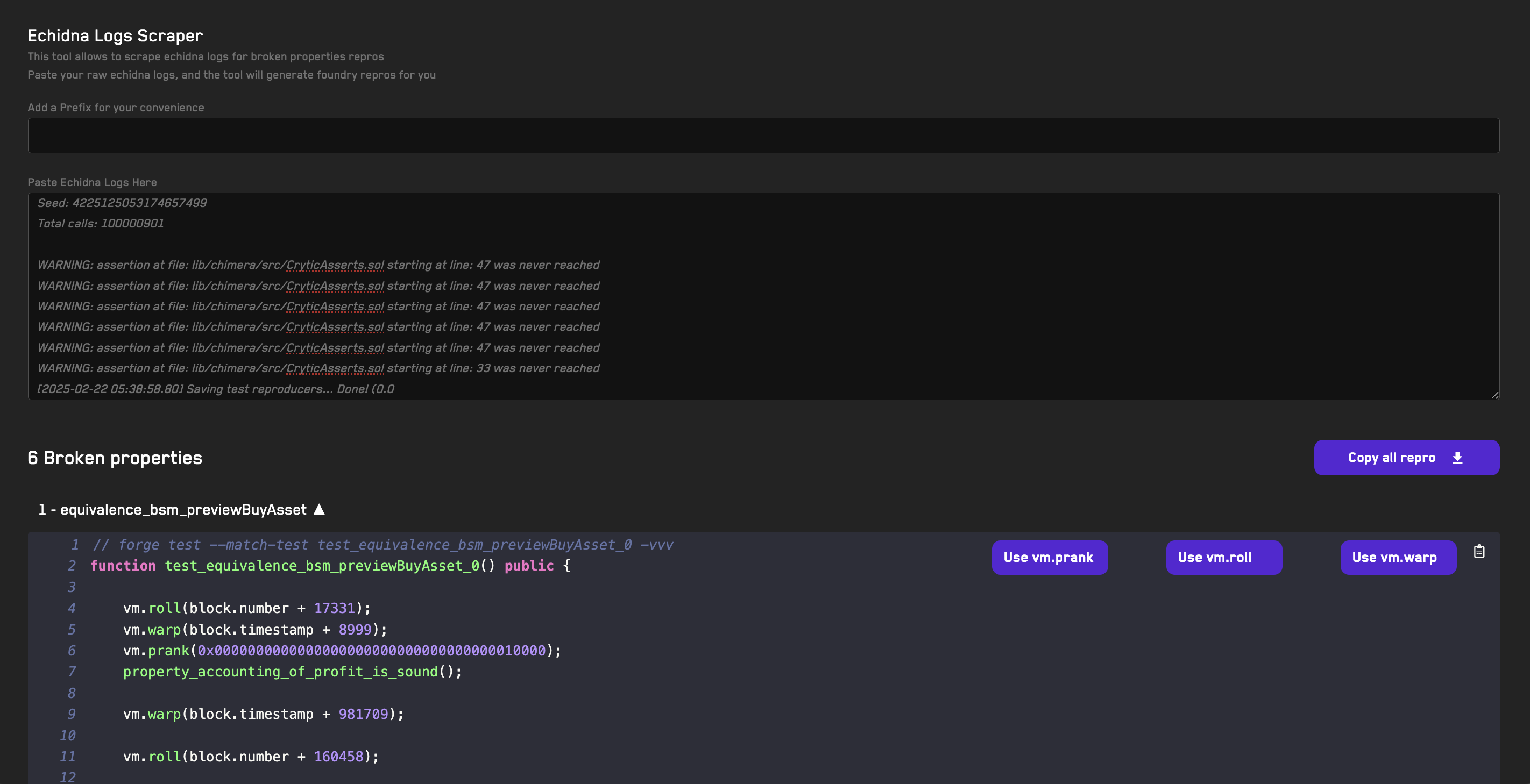

Recon's free tools can help you turn fuzzer logs into Foundry reproducers (Echidna/Medusa).

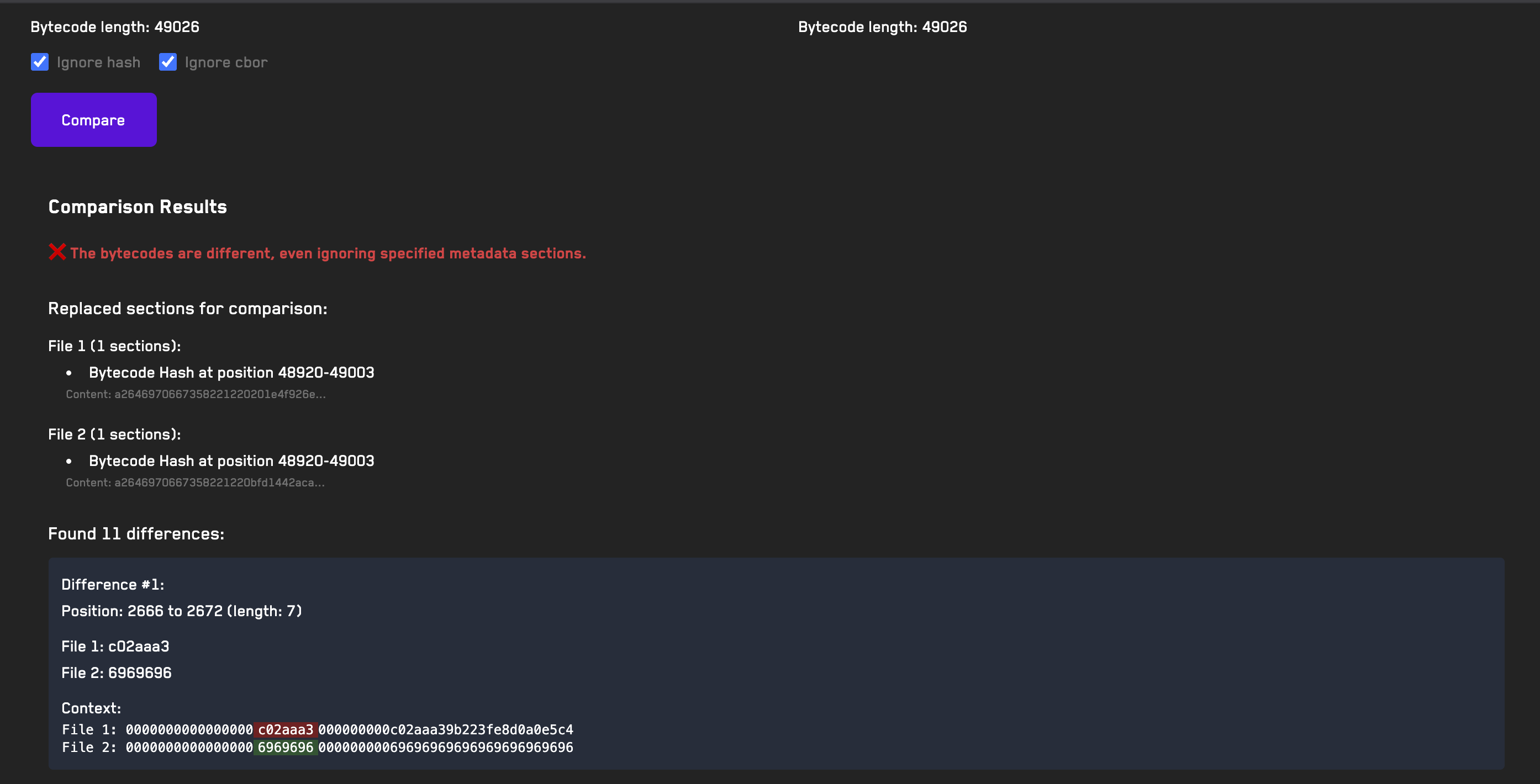

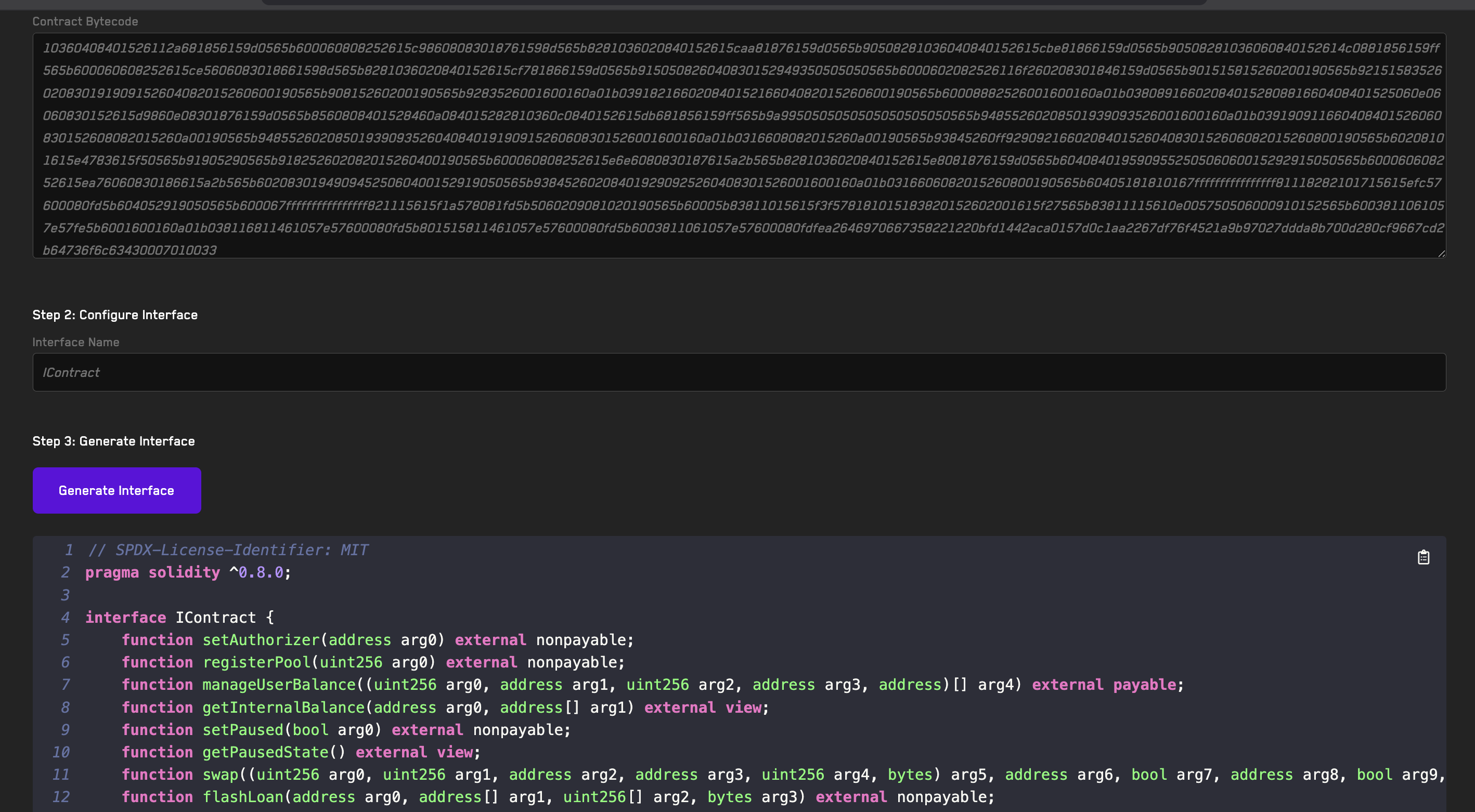

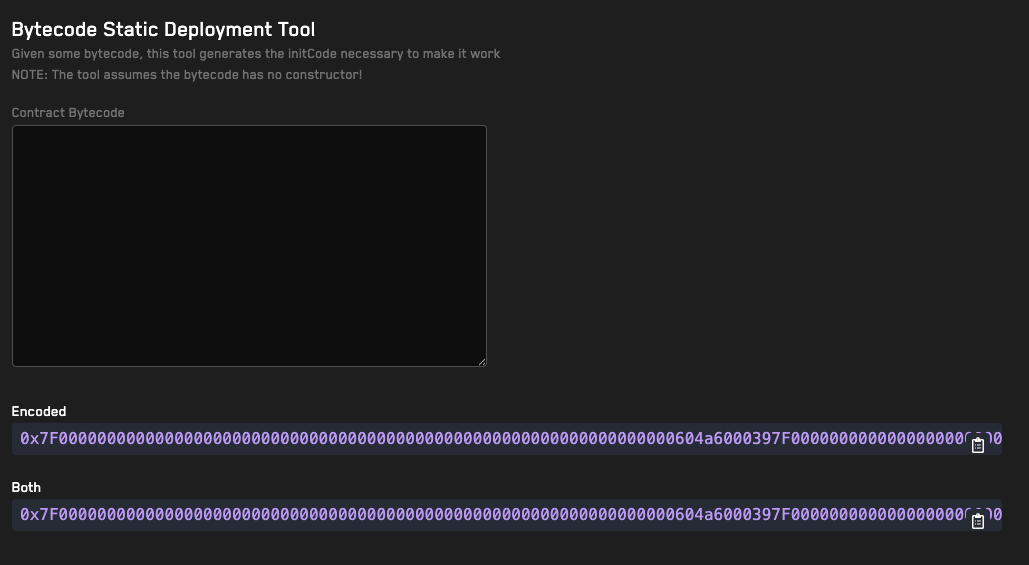

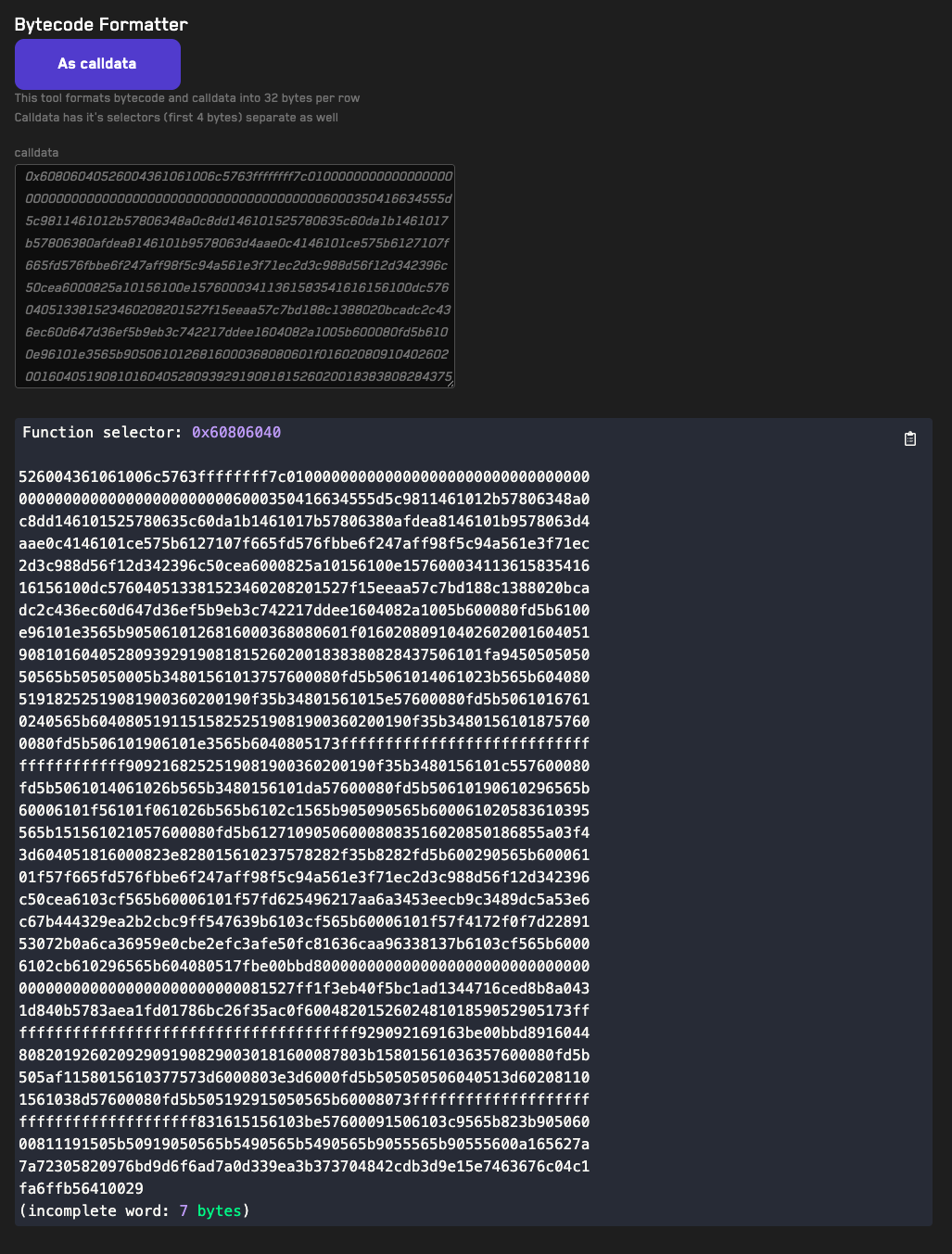

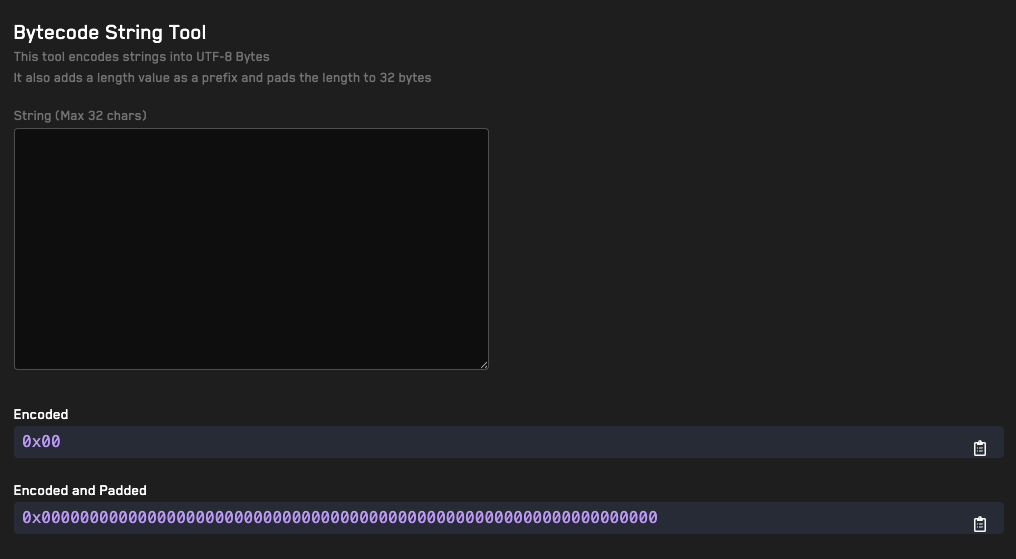

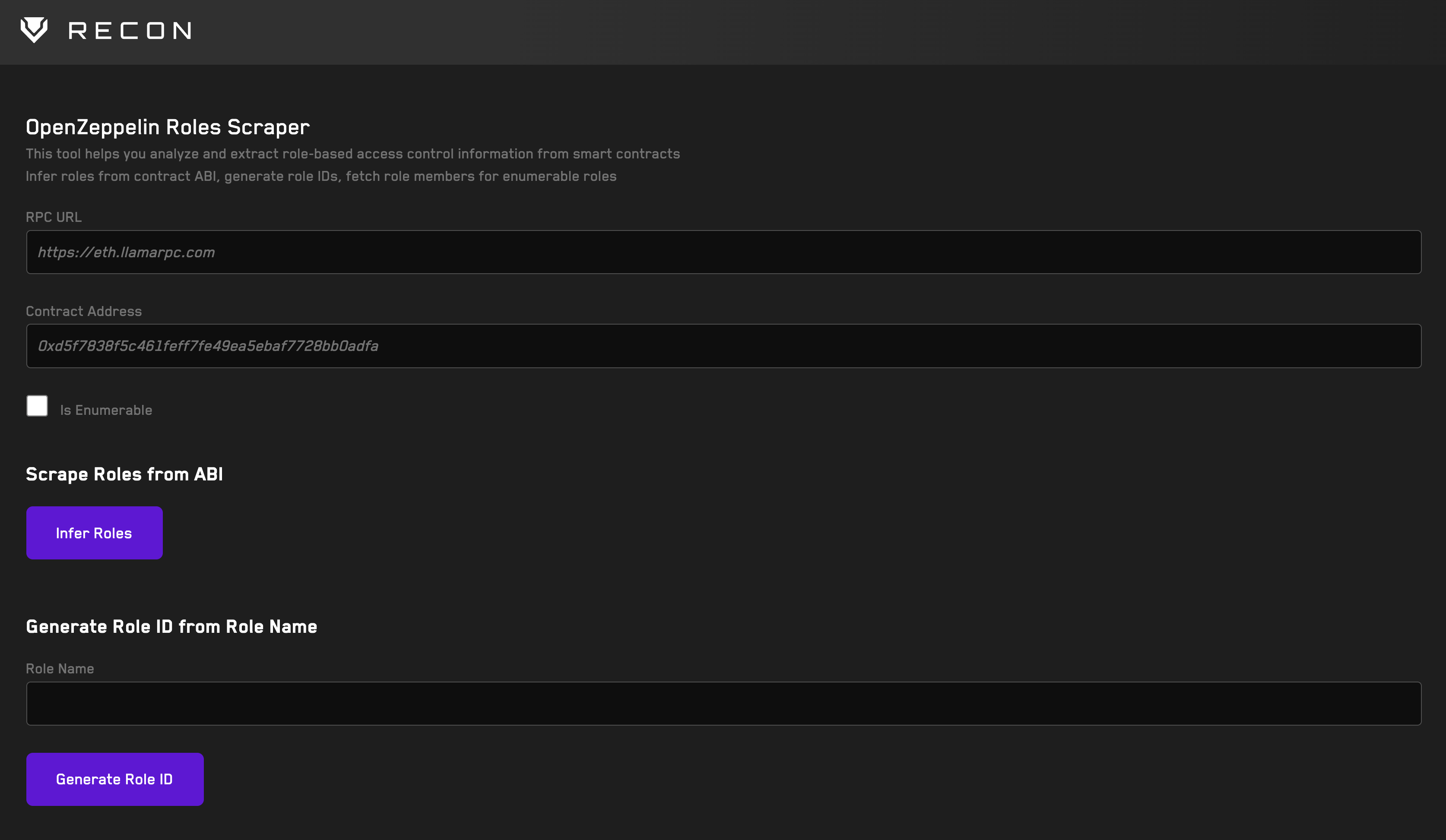

Our bytecode tools can help you compare the bytecode of two different contracts and generate an interface for a given contract's bytecode.

Video Tutorials:

- All Recon Tools (1min)

- Bytecode Tools (6min)

- Storage & Governance Tools (3min)

- Economic Tools (4min)

Open Source Contributions

Recon has created a number of open source tools to make invariant testing easier. These can be found in the OSS Repos section.

OpSec

Learn about best practices for operational security for web3 teams and the services that Recon provides to help projects with this.

Glossary

See the glossary for terminology used throughout the book.

Learn Invariant Testing

Most of our guides and documentation are focused on using fuzzing tools, primarily Echidna and Medusa because we use them internally at Recon. However, we also support running other tools on our cloud infrastructure such as Foundry (fuzzing), Halmos (formal verification), and Kontrol (formal verification).

After having chosen a tool best suited to your needs and downloading it locally, you can get started with the tutorials below.

If you're new to invariant testing, we recommend starting with the following series of posts to get you from 0 to 1:

- First Day At Invariant School

- How To Define Invariants

- Implementing Your First Smart Contract Invariants: A Practical Guide

If you prefer a full end-to-end bootcamp, checkout the written version here based on the livestreamed series here for everything you need to know about invariant testing.

Once you've grasped the basics of invariant testing you can setup your first suite to test with a fuzzing tool. For a step-by-step guide on how to do this, check out the example project.

If you have any questions about how to use Recon or invariant testing in general, you can reach out to our team on Discord.

Additional Learning Resources

Invariant Testing In General

If you're looking for more resources to help you get started with invariant testing, see the following:

Fuzzers

For more resources on our favorite fuzzers (Echidna and Medusa) see the following:

Retrospectives

Deep dives into the work we've done with our elite customers with tips and tricks on building effective invariant testing suites:

- Corn Retrospective

- eBTC Retrospective

- Centrifuge Retrospective part 1

- Centrifuge Retrospective part 2

Videos

Podcasts

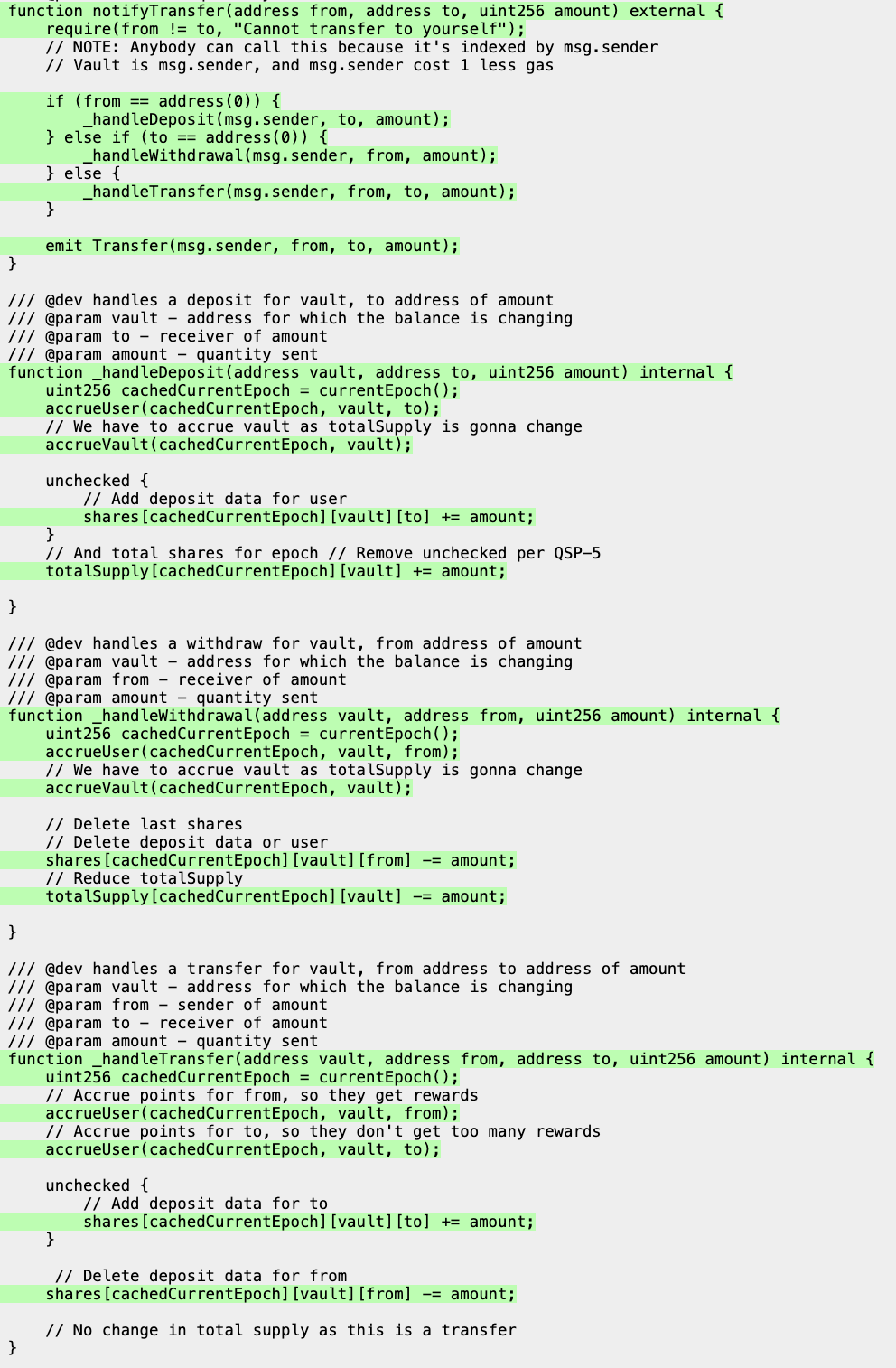

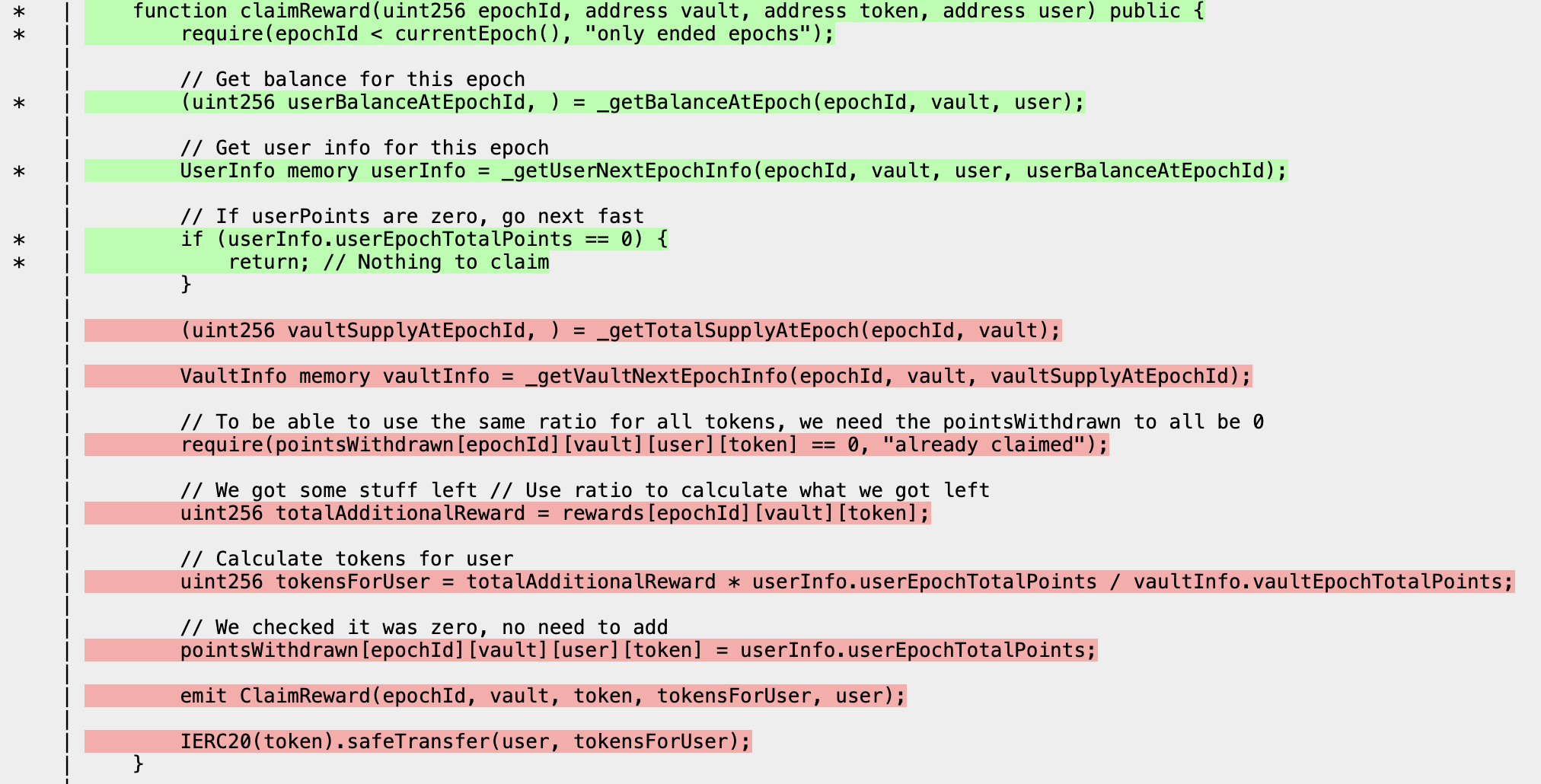

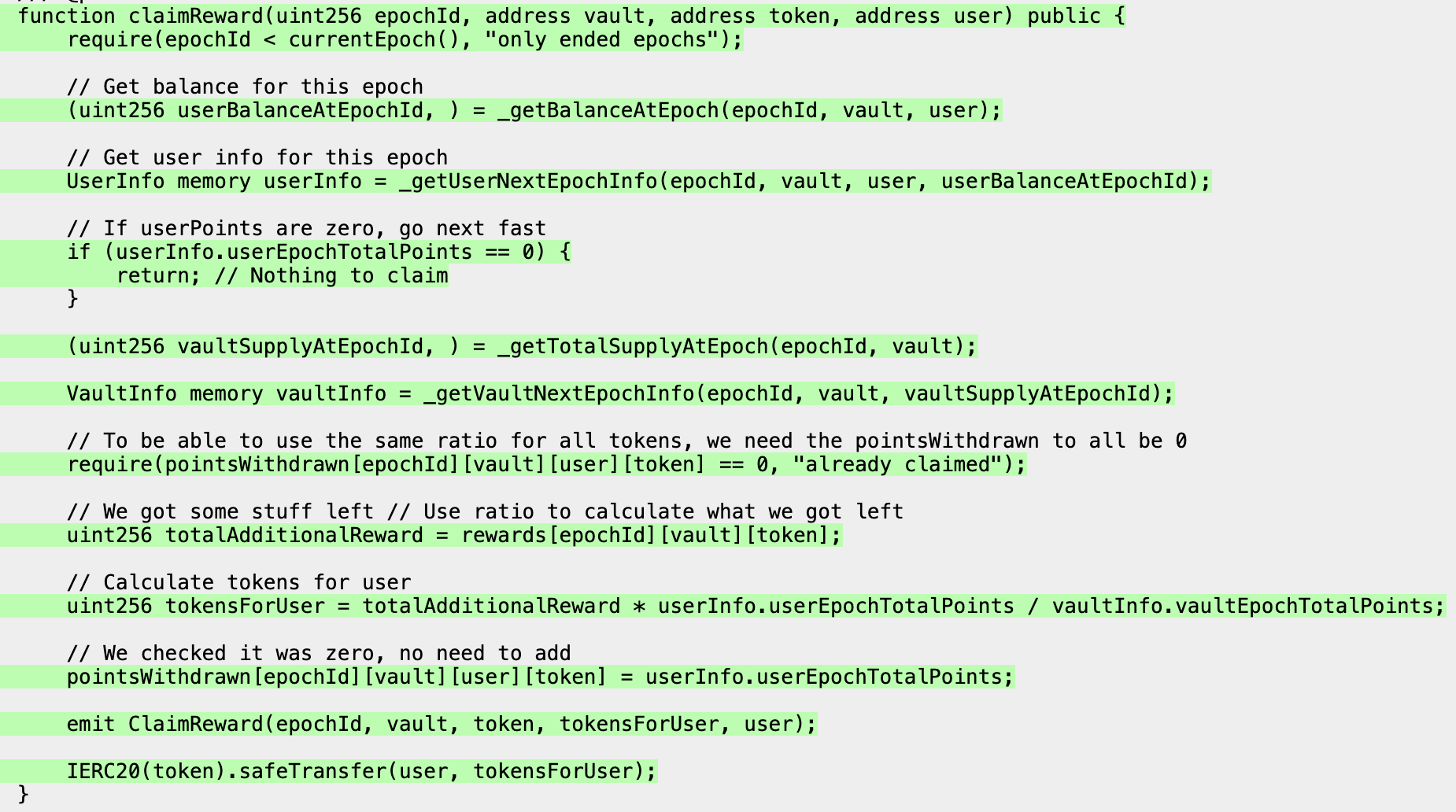

- Fuzzing a RewardsManager with the Recon Extension | Alex & the Remedy Team on Stateful Fuzzing for Security

- Workshop on how to go from a simple foundry test to a full blown critical exploit with fuzzing | Alex & Secureum on fuzzing for security research

- Fuzzing Sablier with the Recon Extension | Alex & Shafu on Invariant Testing

- Fuzzing MicroStable with Echidna | Alex & Shafu on Invariant Testing

- How can I run my Fuzz tests longer? Getrecon interview with Alex | Alex & Patrick Collins (Cyfrin Audits)

- Using Recon Pro to test invariants in the cloud | Alex & Austin Griffith

Office Hours

Office hours are live recordings of useful tips and tricks for invariant testing.

- Fuzz Fest | The best Talks of 2024 on Fuzzing for Security

- The Dangers of Arbitrary Calls | How to write safe contracts that use arbitrary calls and the risk tied to them

- Centrifuge Retrospective Pt1 | On scaffolding and getting to coverage

- Centrifuge Retrospective Pt2 | On breaking properties and the importance of understanding the system you're testing

- How we missed a bug with fuzzing! | On the dangers of clamping

- Finding bugs with Differential Fuzzing | Using differential fuzzing to find bugs

- Fuzzing Bytecode Directly

Trophies

A sample of some publicly disclosed bugs we've found using invariant testing. You can use these to understand what kinds of properties will help you find issues that manual review sometimes can't.

Example Project

In this section, we'll use the Create Chimera App template to create a simple contract and run invariant tests on it.

Prerequisites: the example shown below requires that you have Foundry and Medusa installed on your local machine

Getting started

Clone the create-chimera-app-no-boilerplate repo.

Or

Use forge init --template https://github.com/Recon-Fuzz/create-chimera-app-no-boilerplate

Writing the contract

First, in the src/ directory we'll create a simple Points contract that allows users to make a deposit and earn points proportional to the amount of time that they've deposited for, where longer deposits equal more points:

// SPDX-License-Identifier: UNLICENSED

pragma solidity ^0.8.13;

import {MockERC20} from "@recon/MockERC20.sol";

contract Points {

mapping (address => uint88) depositAmount;

mapping (address => uint256) depositTime;

uint256 totalDeposits;

MockERC20 public token;

constructor(address _token) {

token = MockERC20(_token);

}

function deposit(uint88 amt) external {

depositAmount[msg.sender] += amt;

depositTime[msg.sender] = block.timestamp;

totalDeposits += amt;

token.transferFrom(msg.sender, address(this), amt);

}

function power() external view returns (uint256) {

return depositAmount[msg.sender] * (block.timestamp - depositTime[msg.sender]);

}

}

Adding to Setup

Now with a contract that we can test, we can deploy it in the Setup contract:

abstract contract Setup is BaseSetup, ActorManager, AssetManager, Utils {

Points points;

/// === Setup === ///

function setup() internal virtual override {

_newAsset(18); // deploys an 18 decimal token

// Deploy Points contract

points = new Points(_getAsset()); // uses the asset deployed above and managed by the AssetManager

// Mints the deployed asset to all actors and sets max allowances for the points contract

address[] memory approvalArray = new address[](1);

approvalArray[0] = address(points);

_finalizeAssetDeployment(_getActors(), approvalArray, type(uint256).max);

}

/// === MODIFIERS === ///

modifier asAdmin {

vm.prank(address(this));

_;

}

modifier asActor {

vm.prank(address(_getActor()));

_;

}

}

The AssetManager allows us to deploy assets (using _newAsset) that we can use in our Points contract with simplified fetching of the currently set asset using _getAsset().

We then use the _finalizeAssetDeployment utility function provided by the AssetManager to approve the deployed asset for all actors (tracked in the ActorManager) to the Points contract.

Running the fuzzer

We can now run the fuzzer with no state exploration since we haven't added handler functions.

Before we run the fuzzer however we'll use Foundry to check that the project compiles correctly because it provides faster feedback on this than Medusa.

Running forge build we see that it compiles successfully, meaning the deployment in the Setup contract works as expected:

$ forge build

[⠊] Compiling...

[⠘] Compiling 44 files with Solc 0.8.28

[⠃] Solc 0.8.28 finished in 717.19ms

Compiler run successful!

We can now run the Medusa fuzzer using medusa fuzz, which gives us the following output:

medusa fuzz

⇾ Reading the configuration file at: /temp/example-recon/medusa.json

⇾ Compiling targets with crytic-compile

⇾ Running command:

crytic-compile . --export-format solc --foundry-compile-all

⇾ Finished compiling targets in 5s

⇾ No Slither cached results found at slither_results.json

⇾ Running Slither:

slither . --ignore-compile --print echidna --json -

⇾ Finished running Slither in 7s

⇾ Initializing corpus

⇾ Setting up test chain

⇾ Finished setting up test chain

⇾ Fuzzing with 16 workers

⇾ [NOT STARTED] Assertion Test: CryticTester.switch_asset(uint256)

⇾ [NOT STARTED] Assertion Test: CryticTester.add_new_asset(uint8)

⇾ fuzz: elapsed: 0s, calls: 0 (0/sec), seq/s: 0, branches hit: 289, corpus: 0, failures: 0/0, gas/s: 0

⇾ [NOT STARTED] Assertion Test: CryticTester.asset_approve(address,uint128)

⇾ [NOT STARTED] Assertion Test: CryticTester.asset_mint(address,uint128)

⇾ [NOT STARTED] Assertion Test: CryticTester.switchActor(uint256)

⇾ fuzz: elapsed: 3s, calls: 70172 (23389/sec), seq/s: 230, branches hit: 481, corpus: 126, failures: 0/692, gas/s: 8560148887

⇾ fuzz: elapsed: 6s, calls: 141341 (236

You can now stop medusa with CTRL + C.

At this point, we expect almost no lines to be covered (indicated by the low corpus value in the console logs). We can note however that because the corpus value is nonzero, something is being covered, in our case this is the exposed functions in the ManagerTargets which provide handlers for the functions in the AssetManager and ActorManager.

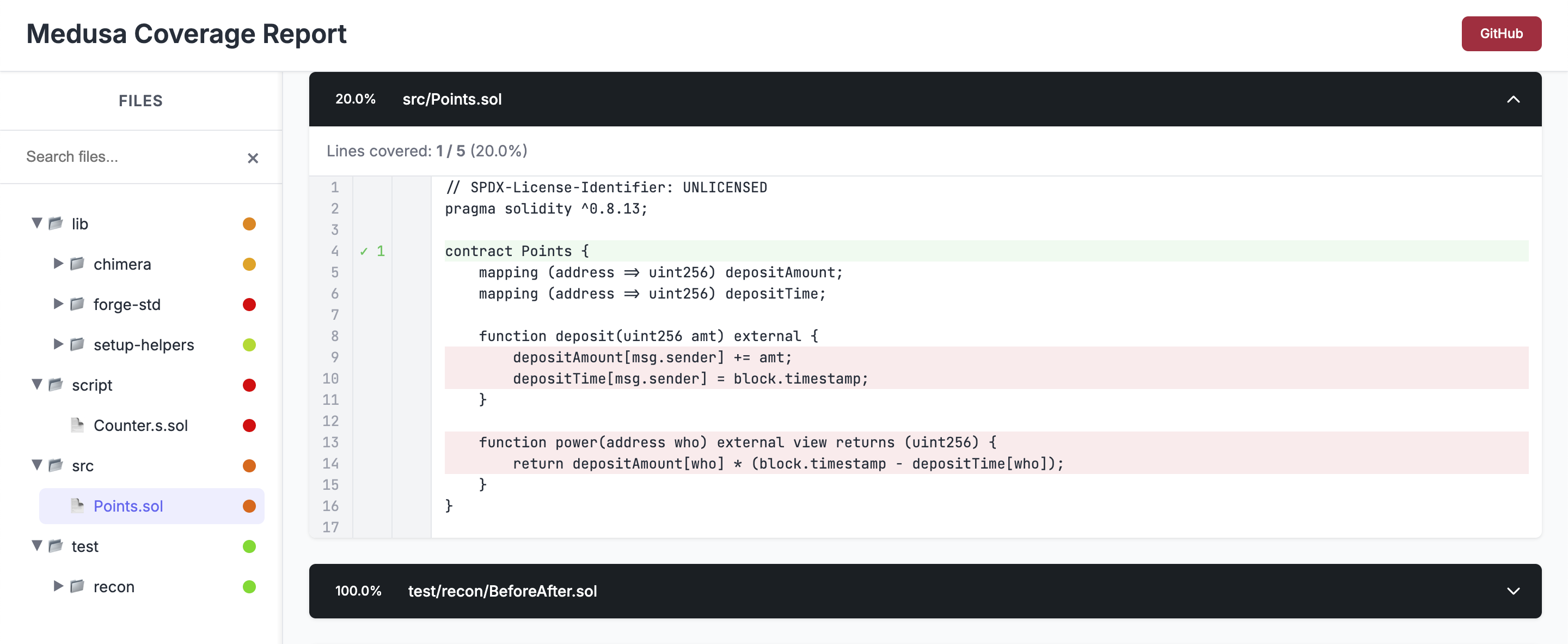

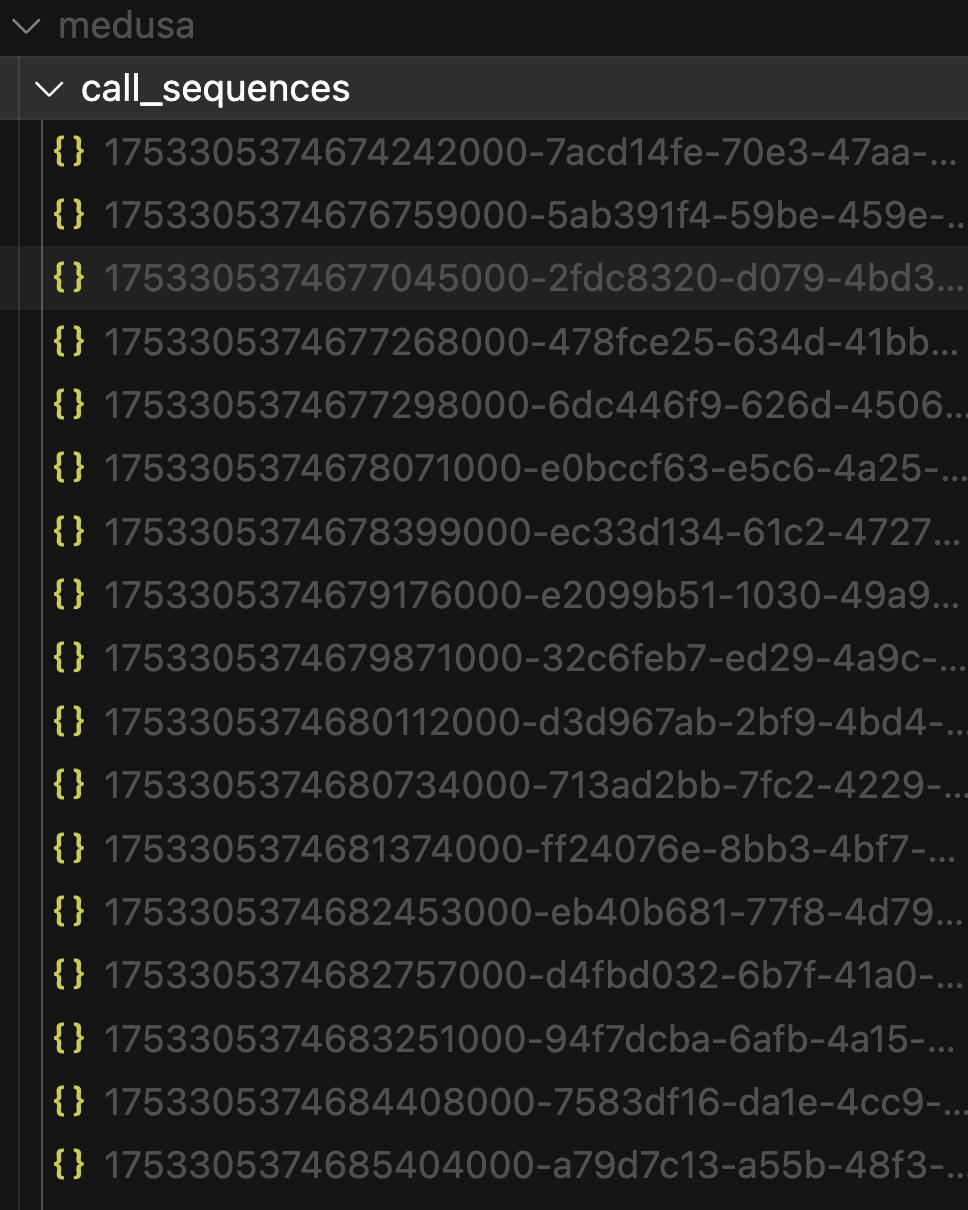

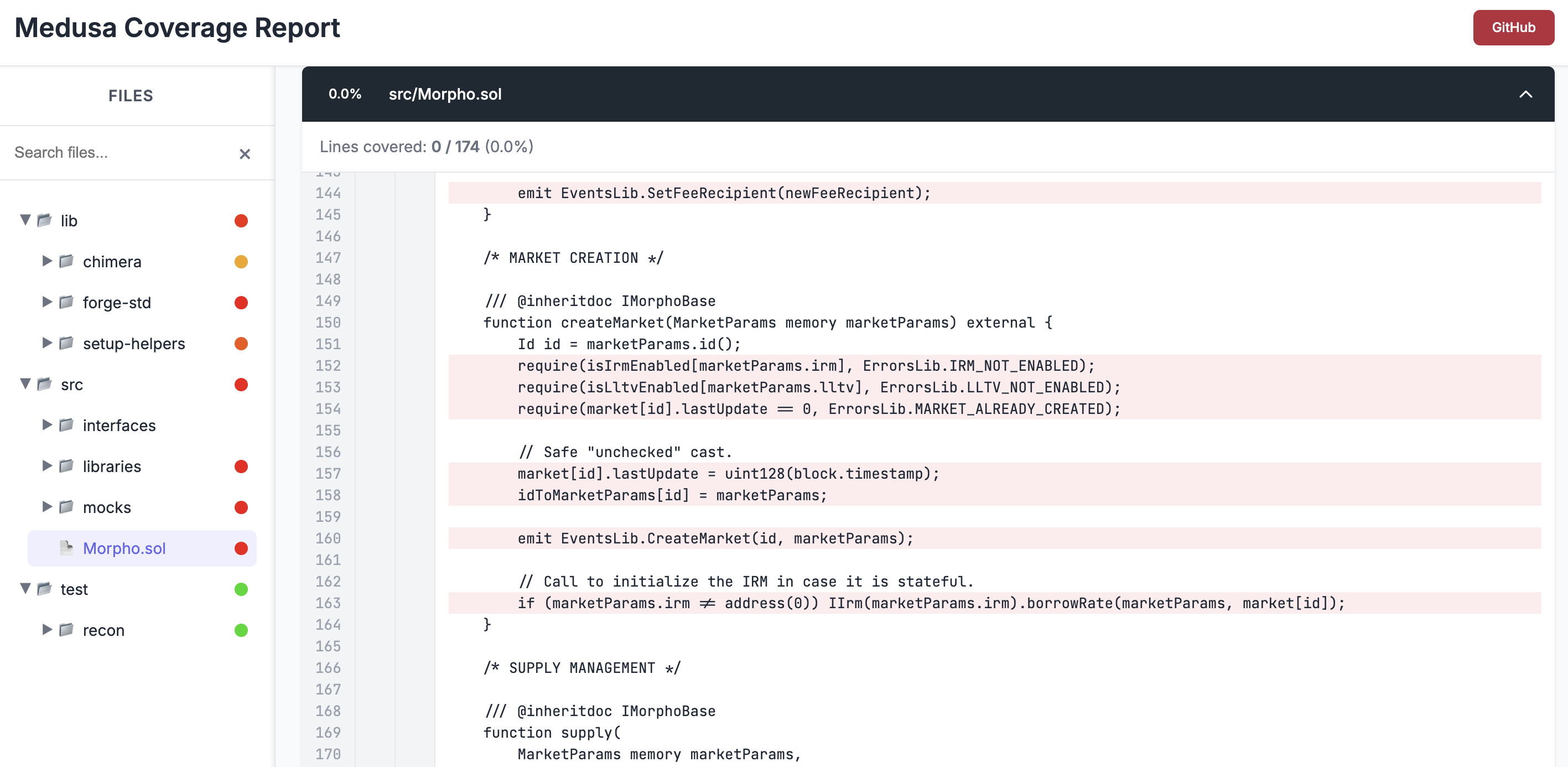

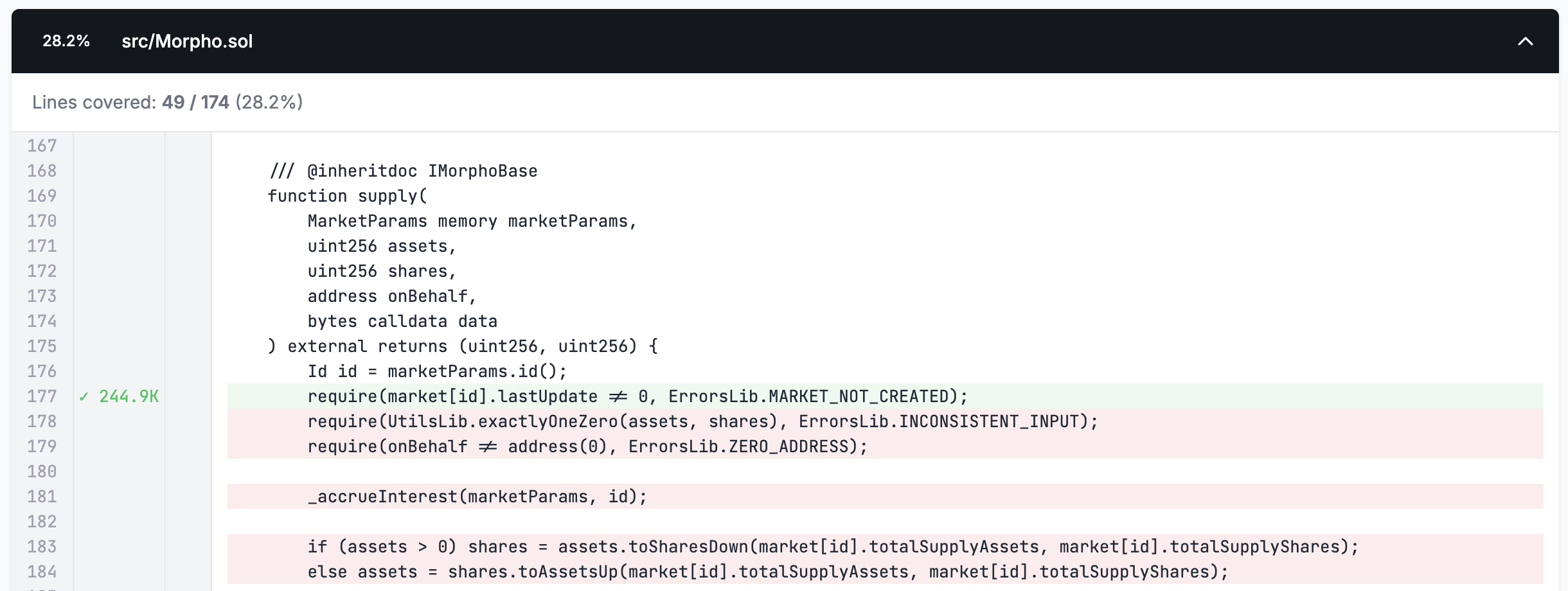

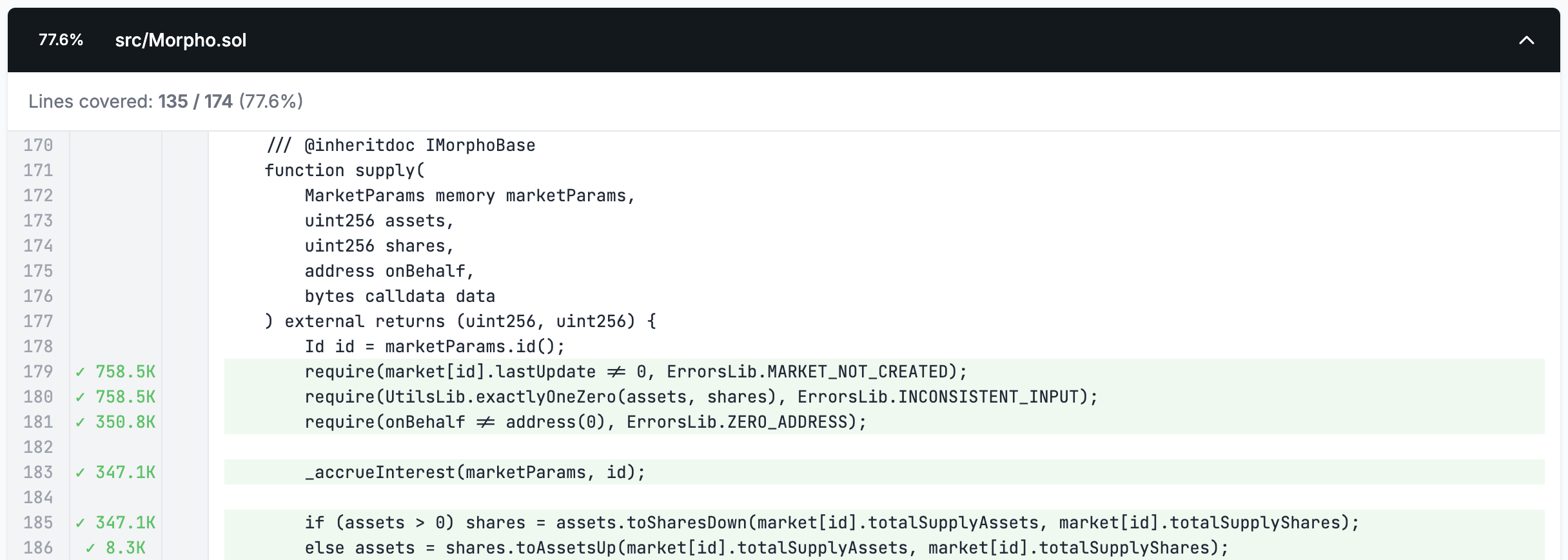

We can now open the coverage report located at /medusa/coverage/coverage_report.html to confirm that none of the lines in the Points contract are actually being covered.

In our coverage report a line highlighted in green means the line was reached by the fuzzer, a line highlighted in red means the line was not.

Let's rectify the lack of coverage in our Points contract by adding target function handlers.

Building target functions

Foundry produces an /out folder any time you compile your project which contains the ABI of the Points contract.

We'll use this in conjunction with our Invariants builder to quickly generate target function handlers for our TargetFunctions contract using the following steps:

- Open

out/Points.sol/Points.json - Copy the entire contents

- Navigate to the Invariants Builder

- Paste the ABI

- Rename the contract to

pointsreplacing the text in the "Your_contract" form field

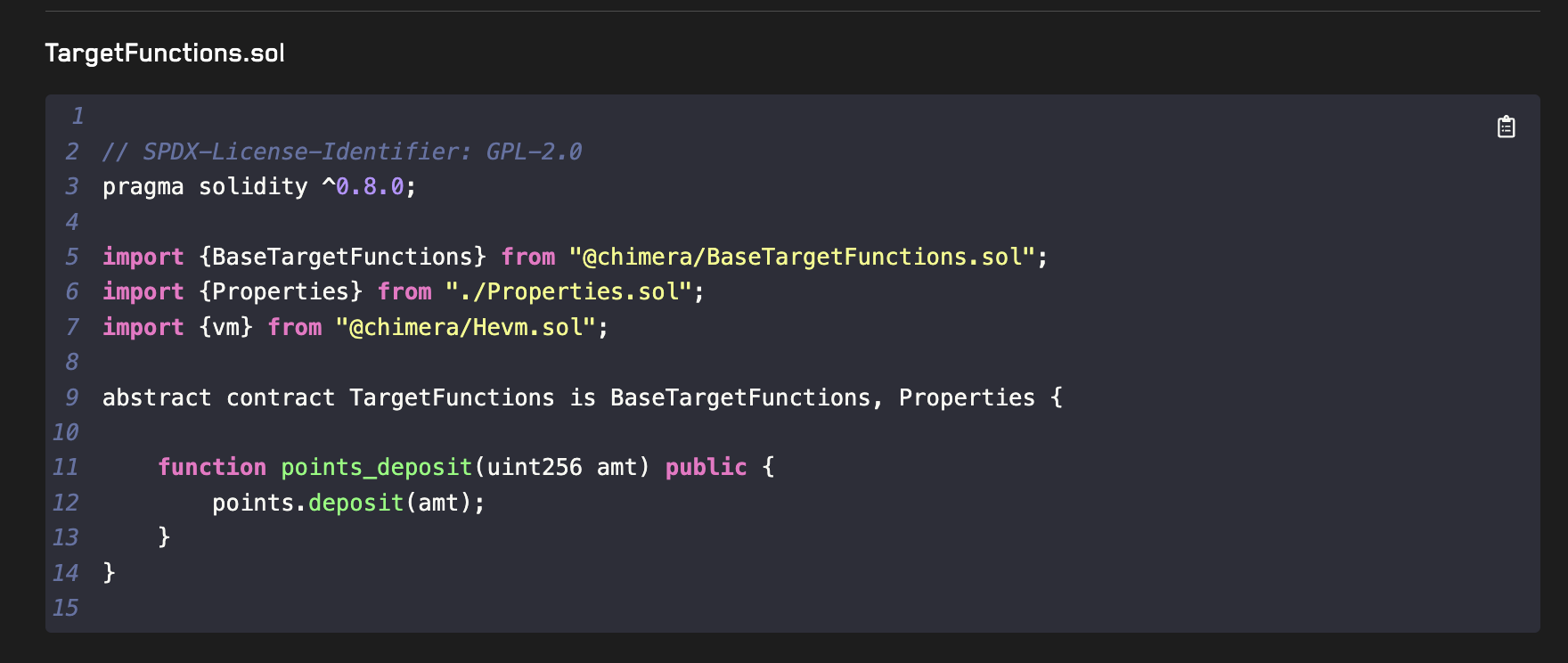

This generates a TargetFunctions contract for Points. In our case we'll first just add the handler created for the deposit function:

In this case you can just copy the points_deposit handler into your TargetFunctions.sol contract. When working on a larger project however, you can use the Download All Files button to add multiple handlers directly into your project at once.

Make sure to add the updateGhosts and asActor modifiers to the points_deposit function if they are not present:

updateGhosts- will update all ghost variables before and after the call to the functionasActor- will ensure that the call is made by the currently active actor (returned by_getActor())

Your TargetFunctions contract should now look like:

abstract contract TargetFunctions is

AdminTargets,

DoomsdayTargets,

ManagersTargets

{

function points_deposit(uint88 amt) public updateGhosts asActor {

points.deposit(amt);

}

}

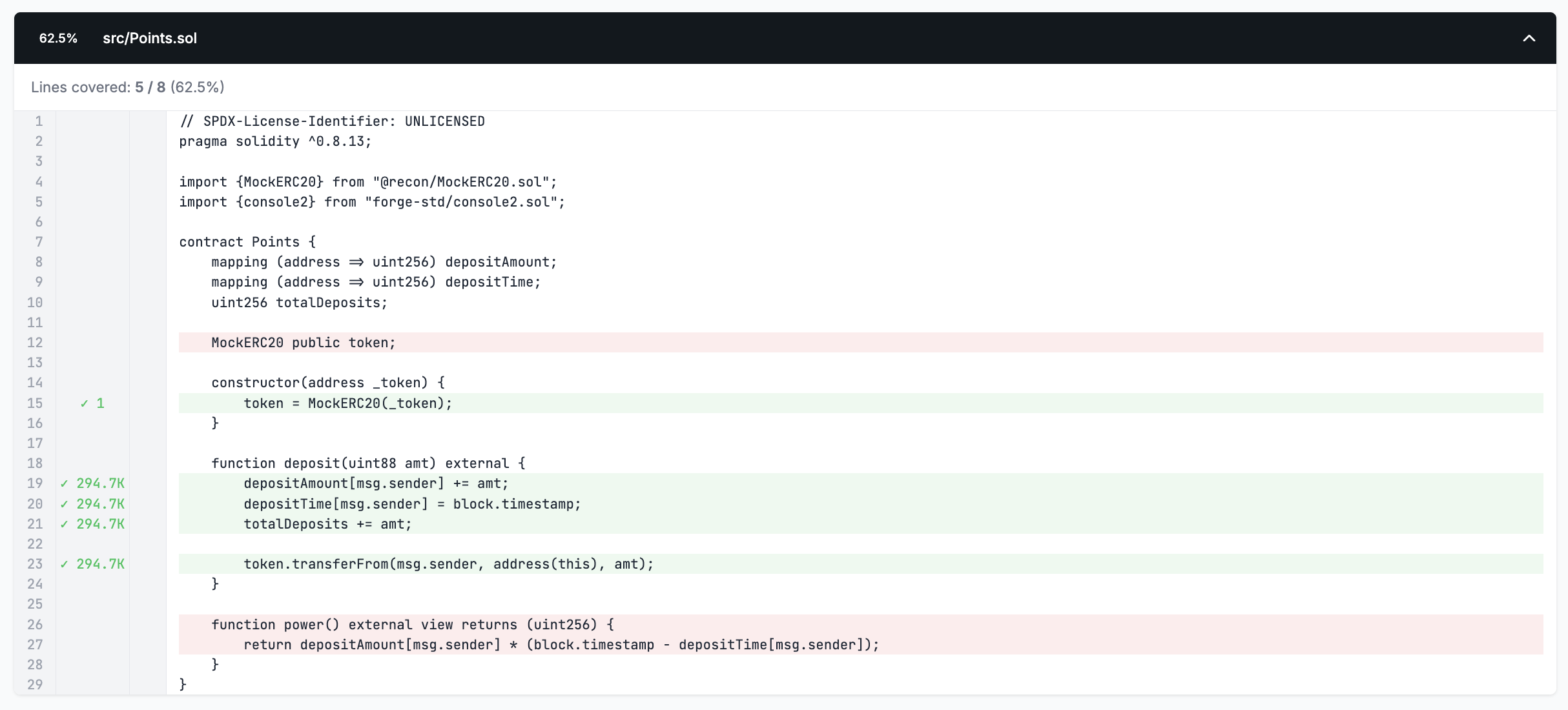

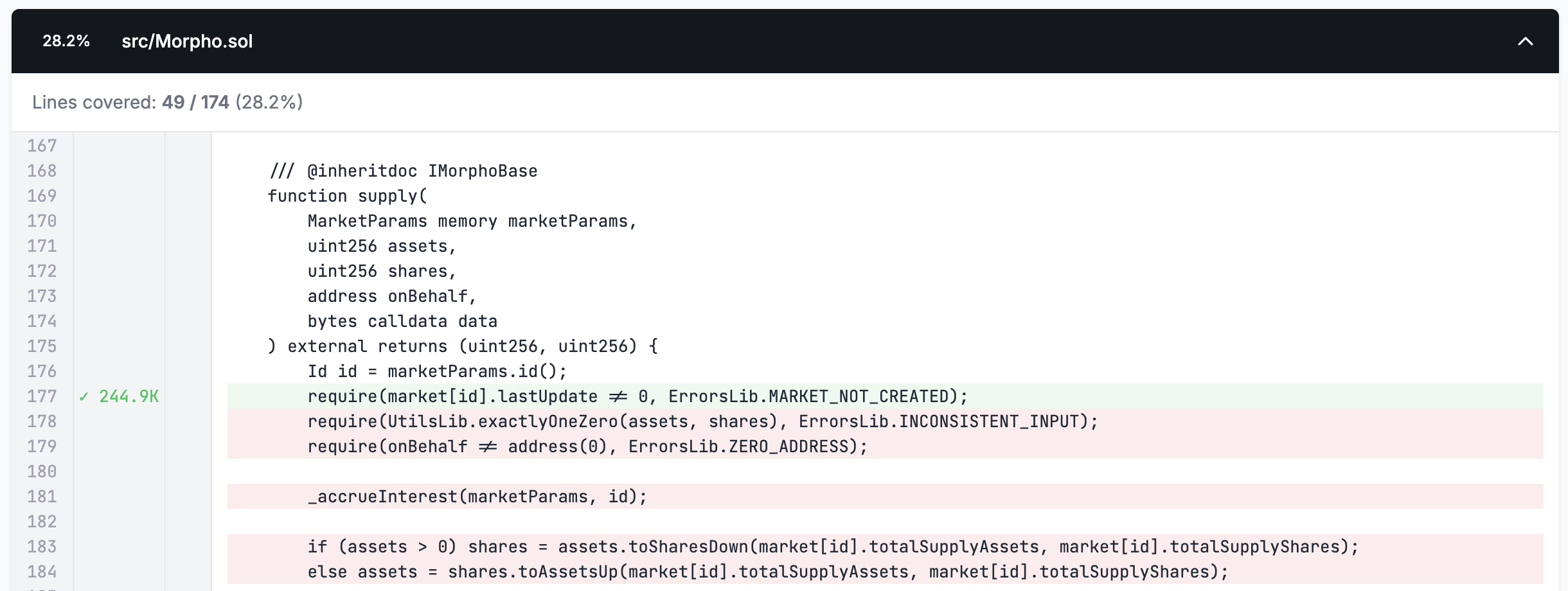

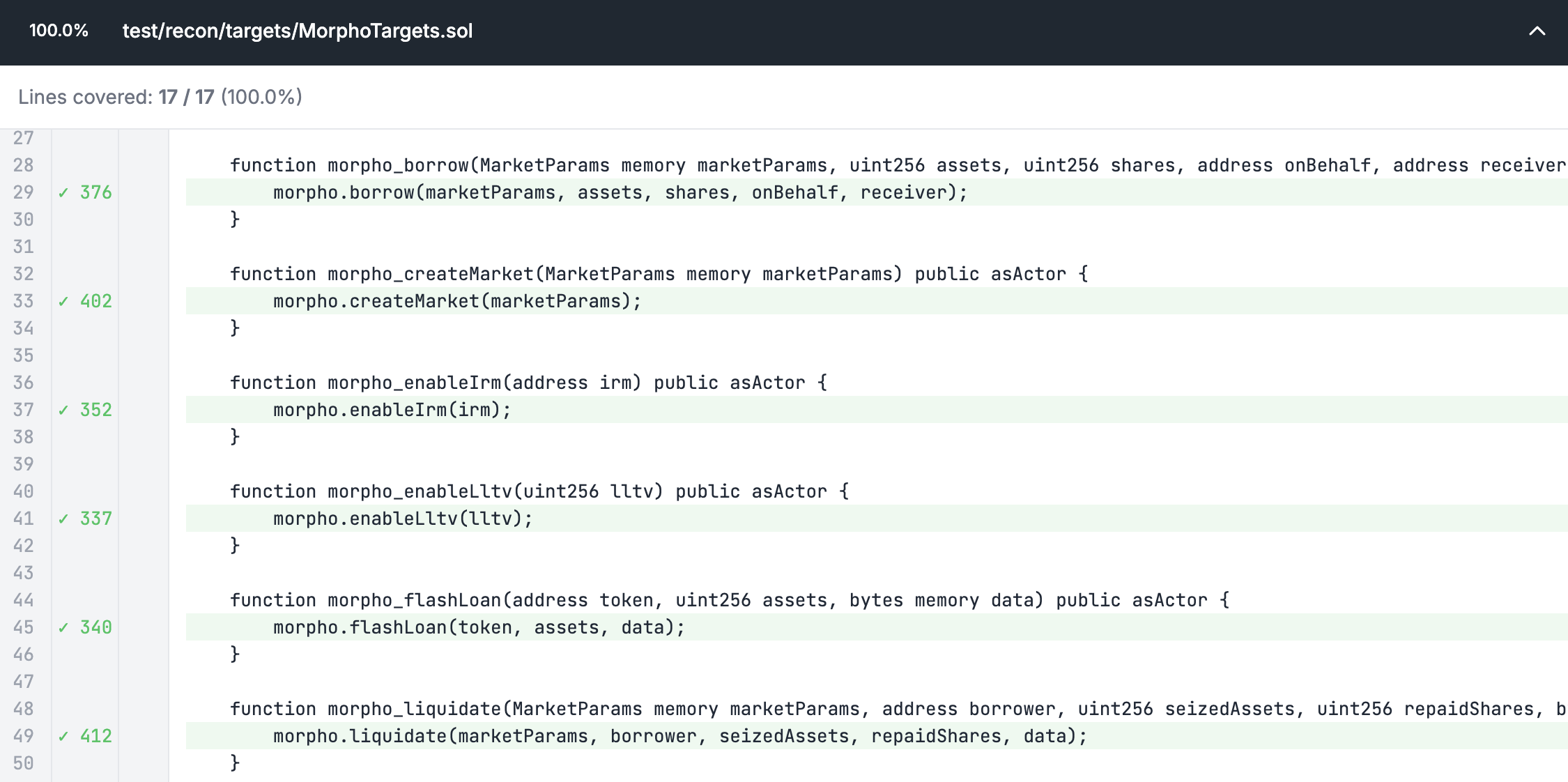

We can now run Medusa again to see how our newly added target function has changed our coverage.

We now see that the deposit function is fully covered, but the power function is not since we haven't added a handler for it. Since the power function is non-state-changing (indicated by the view decorator) we'll leave it without a handler for now as it won't affect our ability to test properties.

The coverage report is effectively our eyes into what the fuzzer is doing.

We can now start defining properties to see if there are any edge cases in our Points contract that we may not have expected.

Implementing Properties

Checking for overflow

A standard property we might want to check in our Points contract is that it doesn't revert due to overflow.

Reverts due to over/underflow are not detected by default in Medusa and Echidna, so to explicitly test for this we can use a try-catch block in our DoomsdayTargets contract (this contract is meant for us to define things that should never happen in the system):

...

import {Panic} from "@recon/Panic.sol";

abstract contract DoomsdayTargets is

BaseTargetFunctions,

Properties

{

/// Makes a handler have no side effects

/// The fuzzer will call this and because it reverts it will be removed from call sequences during shrinking

modifier stateless() {

_;

revert("stateless");

}

function doomsday_deposit_revert(uint88 amt) public stateless asActor {

try points.deposit(amt) {

// success

} catch (bytes memory err) {

expectedError = checkError(err, Panic.arithmeticPanic); // catches the specific revert we're interested in

t(!expectedError, "should never revert due to under/overflow");

}

}

}

We use the

checkErrorfunction from the Utils contract to allow us to check for a particular revert message. ThePaniclibrary allows us to easily check for an arithmetic panic in particular without having to specify the panic code (note that this needs to be added as an import in the above).

We use the stateless modifier so that state changes made by this function call aren't preserved because they make the same changes as the points_deposit function.

Having two handlers that make the same state changes makes it more difficult to debug when we have a broken call sequence because we can easily tell what the points_deposit function does but it's not as clear from the name what the doomsday_deposit_revert does. Having doomsday_deposit_revert be stateless ensures that it only gets executed as a test in a call sequence for specific behavior that should never happen.

This pattern is very useful if you want to perform extremely specific tests that would make your normal handlers unnecessarily complex.

Testing for monotonicity

We can say that the Points contract's power variable value should be monotonically increasing (always increasing) since there's no way to withdraw, which we can prove with a global property and ghost variables.

To keep things simple, we'll only test this property on the current actor (handled by the ActorManager) which we can fetch using _getActor().

Next we'll need a way to fetch the power for the deposited user before and after each call to our points_deposit target function using the BeforeAfter contract:

abstract contract BeforeAfter is Setup {

struct Vars {

uint256 power;

}

Vars internal _before;

Vars internal _after;

modifier updateGhosts {

__before();

_;

__after();

}

function __before() internal {

// reads value from state before the target function call

_before.power = points.power();

}

function __after() internal {

// reads value from state after the target function call

_after.power = points.power();

}

}

This will update the power value in the _before and _after struct when the updateGhosts modifier is called on the points_deposit handler.

Now that we can know the state of the system before our state changing call, we can specify the property in the Properties contract:

abstract contract Properties is BeforeAfter, Asserts {

function property_powerIsMonotonic() public {

gte(_after.power, _before.power, "property_powerIsMonotonic");

}

}

If we now run medusa fuzz we should get two broken properties!

Broken properties

Generating reproducers

After running the fuzzer you should see the following broken property in the console logs:

⇾ [FAILED] Assertion Test: CryticTester.doomsday_deposit_revert(uint88)

Test for method "CryticTester.doomsday_deposit_revert(uint88)" resulted in an assertion failure after the following call sequence:

[Call Sequence]

1) CryticTester.points_deposit(uint88)(235309800868430114045226835) (block=2, time=573348, gas=12500000, gasprice=1, value=0, sender=0x10000)

2) CryticTester.doomsday_deposit_revert(uint88)(102431335005787171573853953) (block=2, time=573348, gas=12500000, gasprice=1, value=0, sender=0x30000)

[Execution Trace]

=> [call] CryticTester.doomsday_deposit_revert(uint88)(102431335005787171573853953) (addr=0x7D8CB8F412B3ee9AC79558791333F41d2b1ccDAC, value=0, sender=0x30000)

=> [call] StdCheats.prank(address)(0x7D8CB8F412B3ee9AC79558791333F41d2b1ccDAC) (addr=0x7109709ECfa91a80626fF3989D68f67F5b1DD12D, value=0, sender=0x7D8CB8F412B3ee9AC79558791333F41d2b1ccDAC)

=> [return ()]

=> [call] Points.deposit(uint88)(102431335005787171573853953) (addr=0x6804A3FF6bcf551fACf1a66369a5f8802B3C9C58, value=0, sender=0x7D8CB8F412B3ee9AC79558791333F41d2b1ccDAC)

=> [panic: arithmetic underflow/overflow]

=> [event] Log("should never revert due to under/overflow")

=> [panic: assertion failed]

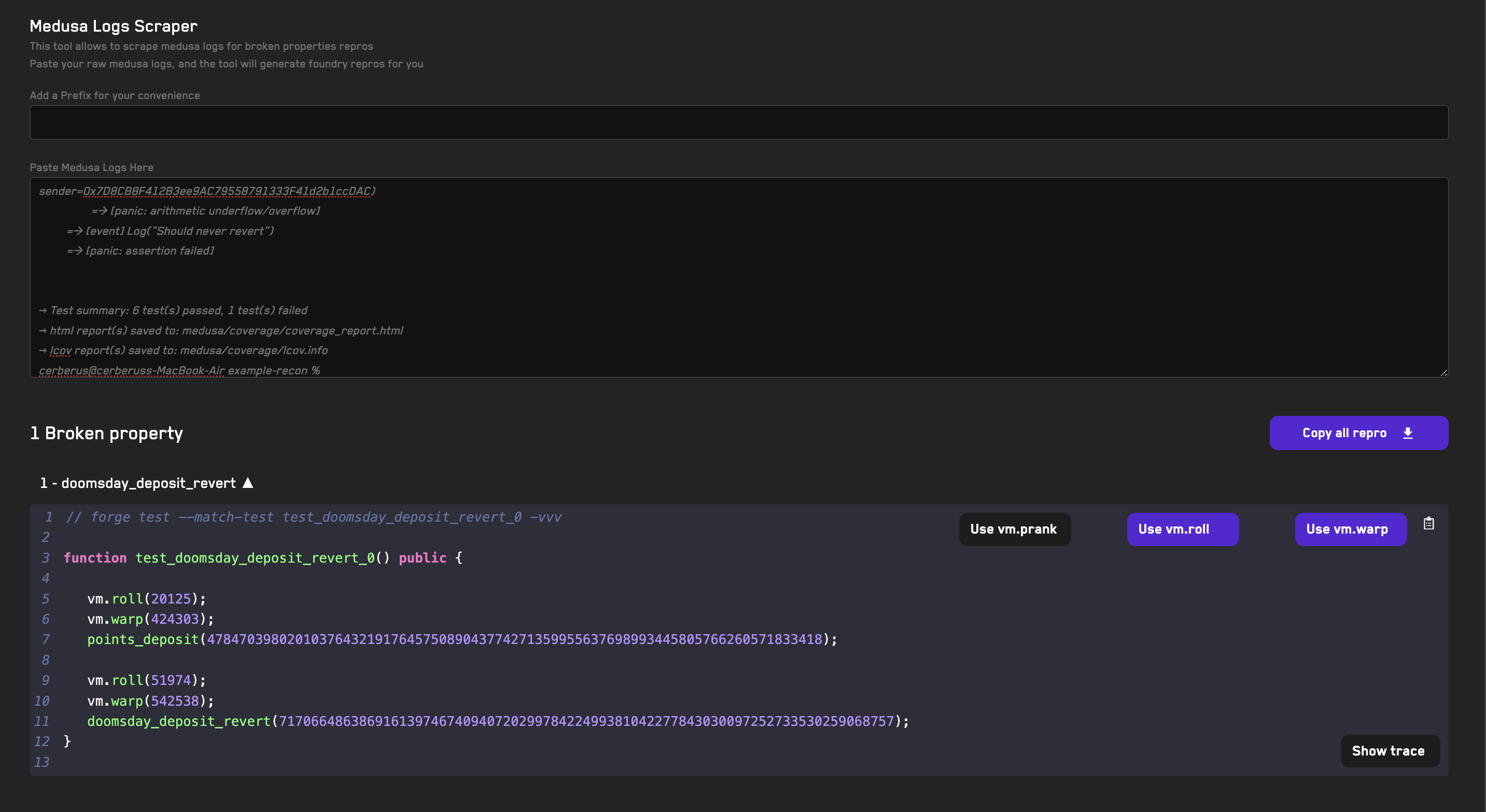

For all but the simplest call sequences this is very difficult to read and even harder to debug. This is why we made the Chimera Framework extremely opinionated, because we believe that reading Medusa and Echdina traces is a very slow and difficult way to debug broken properties.

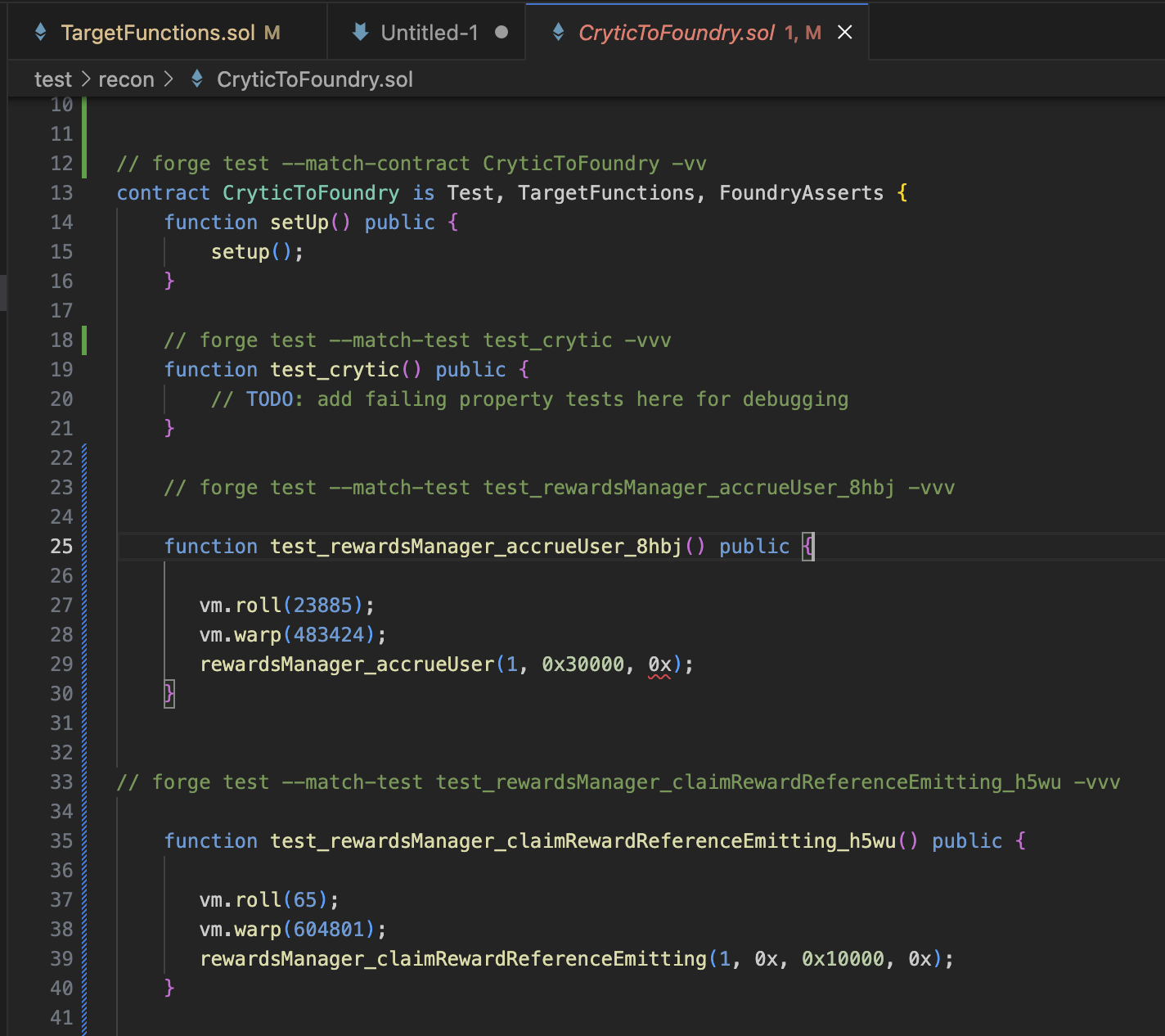

As a result of this, all of our templates come with the ability to reproduce broken properties as unit tests in Foundry.

So instead of debugging our broken property from the Medusa logs directly, we'll use Foundry:

- Copy the Medusa output logs in your terminal

- Go to the Medusa Log Scraper tool

- Paste the logs

- A reproducer unit test will be created for the broken property automatically

- Click the dropdown arrow to show the unit test

- Disable the

vm.prankcheatcode by clicking the button (as we're overriding Medusa's behavior) - Click on the clipboard icon to copy the reproducer

- Open the

CryticToFoundrycontract and paste the reproducer unit test - Run it with Foundry using the

forge test --match-test test_doomsday_deposit_revert_0 -vvvcommand in the comment above it

// forge test --match-contract CryticToFoundry -vv

contract CryticToFoundry is Test, TargetFunctions, FoundryAsserts {

function setUp() public {

setup();

}

// forge test --match-test test_doomsday_deposit_revert_0 -vvv

function test_doomsday_deposit_revert_0() public {

vm.roll(2);

vm.warp(573348);

points_deposit(235309800868430114045226835);

vm.roll(2);

vm.warp(573348);

doomsday_deposit_revert(102431335005787171573853953);

}

}

We now have a Foundry reproducer! This makes it much easier to debug because we can quickly test only the call sequence that causes the property to break and add logging statements wherever needed.

You'll also notice that although intuitively the property_powerIsMonotonic property should not break because we don't allow deposits to be withdrawn, the fuzzer breaks it:

⇾ [FAILED] Assertion Test: CryticTester.property_powerIsMonotonic()

Test for method "CryticTester.property_powerIsMonotonic()" resulted in an assertion failure after the following call sequence:

[Call Sequence]

1) CryticTester.points_deposit(uint88)(38781313) (block=9757, time=110476, gas=12500000, gasprice=1, value=0, sender=0x10000)

2) CryticTester.points_deposit(uint88)(0) (block=44980, time=367503, gas=12500000, gasprice=1, value=0, sender=0x20000)

3) CryticTester.property_powerIsMonotonic()() (block=44981, time=422507, gas=12500000, gasprice=1, value=0, sender=0x10000)

[Execution Trace]

=> [call] CryticTester.property_powerIsMonotonic()() (addr=0x7D8CB8F412B3ee9AC79558791333F41d2b1ccDAC, value=0, sender=0x10000)

=> [event] Log("property_powerIsMonotonic")

=> [panic: assertion failed]

Debugging the cause of this break is left as an exercise for the reader, but we'll learn some useful tricks in the next section below for debugging the broken property in points_deposit.

Debugging the overflow property

Running the reproducer for the doomsday_deposit_revert property we can clearly see that we get an over/underflow but it's not very clear from the call trace where this happens exactly:

├─ [843] Points::deposit(102431335005787171573853953 [1.024e26])

│ └─ ← [Revert] panic: arithmetic underflow or overflow (0x11)

├─ [7048] Utils::checkError(0x4e487b710000000000000000000000000000000000000000000000000000000000000011, "Panic(17)")

│ ├─ [6142] Utils::_getRevertMsg(0x4e487b710000000000000000000000000000000000000000000000000000000000000011)

│ │ ├─ [930] Utils::_checkIfPanic(0x4e487b710000000000000000000000000000000000000000000000000000000000000011)

│ │ │ └─ ← true

│ │ ├─ [5062] Utils::_getPanicCode(0x4e487b710000000000000000000000000000000000000000000000000000000000000011)

│ │ │ └─ ← "Panic(17)", false

│ │ └─ ← "Panic(17)", false

│ └─ ← true

├─ [0] VM::assertTrue(false, "should never revert due to under/overflow") [staticcall]

│ └─ ← [Revert] should never revert due to under/overflow

└─ ← [Revert] should never revert due to under/overflow

We can add console logs to the Points contract and the reproducer to see where exactly it overflows:

function deposit(uint88 amt) external {

console2.log("here 1");

depositAmount[msg.sender] += amt;

console2.log("here 2");

depositTime[msg.sender] = block.timestamp;

console2.log("here 3");

totalDeposits += amt;

console2.log("here 4");

token.transferFrom(msg.sender, address(this), amt);

}

function test_doomsday_deposit_revert_0() public {

console2.log("=== Before Deposit ===");

vm.roll(2);

vm.warp(573348);

points_deposit(235309800868430114045226835);

console2.log("=== Before Doomsday ===");

vm.roll(2);

vm.warp(573348);

doomsday_deposit_revert(102431335005787171573853953);

}

Which gives us the following console logs when we run the test:

=== Before Deposit ===

here 1

here 2

here 3

here 4

=== Before Doomsday ===

here 1

This indicates to us that the issue is in the second increment of depositAmount. If we check the type of depositAmount we see that it's a uint88.

contract Points {

mapping (address => uint88) depositAmount;

...

}

This indicates that we must be depositing more than type(uint88).max, and if we check if the sum of the deposited amounts in the test is greater than type(uint88).max, we get the following:

sum of deposits 337741135874217285619080788

type(uint88).max 309485009821345068724781055

sum of deposits > type(uint88).max true

So we can see that the issue happens because in our Setup we initially mint type(uint256).max to the actor so they can deposit more than type(uint88).max over multiple calls:

function setup() internal virtual override {

...

_finalizeAssetDeployment(_getActors(), approvalArray, type(uint256).max);

}

This means that to fix the broken property we either need to change the type of the depositAmount variable to uint256 or limit the amount that we initially mint to the actor. For our purposes we'll change the type of the depositAmount variable.

Now when we run the fuzzer we can see that the property no longer breaks:

^C⇾ Fuzzer stopped, test results follow below ...

⇾ [PASSED] Assertion Test: CryticTester.add_new_asset(uint8)

⇾ [PASSED] Assertion Test: CryticTester.asset_approve(address,uint128)

⇾ [PASSED] Assertion Test: CryticTester.asset_mint(address,uint128)

⇾ [PASSED] Assertion Test: CryticTester.doomsday_deposit_revert(uint88)

⇾ [PASSED] Assertion Test: CryticTester.points_deposit(uint88)

⇾ [PASSED] Assertion Test: CryticTester.switch_asset(uint256)

⇾ [PASSED] Assertion Test: CryticTester.switchActor(uint256)

⇾ [FAILED] Assertion Test: CryticTester.property_powerIsMonotonic()

Test for method "CryticTester.property_powerIsMonotonic()" resulted in an assertion failure after the following call sequence:

[Call Sequence]

1) CryticTester.points_deposit(uint88)(73786976294838206465) (block=19477, time=38924, gas=12500000, gasprice=1, value=0, sender=0x30000)

2) CryticTester.asset_mint(address,uint128)(0x7D8CB8F412B3ee9AC79558791333F41d2b1ccDAC, 79387721835223434743036999817) (block=43362, time=399548, gas=12500000, gasprice=1, value=0, sender=0x10000)

3) CryticTester.points_deposit(uint88)(0) (block=43362, time=399548, gas=12500000, gasprice=1, value=0, sender=0x10000)

4) CryticTester.property_powerIsMonotonic()() (block=43363, time=882260, gas=12500000, gasprice=1, value=0, sender=0x30000)

[Execution Trace]

=> [call] CryticTester.property_powerIsMonotonic()() (addr=0x7D8CB8F412B3ee9AC79558791333F41d2b1ccDAC, value=0, sender=0x30000)

=> [event] Log("property_powerIsMonotonic")

=> [panic: assertion failed]

⇾ Test summary: 7 test(s) passed, 1 test(s) failed

This is one of the key benefits of having properties defined, they allow you to check your codebase against any changes you make to ensure that you don't introduce new bugs.

Note that changing the

depositAmounttouint256only resolves this issue when we have a single actor that deposits, if there are multiple actors that deposit whose balances sum to more thantype(uint256).maxthe property will break again.

Now it's your turn, see if you can apply the techniques discussed here to figure out why the property_powerIsMonotonic breaks!

If you get stuck or need help, reach out to the Recon team in our discord!

Implementing Properties

Implementing properties is the most important part of invariant testing, here we'll look at the different types of properties that you can define and how these can be implemented (inlined or global) along with the different techniques that you can use to implement your properties as code using an ERC4626 vault as an example.

What are properties?

Properties allow us to define behaviors that we expect in our system. In the context of testing we can say that properties are logical statements about the system that we test after state-changing operations are made via a call to one of the target function handlers.

We can use the term invariants to specify properties of a system that should always hold true, meaning after any state-changing operation.

We can use an ERC20 token as an example and define one property and one invariant for it:

- Property: a user’s balance should increase only after calls to the

transferandmintfunctions - Invariant: the sum of user balances should never be greater than the

totalSupply

We prefer to use the term properties throughout this book because it covers invariants as well properties since invariants are a subset of properties but properties are not a subset of invariants.

Property types

In this presentation, Certora lays out the five fundamental types of properties we’re interested in when writing invariants.

Namely these types of properties are:

- Valid States - checking that the system only stays in one of the expected states

- State Transitions - checking transitions between valid/invalid states

- Variable Transitions - checking that variables only change to/from certain expected values

- High-Level Properties - checking broad system behavior

- Unit Tests - checking specific behavior within functions

For more info on understanding these different types of properties, see this post.

Inlined vs global properties

After having implemented many suites ourselves, we realized that methods to implement properties fall into major categories: inlined and global.

We call properties defined inside a function handler inlined, like the following from the Create Chimera App template:

function counter_setNumber2(uint256 newNumber) public asActor {

// same example assertion test as counter_setNumber1 using ghost variables

__before();

counter.setNumber(newNumber);

__after();

if (newNumber != 0) {

t(_after.counter_number == newNumber, "number != newNumber");

}

}

Because these properties are defined within the handlers themselves, they are only checked after the call to the target handler. This means that by definition they aren't required to hold for other function calls.

We call properties defined as standalone public functions in the Properties contract that read some state or ghost variable global; the following example is also taken from the Create Chimera App template:

function invariant_number_never_zero() public {

gt(counter.number(), 0, "number is zero");

}

Because global properties are publicly exposed functions, they can be called by the fuzzer after any call to one of the state-changing handlers (just like how boolean properties normally work). This lets us check that a property holds for any function call, so we can use it to implement our system's invariants.

Testing mode

Echidna and Medusa, the two primary fuzzers that we use, allow defining properties using assertions as well as boolean properties.

The same global property in the example above could be rewritten as an Echidna boolean property like so:

function echidna_number_never_zero() public returns (bool) {

return counter.number() == 0;

}

Any property that can be written as a boolean property however can also be writen using an assertion, simplifying the test writing process and allowing us to run only one job with Echidna in assertion mode rather than one job in assertion and one in property mode, since Echidna doesn't support using two testing modes at the same time.

Most professionals write all properties as assertions to run them with echidna

Example

We'll be using the scaffolding setup on this simplified ERC4626 vault repo on the book/example branch.

In the scaffolded setup linked to above, the handlers each explicitly clamp any addresses that receive shares using the

_getActor()function from theActorManager. This allows us to ensure that only actors that we've added to the Setup receive share tokens and makes it easier to check properties on them.

Vault Properties

We'll be looking at how we can implement the following properties on an ERC4626 vault.

- The

totalSupplyof the vault's shares must be greater than or equal to the shares accounted to each user. - The

depositfunction should never revert for a depositor that has sufficient balance and approvals. - The price per share shouldn't increase on removals.

1. totalSupply check

Looking at the property definition, we can see that it doesn't specify any operation that we should check the property after, so we can define this as a global property in the Properties contract.

Since this property only requires that we know the value of state variables after a given operation, we can just read these state values directly from the contract and make an assertion:

/// @dev Property: The `totalSupply` of the vault's shares must be greater than or equal to the shares accounted to each user

function property_total_supply_solvency() public {

uint256 totalSupply = vault.totalSupply();

// the only depositors in the system will be the actors that we added in the setup

address[] memory users = _getActors();

uint256 sumUserShares;

for (uint256 i = 0; i < users.length; i++) {

sumUserShares += vault.balanceOf(users[i]);

}

lte(sumUserShares, totalSupply, "sumUserShares must be <= to totalSupply");

}

Using the

/// @dev PropertyNatSpec tag makes clear to any collaborators what the property checks without them having to reason through the property themselves

We use the less-than-or-equal-to (lte) assertion in this case because we only care that the vault doesn't round up against the protocol. If it rounds in favor of the protocol, causing the sum of user balances to be less than the totalSupply, we can consider this acceptable because it still means the vault is solvent and users don't have more shares than expected.

2. Vault deposit never fails

This property is explicitly stating that the check should only be made for the deposit function. This means we can implement this property as an inlined property inside the vault_deposit function.

/// @dev Property: The `deposit` function should never revert for a depositor that has sufficient balance and approvals

function vault_deposit(uint256 assets) public updateGhosts asActor {

try vault.deposit(assets, _getActor()) {

// success

} catch (bytes memory reason) {

bool expectedError =

checkError(reason, "InsufficientBalance(address,uint256,uint256)") ||

checkError(reason, "InsufficientAllowance(address,address,uint256,uint256)");

// precondition: we only care about reverts for things other than insufficient balance or allowance

if (!expectedError) {

t(false, "deposit should not revert");

}

}

}

We use the checkError helper function from the Recon setup-helpers repo, which allows us to check the revert message from the call to make sure it's not reverting for the expected reasons of insufficient balance or allowance.

3. Price per share increase

This property is more open-ended, but we can conclude from the definition that the price per share shouldn't change for operations that remove assets from the vault.

Since we have two target functions that remove assets from the vault (withdraw/redeem), we can check this as a global property that excludes operations that don't remove assets from the vault. This simplifies the implementation so we don't implement the same property in the vault_withdraw and vault_redeem handler.

To allow us to track the operation of interest, we add the updateGhostsWithOpType modifier to the vault_redeem and vault_withdraw handlers:

function vault_redeem(uint256 shares) public updateGhostsWithOpType(OpType.REMOVE) asActor {

vault.redeem(shares, _getActor(), _getActor());

}

function vault_withdraw(uint256 assets) public updateGhostsWithOpType(OpType.REMOVE) asActor {

vault.withdraw(assets, _getActor(), _getActor());

}

We then define the following update in the BeforeAfter contract, which allows us to track the price per share before and after any operation using the updateGhostsWithOpType/updateGhosts modifiers:

function __before() internal {

_before.pricePerShare = vault.previewMint(10**vault.decimals());

}

function __after() internal {

_after.pricePerShare = vault.previewMint(10**vault.decimals());

}

We can then define a global property that checks that removal operations do not change the price per share:

function property_price_per_share_change() public {

if (currentOperation == OpType.REMOVE) {

eq(_after.pricePerShare, _before.pricePerShare, "pricePerShare must not change after a remove operation");

}

}

Running the fuzzer

Now with the properties implemented, all that's left to do is run the fuzzer to determine if any of the properties break.

Since we used the Create Chimera App template when scaffolding the above example, we can run Echidna locally using:

echidna . --contract CryticTester --config echidna.yaml

and Medusa using:

medusa fuzz

to run the job using the Recon cloud runner see the Running Jobs page.

Optimizing Broken Properties

Echidna's optimization mode is a powerful tool for understanding the maximum possible impact of a vulnerability discovered by a broken property.

Optimization mode often allows you to determine if the impact of a vulnerability can be greater than what's shown by the originally broken property or if it's minimal because it isn't increased by the optimization test.

As of the time of writing, optimization mode is only available in Echidna, not Medusa

What is optimization mode?

Optimization mode allows you to define a test function that starts with a special prefix (we tend to set this to optimize_ in the echidna.yaml), takes no arguments and returns an int256 value:

function optimize_price_per_share_difference() public view returns (int256) {

return maxPriceDifference;

}

In the above example the maxPriceDifference value would be set by one of our target function handlers, then Echidna would call all the function handlers with random values in an attempt to maximize the value returned by optimize_price_per_share_difference.

Example

We'll now look at an example of how we can use optimization mode to increase the severity of a finding discovered by a broken property using this ERC4626 vault as an example.

Defining the property

First, because this is an ERC4626 vault without the possibility of accumulating yield or taking losses we can define a standard property that states: a user should not be able to change the price per share when adding or removing.

This check ensures that a malicious user cannot perform actions that would make it favorable for them to arbitrage the share token/underlying asset or allow them to steal funds from other users by giving themselves a more favorable exchange rate.

We specify when adding or removing in the property because these are the only operations that change the amount of underlying assets and shares in the vault so these are the ones that would affect the share price.

Given that an ERC4626 vault has user operations that don't exclusively add or remove shares and assets from the vault (tranfer and approve), we can use the OpType enum in the updateGhostsWithOpType modifier in our target handlers to only check the property after a call to one of our target functions of interest:

function vault_deposit(uint256 assets) public updateGhostsWithOpType(OpType.ADD) asActor {

vault.deposit(assets, _getActor());

}

function vault_mint(uint256 shares) public updateGhostsWithOpType(OpType.ADD) asActor {

vault.mint(shares, _getActor());

}

function vault_redeem(uint256 shares) public updateGhostsWithOpType(OpType.REMOVE) asActor {

vault.redeem(shares, _getActor(), _getActor());

}

function vault_withdraw(uint256 assets) public updateGhostsWithOpType(OpType.REMOVE) asActor {

vault.withdraw(assets, _getActor(), _getActor());

}

We can then add updates to the price per share in the BeforeAfter contract like so:

function __before() internal {

_before.pricePerShare = vault.convertToShares(10**underlyingAsset.decimals());

}

function __after() internal {

_after.pricePerShare = vault.convertToShares(10**underlyingAsset.decimals());

}

The above checks how many shares would be received for a deposit of 1 unit of the underlying asset (using the underlyingAsset's decimal precision) giving us an implicit price per share.

Finally, we can implement our property as:

function property_user_cannot_change_price_per_share() public {

if(currentOpType == OpType.ADD || currentOpType == OpType.REMOVE) {

eq(_before.pricePerShare, _after.pricePerShare, "price per share should not change with user operations");

}

}

which simply checks that the pricePerShare stays the same after any of the user operations that add or remove tokens to the system (mint, deposit, withdraw and redeem).

For more details on how to implement properties using the above

OpTypeenum see this section.

Breaking the property

After a brief run of Echidna, the property is broken with less than 5000 tests run:

property_user_cannot_change_price_per_share(): failed!💥

Call sequence:

CryticTester.vault_mint(3)

CryticTester.property_user_cannot_change_price_per_share()

Traces:

emit Log(«price per share should not change with user operations»)

This indicates that something in the ERC4626Vault::mint function is allowing the user to manipulate the price per share.

However, after running the unit test that we generate with the Echidna log scraper tool we can see that the pricePerShare only changes by 1 wei:

[FAIL: price per share should not change with user operations: 1000000000000000000 != 999999999999999999] test_property_user_cannot_change_price_per_share_0() (gas: 182423)

Logs:

price per share difference 1

Given that any price manipulation by an attacker would allow them to perform arbitrage on the vault, we know this is already a vulnerability, but we can now create an optimization test to determine if the maximum price change is limited to this 1 wei value or if it can be made greater, potentially allowing an attacker to directly steal funds from other users as well.

Creating the optimization tests

Since we want to optimize the difference between the price per share, we can define two separate variables in our Setup contract for tracking this, one which tracks increases to the price per share and one that tracks decreases to it:

int256 maxPriceDifferenceIncrease;

int256 maxPriceDifferenceDecrease;

We can then define two separate optimization tests to optimize each of these values. If we were to only define one optimization test, we'd be losing information on other potential issue paths as the call sequence given at the end of the fuzzing run will only give us one maximized value.

For example, in our scenario if we only had one test for the price increasing and decreasing, if the price decrease was greater than the price increase, we'd only be able to analyze scenarios where it decreases. Given that we already knew from the initial property break that decreasing the price is possible, this would leave us unaware of exploits paths that could be executed with an increasing price.

We therefore define the following two optimization tests for the difference in price before and after a call to one of our handlers of interest in our Properties contract:

function optimize_user_increases_price_per_share() public returns (int256) {

if(currentOpType == OpType.ADD || currentOpType == OpType.REMOVE) {

if(_before.pricePerShare < _after.pricePerShare) {

maxPriceDifferenceIncrease = int256(_after.pricePerShare) - int256(_before.pricePerShare);

return maxPriceDifferenceIncrease;

}

}

}

function optimize_user_decreases_price_per_share() public returns (int256) {

if(currentOpType == OpType.ADD || currentOpType == OpType.REMOVE) {

if(_before.pricePerShare > _after.pricePerShare) {

maxPriceDifferenceDecrease = int256(_before.pricePerShare) - int256(_after.pricePerShare);

return maxPriceDifferenceDecrease;

}

}

}

which will set each of the respective maxPriceDifference variables accordingly.

Note that the tests above are optimizing the difference in price so they will allow us to confirm if we are able to change the price by more than the 1 wei which we already know is possible from the initial property break.

Running the optimization tests

We can then run the tests using optimization mode by either modifying the testMode parameter in the echidna.yaml config file or by passing the --test-mode optimization flag to the command we use to run Echidna. For our case we'll use the latter:

echidna . --contract CryticTester --config echidna.yaml --test-mode optimization --test-limit 100000

Note that we also increased the testLimit so that we can give the fuzzer plenty of chances to find a call sequence that optimizes the value. This is key in allowing you to generate a truly optimized value, in production codebases we tend to run optimization mode for 100,000,000-500,000,000 tests but theoretically for a test suite with many handlers and state variables that influence the value you're trying to optimize, the longer you run the tests for, the higher the possibility of finding an optimized value.

Typically when we've found a value that's sufficient to prove an increase in the severity of the vulnerability, by demonstrating that the value can be made greater than a small initial value in the original property break, we'll stop the optimization run.

Conversely, if the optimization run shows no increase after a few million tests it typically means it was incorrectly specified or there is in fact no way to increase the value further.

Note: stopping an Echidna optimization run early doesn't allow the call sequence that increased the value to be properly shrunk as of the time of writing (see this issue). If you've already found a sufficiently optimized value and don't want to wait until the run completes, you can stop the run and start a new run with a shorter

testLimitand reuse the corpus from the previous run to force Echidna to shrink the reproducer in the corpus.

After running the fuzzer for 100,000 runs for the above defined tests we get the following outputs in the console:

optimize_user_decreases_price_per_share: max value: 1000000000000000000

Call sequence:

CryticTester.asset_mint(0x2e234dae75c793f67a35089c9d99245e1c58470b,1005800180287214122)

CryticTester.vault_deposit(1)

...

optimize_user_increases_price_per_share: max value: 250000000000000000

Call sequence:

CryticTester.vault_deposit(2)

CryticTester.vault_deposit(1)

CryticTester.vault_deposit(1)

CryticTester.vault_withdraw(2)

from which we can see that an attacker can therefore not only decrease the price per share as our original property break implied, but they can also increase the price using a completely different call sequence. This is why it's key to define separate optimization tests for increases and decreases for whatever value your initial property breaks.

Investigating the issue

We can use the above call sequences with our Echidna log scraper tool to generate unit tests that allow us to debug the root cause of the issues by adding the following tests to our CryticToFoundry contract:

// forge test --match-test test_optimize_user_decreases_price_per_share_0 -vvv

function test_optimize_user_decreases_price_per_share_0() public {

// Max value: 1000000000000000000;

asset_mint(0x2e234DAe75C793f67A35089C9d99245E1C58470b,1005800180287214122);

vault_deposit(1);

}

// forge test --match-test test_optimize_user_increases_price_per_share_1 -vvv

function test_optimize_user_increases_price_per_share_1() public {

// Max value: 250000000000000000;

vault_deposit(2);

vault_deposit(1);

vault_deposit(1);

vault_withdraw(2);

}

Decreasing price

For the test_optimize_user_decreases_price_per_share_0 test, we see that the asset_mint is making a donation. We don't know which of the deployed contracts in the setup is deployed at the address to which the donation is made (0x2e234DAe75C793f67A35089C9d99245E1C58470b) but we can make an educated guess that it's the vault contract because if the test allows us to manipulate the share price it must be affecting the ratio of shares to assets in the vault.

Logging the value of the deployed vault in the test we can confirm this:

Logs:

vault 0x2e234DAe75C793f67A35089C9d99245E1C58470b

So essentially this test is telling us that: "if I donate a large amount then make a deposit I can decrease the price per share".

Investigating further we can see that the ERC4626Vault::deposit function calls ERC4626Vault::previewDeposit which calls ERC4626Vault::convertToShares:

contract ERC4626Vault is MockERC20 {

...

function deposit(uint256 assets, address receiver) public virtual returns (uint256) {

uint256 shares = previewDeposit(assets);

_deposit(msg.sender, receiver, assets, shares);

return shares;

}

...

function previewDeposit(uint256 assets) public view virtual returns (uint256) {

return convertToShares(assets);

}

...

function convertToShares(uint256 assets) public view virtual returns (uint256) {

uint256 supply = totalSupply;

if (supply == 0) return assets;

// VULNERABILITY: Precision Loss Inflation Attack

// This creates a subtle inflation vulnerability through precision loss manipulation

// The attack works by exploiting division-before-multiplication in specific scenarios

uint256 totalAssets_ = totalAssets();

// Step 1: Calculate share percentage first (division before multiplication)

uint256 sharePercentage = assets * 1e18 / totalAssets_; // Get percentage with 18 decimals

// Step 2: Apply percentage to total supply

uint256 shares = sharePercentage * supply / 1e18;

// The vulnerability: When totalAssets_ >> assets, sharePercentage rounds down to 0

// This allows attackers to:

// 1. Deposit large amount to inflate totalAssets

// 2. Make small deposits from other accounts that get 0 shares due to rounding

// 3. Withdraw their initial deposit plus the "donated" assets from failed deposits

return shares;

}

}

which identifies the bug that was planted.

If we expand the existing test, we can prove the observation in the comments that the first depositor's donation can drive the depositor's received shares for small deposits down to 0:

function test_optimize_user_decreases_price_per_share_0() public {

// Max value: 1000000000000000000;

asset_mint(0x2e234DAe75C793f67A35089C9d99245E1C58470b,1005800180287214122);

vault_deposit(1);

// switch to the other actor and deposit a small amount

switchActor(1);

vault_deposit(1e18);

console2.log("balance of actor 2 after deposit", vault.balanceOf(_getActor()));

}

Logs:

balance of actor 2 after deposit 0

this allows the attacker to steal shares of the other depositor(s) on withdrawal, an increase in severity of the originally identified bug of arbitraging by deflating the price.

Increasing price

For the test_optimize_user_increases_price_per_share_1 we can see that multiple small deposits followed by a withdrawal of a small amount allows the user to manipulate the price upward.

The root cause of this issue is the same but can you identify why it increases the price instead of decreasing it? What would the impact of being able to manipulate the price in this way?

Optimization Mode Gotchas

Coverage

When using optimization mode it's often best to run an extended job using the Recon cloud runner to increase the probability of finding a high maximized value by letting the fuzzer run for an extended period of time. If you need to stop this run midway through because you've already found a sufficiently high value you'll need to download the corpus so you can generate a shrunken reproducer locally (as mentioned in the Running the optimization tests section above).

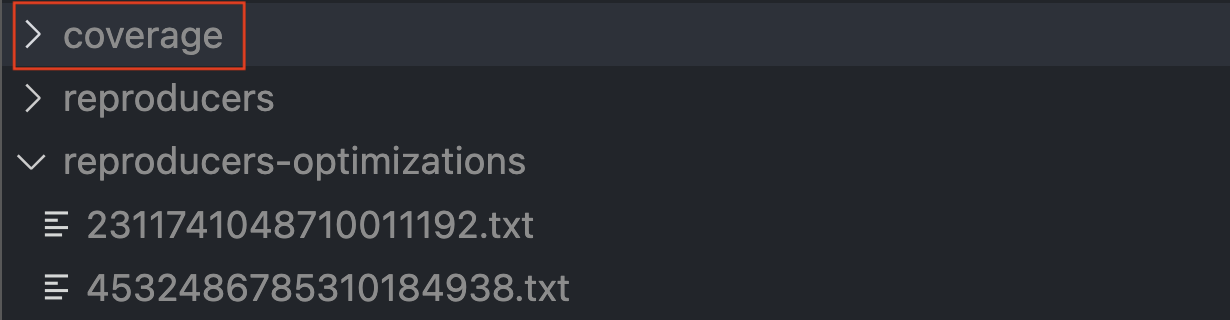

To reuse the corpus from a Recon cloud run locally you can download it using the Download Corpus button on the job page:

Unlike normal corpus reuse in Echidna however, when reusing a downloaded corpus for optimization mode you need to copy the contents of the reproducers-optimization directory into the coverage directory:

You can then run Echidna in optimization mode and you should be able to locally reproduce the maximized value from your cloud run.

Corpus

If the interface of your handlers change, you'll need to delete your old corpus and start with a fresh corpus, otherwise your old corpus will introduce call sequences that are no longer valid and cause your optimization run to be less efficient, possibly not being able to find a true maximum value.

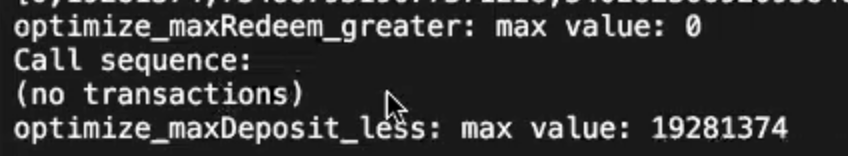

Zero Call Sequence

There's a bug in Echidna optimization mode that causes it to fail to effectively shrink if one of the optimization test functions returns a 0 value as its maximum with an empty call sequence, this causes echidna to crash and the shrinking to fail:

In the above image the optimize_maxRedeem_greater causes the shrinking for optimize_maxDeposit_less to fail.

To work around this issue you can either:

- use the

filterBlacklistconfiguration option set tofalsewith only the function you want to optimize passed tofilterFunctions - comment out all other optimization tests so that you only test the one of interest

Continue learning

To see how the Recon team used optimization mode in a real world project to escalate the severity of a vulnerability to critical during an audit of the Beraborrow codebase, check out this article.

To see how optimization mode was used to escalate the severity of a QA vulnerability discovered in the Liquity V2 governance codebase to a high severity, see this presentation.

Advanced Fuzzing Tips

This is a compilation of best practices that the Recon team has developed while using the Chimera framework.

Target Functions

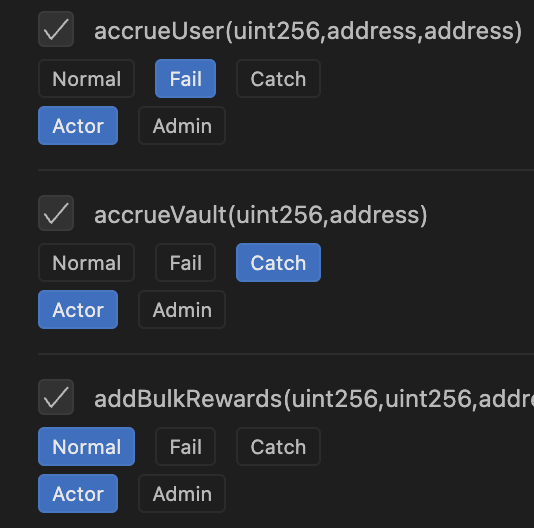

For each contract you want to fuzz (your target contract), select the state-changing functions (target functions) you want to include in your fuzzing suite. Wrap the function in a handler which passes in the input to the function call and allows the fuzzer to test random values.

// contract to target

contract Counter {

uint256 public number;

function increment() public {

number++;

}

}

abstract contract TargetFunctions {

// function handler that targets our contract of interest

function counter_increment() public asActor {

counter.increment();

}

}

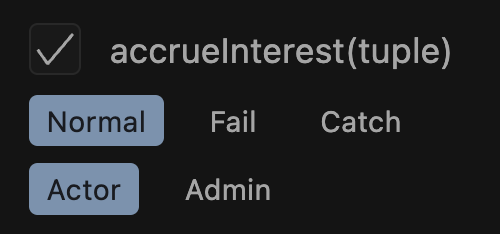

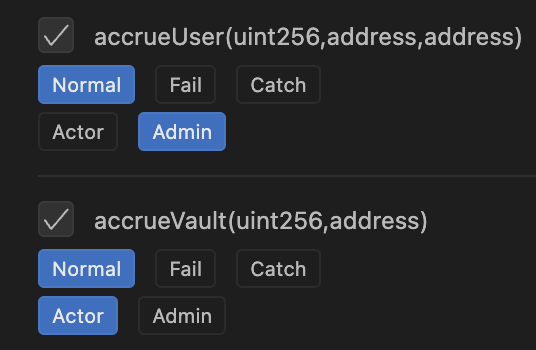

The easiest way to do this is with our Invariants Builder tool or with the Recon Extension directly in your code editor.

By using the asActor or asAdmin modifiers in combination with an Actor Manager ensures efficient fuzzing by not wasting tests that should be executed only by an admin getting executed by a non-admin actor. These modifiers prank the call to the target contract as the given actor, ensuring that the call is made with the actor as the msg.sender.

// contract to target

contract Yield {

address admin;

uint256 yield;

modifier onlyAdmin() {

require(msg.sender == admin);

}

function resetYield(address _newAdmin) public onlyAdmin {

yield = 0;

}

}

abstract contract TargetFunctions {

// calling this as an actor would waste fuzz calls because it would always revert so we use the asAdmin modifier

function yield_resetYield() public asAdmin {

yield.resetYield();

}

}

Clamping Target Functions

Clamping reduces the search space for the fuzzer, making it more likely that you'll explore interesting states in your system.

Clamped handlers should be a subset of all target functions by calling the unclamped handlers with the clamped inputs. This ensures that the fuzzer doesn't become overbiased, preventing it from exploring potentially interesting states, and also ensures checks for inlined properties which are implemented in the unclamped handlers are always performed.

contract Counter {

uint256 public number = 1;

function setNumber(uint256 newNumber) public {

if (newNumber != 0) {

number = newNumber;

}

}

}

abstract contract TargetFunctions {

function counter_setNumber(uint256 newNumber) public asActor {

// unclamped handler explores the entire search space; allows the input to be 0

counter.setNumber(newNumber);

// inlined property check in the handler always gets executed

if (newNumber != 0) {

t(counter.number == newNumber, "number != newNumber");

}

}

// NOTE: Clamped handler doesn't use `asActor` nor before after handlers, as it's going to use the unclamped handler

function counter_setNumber_clamped(uint256 newNumber) public {

// clamping prevents the newNumber passed into setNumber from ever being 0

newNumber = between(newNumber, 1, type(uint256).max);

// clamped handler calls the unclamped handler

counter_setNumber(newNumber);

}

}

Disabling Target Functions

Certain state-changing functions in your target contract may not actually explore interesting state transitions and therefore waste calls made by the fuzzer which is why our ABI to invariants tool only scrapes functions from the targeted contract ABI that are non-view/pure. Other functions (generally admin privileged ones) introduce so many false positives into properties being tested (usually via things like contract upgrades or token sweeping functions) that it's better to ignore them. Doing so is perfectly okay even though it will reduce overall coverage of the targeted contracts.

To make sure it's understood by others looking at the test suite that you purposefully meant to ignore a function we tend to prefer commenting out the handler or including a alwaysRevert modifier that causes the handler to revert every time it's called by the fuzzer.

Setup

This section covers a few rules we've come to follow in our engagements regarding setting up invariant testing suites.

- Create your own test setup

- Keep the story clean

- State exploration and coverage

- Programmatic deployment

- Implicit clamping

Create Your Own Test Setup

If you're working on a codebase which you didn't originally develop, it's tempting to use the Foundry test setup that the developers used for their unit tests, however this can lead to introducing any of the biases present in the existing setup into the invariant testing suite.

We've found that it's best to create the simplest setup possible starting from scratch, where you only deploy the necessary contracts of interest and periphery contracts.

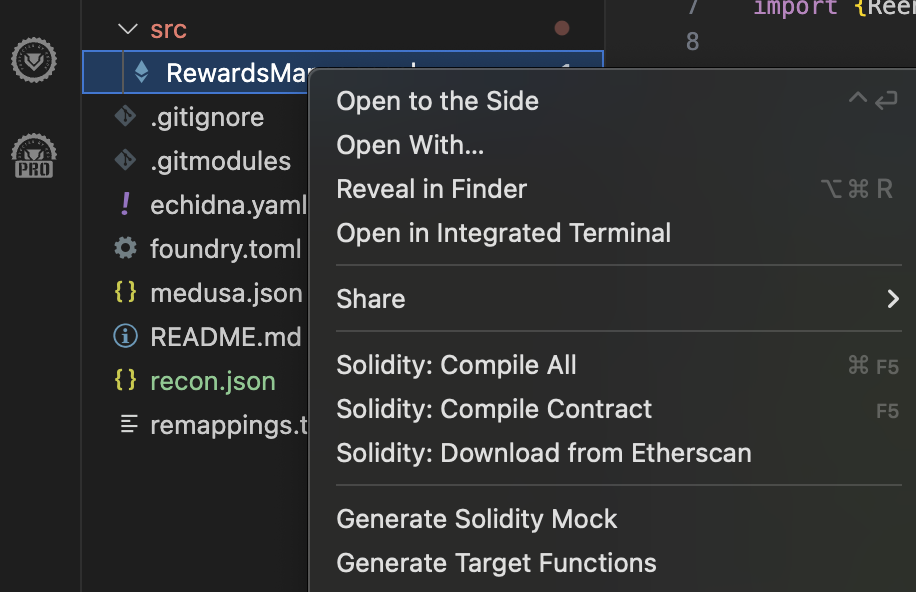

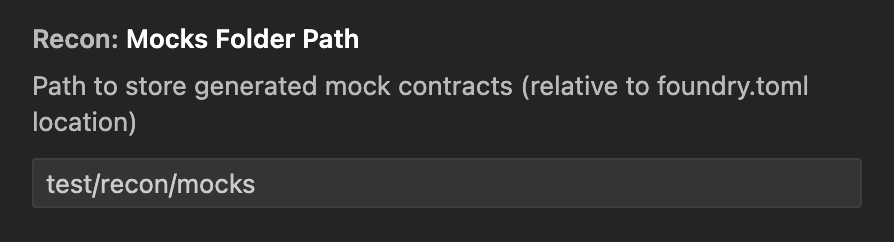

Periphery contracts can also often be mocked (see this section on how to automatically generate mocks using the Recon Extension) if their behavior is irrelevant to the contracts of interest. This further reduces complexity, making the suite more easily understood by collaborators and making it more likely you'll get full line coverage over the contracts of interest more quickly.

Keep The Story Clean

We call the "story" the view of the state changes made by a given call sequence in a tester. By maintaining only one state-changing operation per target function handler, it makes it much simpler to understand what's happening within a call sequence when a tool generates a reproducer that breaks a property that you've defined.

If you include multiple state-changing operations within a single handler it adds additional mental overhead when trying to debug a breaking call sequence because you not only need to identify which handler is the source of the issue, but the individual operation within the handler as well.

Take the following example of handlers defined for an ERC4626 vault:

// having multiple state-changing operations in one handler makes it difficult to understand what's happening in the story

function vault_deposit_and_redeem(uint256 assets) public asActor {

uint256 sharesReceived = vault.deposit(assets);

vault.redeem(sharesReceived);

}

// having separate target function handlers makes it easier to understand the logical flow that lead to a broken property in a reproducer call sequence

function vault_deposit(uint256 assets) public asActor {

uint256 sharesReceived = vault.deposit(assets);

}

function vault_redeem(uint256 assets) public asActor {

uint256 sharesReceived = vault.deposit(assets);

vault.redeem(sharesReceived);

}

Although this is a simplified example we can see that maintaining separate handlers for each state-changing function makes our story much simpler to read.

If you need to perform multiple state-changing operations to test a given property, consider making the function stateless as discussed in the inlined fuzz properties section

State Exploration and Coverage

The most important aspect of invariant testing is what you actually test, and what you actually test is implied by the target functions you define in your test suite setup.

Some contracts, like oracles, may be too complex to fully model (e.g. having to reach a quorom of 3 signers to update the price) because they would add overhead in terms of function handlers that require specific clamping and test suite setup.

In these cases, mocking is preferred because it simplifies the process for the fuzzer to change return values (price in the case of the oracle example) or allow more straightforward interactions to be made by your targeted contracts (without requiring things like input validation on the mocked contract's side).

Mocking is a destructive operation because it causes a loss of information, but the simplification it provides leads fuzzing and formal verification tools to explore possible system states much faster.

Adding additional handlers for things like token donations (transfering/minting tokens directly to one of your targeted contracts) can allow you to explore interesting states that otherwise wouldn't be possible if you only had handlers for your contracts of interest.

Programmatic Deployment

Most developers tend to write static deployments for their test suites where specific contract are deployed with some default values.

However, this can lead to missing a vast amount of possible system states, some of which would be considered admin mistakes (because they're related to deployment configurations), others which are valid system configurations that are never tested with fixed deployment parameters.

This is why we now prefer using programmatic deployments because they allow us to use the randomness of the fuzzer to introduce randomness into the system configuration that's being tested against. Although programmatic deployments make running a suite slower (because the fuzzer needs to find a valid deployment configuration before achieving meaningful line coverage), they turn simple suites into multi-dimensional ones.

This is best understood with an example of a system designed to accept multiple tokens. With a static deployment you may end up testing tokens with 6 and 18 decimals (the two most common extremes). However, with a programmatic deployment, you can test many token configurations (say all tokens with 6-24 decimals) to ensure that your system works with all of them.

We've standardized these ideas around programmatic deployments in our Manager contracts.

Programmatic deployment consists of adding 4 general function types:

- A

deployhandler, which will deploy a new version of the target (e.g a token via_newAssetin theAssetManager) - A

switchTohandler, to change the current target being used (e.g the_switchAssetfunction in theAssetManager) - A

getCurrentinternal function, to know which is the current active target (e.g the_getAssetfunction in theAssetManager) - A

getAllinternal function, to retrieve all deployments (e.g the_getAssetsfunction in theAssetManager)

Using the pattern of managers can help you add multi-dimensionality to your test setup and make tracking deployed components simpler.

Implicit Clamping

Based on your deployments, configuration, and the target functions you expose, a subset of all possible system states will be reachable. This leads to what we call implicit clamping as the states not reachable by your test suite setup will obviously not be tested and therefore have a similar effect as if they were excluded via clamping.

Mapping out what behavior is and isn't possible given your suite setup can therefore be helpful for understanding the limitations of your suite.

With these limitations outlined, you can write properties that define what behaviors should and shouldn't be possible given your setup. Checking these properties with fuzzing or formal verification won't necessarily prove they're always true, simply that they're true for the setup you've created.

This tends to be the source of bugs that are missed with fuzzing, as ultimately you can only detect what you test and if your system isn't configured so that it can reach a certain state in which there's a bug, you won't ever be able to detect it.

Ghost Variables

Ghost variables are a supporting tool that allow you to track system state over time. These can then be used in properties to check if the state has evolved as expected.

In the Chimera Framework we've concretized our prefered method of tracking ghost variables using a BeforeAfter contract which exposes an updateGhosts modifier that allows you to cache the system state before and after a call to a given target function.

As a rule of thumb it's best to avoid computation in your updates to the ghost variables as it ends up adding addtional operations that need to be performed for each call executed by the fuzzer, slowing down fuzzing efficiency.

Do NOT put any assertions in your ghost variables and avoid any operation or call that may cause a revert. These cause all operations in the call sequence created by the fuzzer to be undone, leaving the fuzzer with a blindspot because it will be unable to reach certain valid states.

Overcomplicating ghost variables has the unfortunate side effect of making the coverage report look promising as it will show certain lines fully covered but in reality might be applying implicit clamping by causing reverts that prevent edge cases for certain properties being explored since an update that reverts before the property is checked will not generate a reproducer call sequence.

contract Counter {

uint256 public number = 1;

function setNumber(uint256 newNumber) public {

if (newNumber != 0) {

number = newNumber;

}

}

}

abstract contract TargetFunctions {

// updateGhosts updates the ghost variables before and after the call to the target function

function counter_setNumber1(uint256 newNumber) public updateGhosts asActor {

counter.setNumber(newNumber);

}

}

abstract contract BeforeAfter is Setup {

struct Vars {

uint256 counter_difference;

uint256 counter_number;

}

Vars internal _before;

Vars internal _after;

modifier updateGhosts {

__before();

_;

__after();

}

function __before() internal {

// this line would revert for any value where the number before < the new number

_before.counter_difference = _before.counter_number - counter.number();

}

function __after() internal {

_after.counter_difference = _before.counter_number - counter.number();

}

}

In the above example we can see that the __before() function would revert for any values where the newNumber passed into setNumber is greater than the value stored in _before.counter_number. This would still allow the coverage report to show the function as covered however because for all newNumber values less than or equal to _before.counter_number the update would succeed. This means that fundamentally we'd be limiting the search space of the fuzzer, preventing it from exploring any call sequences where newNumber is greater than the value stored in _before.counter_number and potentially missing bugs because of it.

Grouping Function Types

A simple pattern for grouping function types using ghost variables so they can easily be used as a precondition to a global property check is to group operations by their effects.

For example, you may have multiple deposit/mint operations that have the effect of "adding" and others of "removing".

You can group these effects using an enum type as follows:

enum OpType {

GENERIC,

ADD,

REMOVE

}

modifier updateGhostsWithType(OpType op) {

currentOperation = op;

__before();

_;

__after();

}

modifier updateGhosts() {

currentOperation = OpType.GENERIC;

__before();

_;

__after();

}

And add the updateGhostsWithType modifier only to handlers which perform one of the operations of interest. All other handlers using the standard updateGhosts modifier will default to the GENERIC operation type so that you don't have to refactor any existing modifiers.

Now with the ability to elegantly track the current operation you can easily use the operation type to write a global property for it like so:

contract Counter {

uint256 public number = 1;

function increment() public {

number++;

}

function decrement() public {

number--;

}

}

abstract contract TargetFunctions {

// we set the respective operation type on our target function handlers

function counter_increment() public updateGhostsWithType(OpType.INCREASE) asActor {

counter.setNumber(newNumber);

}

function counter_increment() public updateGhostsWithType(OpType.DECREASE) asActor {

counter.setNumber(newNumber);

}

}

abstract contract BeforeAfter is Setup {

enum OpType {

INCREASE,

DECREASE

}

struct Vars {

uint256 counter_number;

}

Vars internal _before;

Vars internal _after;

modifier updateGhosts {

__before();

_;

__after();

}

modifier updateGhostsWithType(OpType op) {

currentOperation = op;

__before();

_;

__after();

}

function __before() internal {

_before.counter_number = counter.number();

}

function __after() internal {

_after.counter_number = counter.number();

}

}

abstract contract Properties is BeforeAfter, Asserts {

function propert_number_decreases() public {

// we can use the operation type as a precondition to a check in a global property

if(currentOperation == OpType.DECREASE)

gte(_before.counter_number, _after.counter_number, "number does not decrease");

}

}

}

Inlined Fuzz Properties

Inlined properties allow you to make an assertion about the system state immediately after a given state-changing target function call:

contract Counter {

uint256 public number = 1;

function setNumber(uint256 newNumber) public {

if (newNumber != 0) {

number = newNumber;

}

}

}

abstract contract TargetFunctions

{

function counter_setNumber(uint256 newNumber) public updateGhosts asActor {

try counter.setNumber(newNumber) {

// if the call to the target contract was successful, we make an assertion about the expected system state after

if (newNumber != 0) {

t(counter.number() == newNumber, "number != newNumber");

}

}

}

}

Repeating the same inlined property in multiple places should be avoided whenever possible.

Implementing the same inlined property in multiple places is essentially asking the fuzzer to break the property, not change the state (as an assertion failure prevents the call to the handler from completing), then to break the same property in a different way via another handler while already knowing that the property breaks. This is a waste of computational resources as you're asking the fuzzer to prove a fact that you already know instead of asking it to prove a new fact for which you're not sure if there's a proof (in the sense of a broken property, not a mathematical proof) or not.

If you find yourself implementing the same inline property multiple times, you should refactor them to only be assessed in one handler or checked as a global property using ghost variables.

If you can only implement a property as an inlined test but don't want multiple state changes to be maintained as it would make the story difficult to read, you can make your handler stateless using a stateless modifier.

modifier stateless() {

_;

// the revert is only made after the handler function execution completes

revert("stateless")

}

This causes the handler to revert only at the end of execution, meaning any coverage exploration will be maintained and any assertion failures will happen before the revert.

Exploring Rounding Errors

Fuzzing is a particularly useful technique for finding precision loss related issues, as highlighted by @DevDacian in this blog post.

Simply put the approach for finding such issues is as follows. For any place where there is a division operation which you believe may lead to potential loss of precision:

- Start by using and exact check in a property assertion to check if the return value of a variable/function is as expected.

- Run the fuzzer and allow it to find a case where the return value is not what's expected via a falsified assertion.

- Create an Echidna optimization test to increase the difference with the expected value.

The results of the optimization run will allow you to determine the severity of any potential precision loss and how it may be used as part of an exploit path.

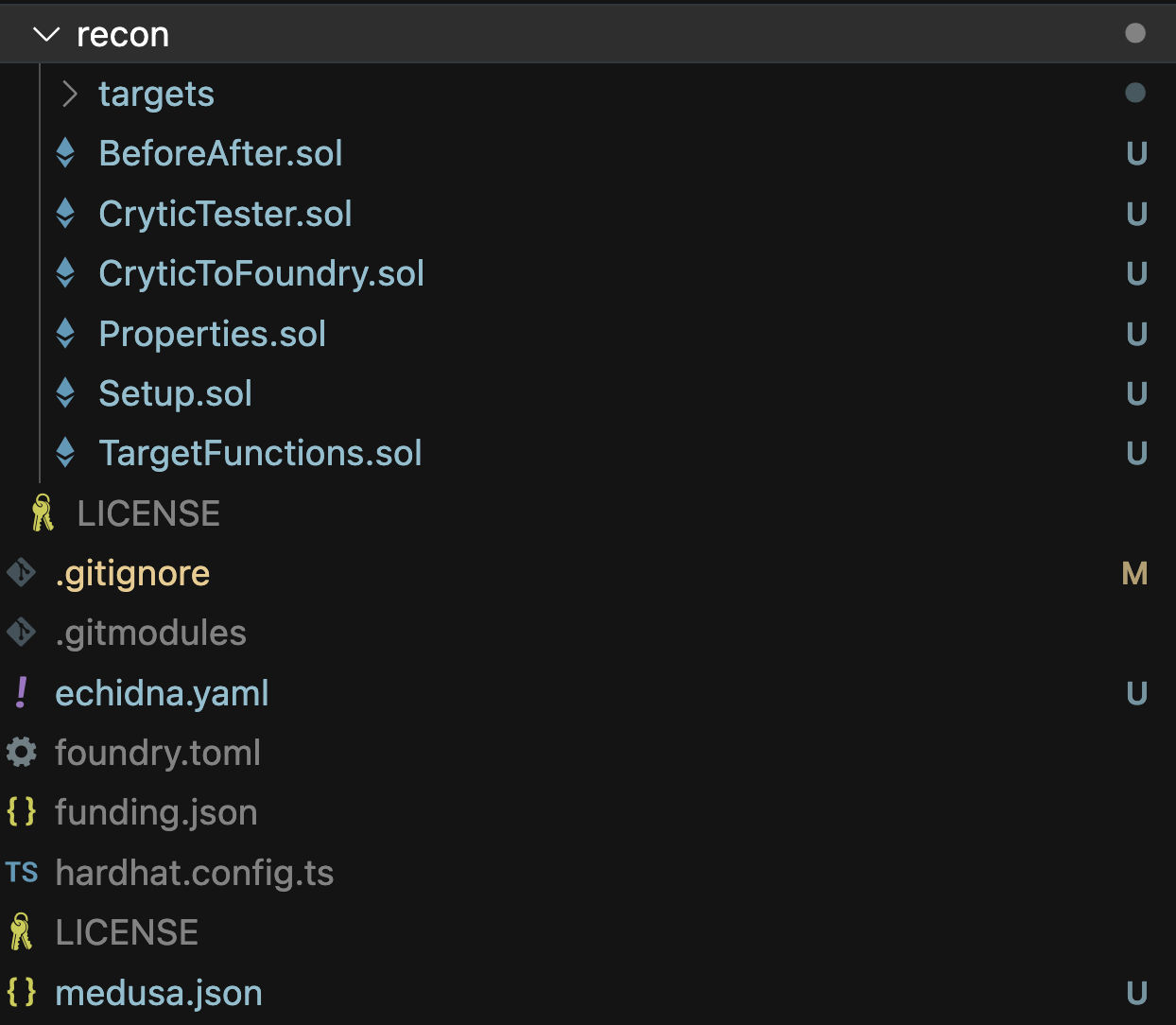

Chimera Framework

The Chimera framework lets you run invariant tests with Echidna and Medusa that can be easily debugged using Foundry.

The framework is made up of the following contracts:

When you build your handlers using Recon these files get automatically generated and populated for you. To use the framework in your project, you just need to download these files that get generated for you and add the Chimera dependency to your project:

forge install Recon-Fuzz/chimera

How It Works

The Chimera Framework uses an inheritance structure that allows all the supporting contracts to be inherited by the CryticTester contract so you can add target function handlers for multiple contracts all with a single point of entry for the fuzzer via CryticTester:

⇾ Fuzzer stopped, test results follow below ...

⇾ [PASSED] Assertion Test: CryticTester.add_new_asset(uint8)

⇾ [PASSED] Assertion Test: CryticTester.asset_approve(address,uint128)

⇾ [PASSED] Assertion Test: CryticTester.asset_mint(address,uint128)

⇾ [PASSED] Assertion Test: CryticTester.counter_increment()

⇾ [PASSED] Assertion Test: CryticTester.counter_increment_asAdmin()

⇾ [PASSED] Assertion Test: CryticTester.counter_setNumber1(uint256)

⇾ [PASSED] Assertion Test: CryticTester.counter_setNumber2(uint256)

⇾ [PASSED] Assertion Test: CryticTester.invariant_number_never_zero()

⇾ [PASSED] Assertion Test: CryticTester.switch_asset(uint256)

⇾ [PASSED] Assertion Test: CryticTester.switchActor(uint256)

⇾ [FAILED] Assertion Test: CryticTester.doomsday_increment_never_reverts()

The above output is taken from a run on the create-chimera-app template and we can see that we can call handlers for multiple contracts all through the interface of the

CryticTestercontract.

Ultimately this allows you to have one Setup contract that can deploy multiple contracts that you'll be targeting and make the debugging process easier because each broken property can be turned into an easily testable unit test that can be run in the CryticToFoundry contract.

This also simplifies the testing process, allowing you to use the following commands to run fuzz tests without having to modify any configurations.

For Echidna:

echidna . --contract CryticTester --config echidna.yaml

For Medusa:

medusa fuzz

The Contracts

We'll now look at the role each of the above-mentioned contracts serve in building an extensible and maintainable fuzzing suite. We'll be looking at examples using the create-chimera-app template project.

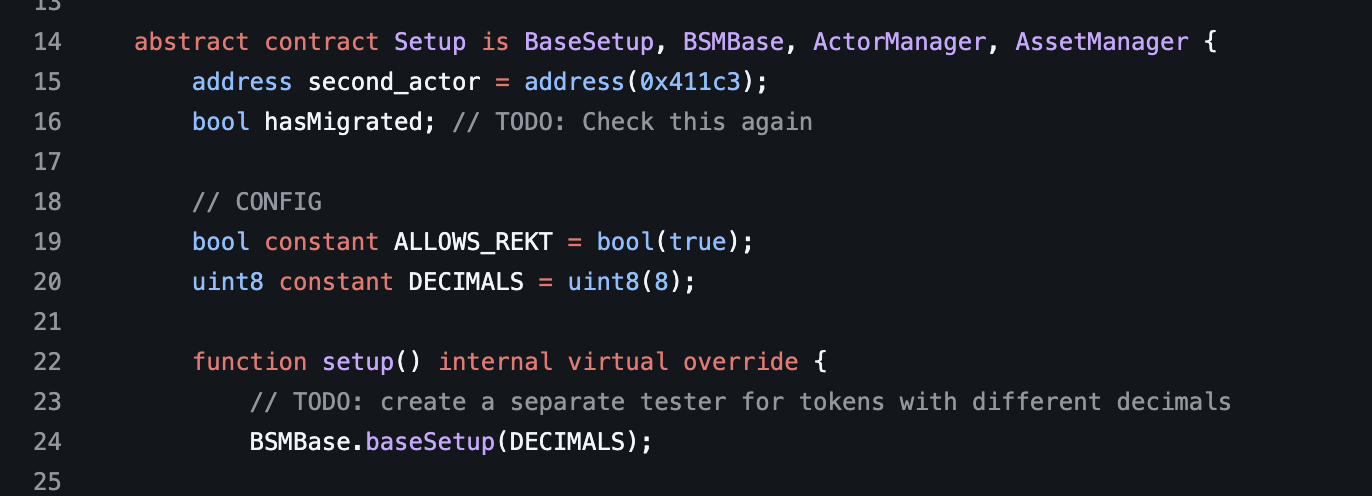

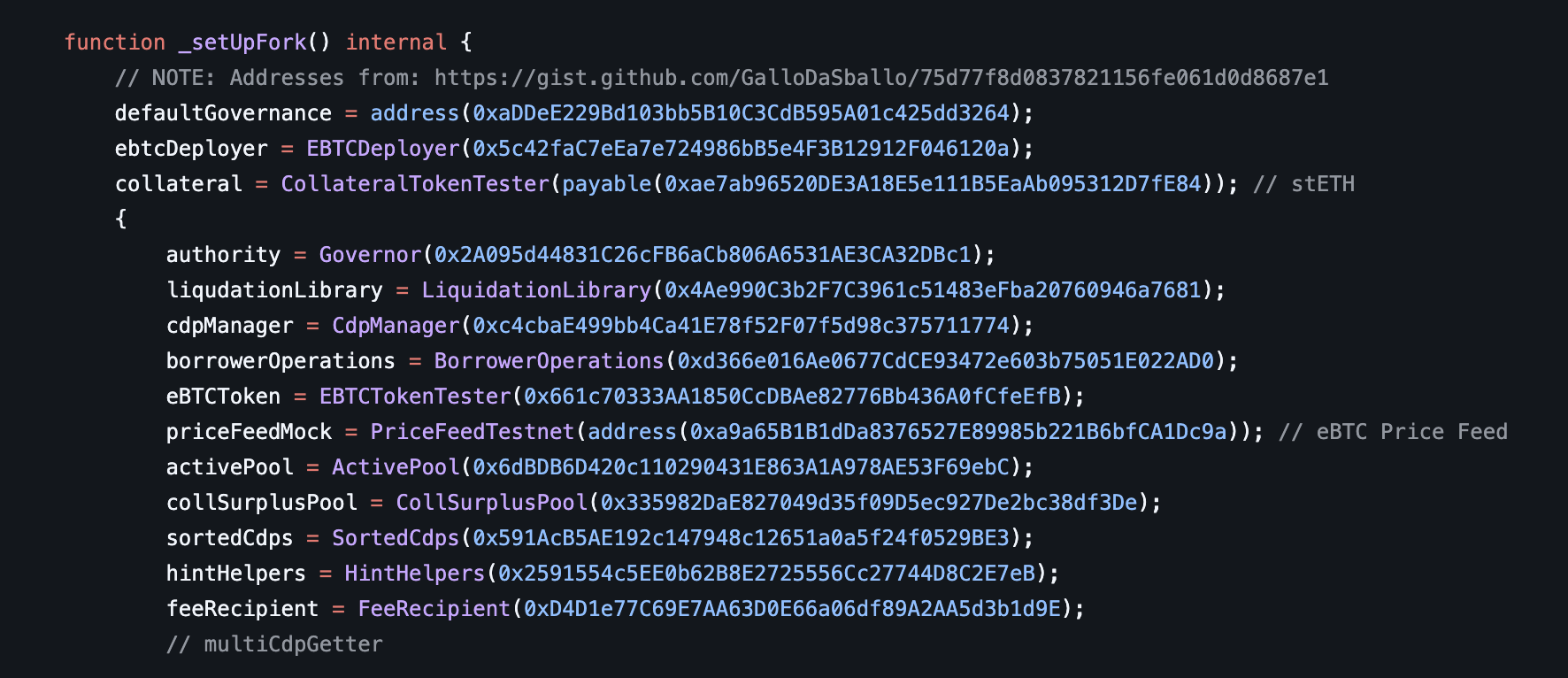

Setup

This contract is used to deploy and initialize the state of your target contracts. It's called by the fuzzer before any of the target functions are called.

Any contracts you want to track in your fuzzing suite should live here.

In our create-chimera-app template project, the Setup contract is used to deploy the Counter contract:

abstract contract Setup is BaseSetup {

Counter counter;

function setup() internal virtual override {

counter = new Counter();

}

}

TargetFunctions